The Graviton 4 chip, left, is a general-purpose microprocessor chip used by SAP, among other things, for large workloads. The Trainium 2 chip is an accelerator chip intended for training very important neural networks. Amazon AWS

re:Invent 2023 (Las Vegas) – During its annual conference for developers, Amazon announced yesterday Tuesday a new version of Trainium 2, its chip dedicated to training neural networks. Trainium 2 is specifically designed for training so-called large language models (LLMs) and foundation models. These are generative AI programs such as OpenAI’s GPT-4.

The company also unveiled a new version of its more generic-use chip, Graviton 4. It also said it was expanding its partnership with Nvidia to run Nvidia’s most powerful chips in its cloud computing service.

Trainium 2 is designed to manage neural networks with billions of billions of parameters. These parameters are the neural weights of the networks. And it is these weights which make it possible to optimize the algorithms of the AI program, but also to guarantee their capacity for scalability and power.

“Train an LLM with 300 billion parameters in a few weeks rather than a few months”

Trillions of parameters have become something of an obsession for the AI industry. For what ? Because the human brain contains 100 billion neuronal connections. And this parameter volume makes it seem like a neural network program with billions of billions of parameters is connected to the human brain. Whether this is true or not.

The AI chips are “designed to deliver up to four times faster training performance and three times more memory capacity” than their predecessor, the Trainium chips launched in 2021, “while improving efficiency energy (performance/watt) up to two times,” Amazon said.

Amazon makes the chips available in instances of its EC2 cloud service, called “Trn2” instances. The instance includes 16 Trainium 2 chips working in concert, which can be scaled to 100,000 instances, according to Amazon. These larger instances are interconnected using the Elastic Fabric Adapter network system, which can provide a total computing power of 65 exaFLOPs. (One exaFLOP is one billion billion floating point operations per second).

At this scale of computation, Amazon said, “customers can train an LLM of 300 billion parameters in weeks rather than months.”

Graviton 4: 30% higher computing performance

AWS is continuing this infrastructure design work in conjunction with Anthropic, the startup in which it has invested $4 billion. A startup whose founders made a transfer with OpenAI. And this investment puts Amazon in a position to compete with the Microsoft / OpenAI alliance.

The Graviton 4 chip, also announced Tuesday, is based on an ARM design, and competes with processors from Intel and Advanced Micro Devices, based on the old x86 standard. The Graviton 4 offers 30% higher computing performance than the previous generation Graviton 3 also launching in 2021, Amazon said.

Unlike Trainium chips intended for AI training, Graviton processors are designed to run more traditional workloads. Amazon AWS said customers – including Datadog, DirecTV, Discovery, Formula 1, Nielsen, Pinterest, SAP, Snowflake, Sprinklr, Stripe and Zendesk – use Graviton chips “to run a wide range of workloads, such as databases, analytics, web servers, batch processing, ad serving, application servers, and microservices.”

SAP said it achieved “35% higher performance for analytical workloads” with these chips, running its HANA in-memory database.

These announcements follow those of Microsoft last week on its first chips for AI. Google, the other titan of the cloud, innovated in 2016 with its first chip dedicated to AI, the TPU, or Tensor Processing Unit, of which it has since offered several generations.

AWS, precursor on GH200

In addition to the two new chips, Amazon has expanded its strategic partnership with AI chip giant Nvidia. AWS will be the first cloud service to use Nvidia’s upcoming Grace Hopper GH200 multi-chip product, which combines the ARM-based Grace processor and the Hopper H100 GPU chip.

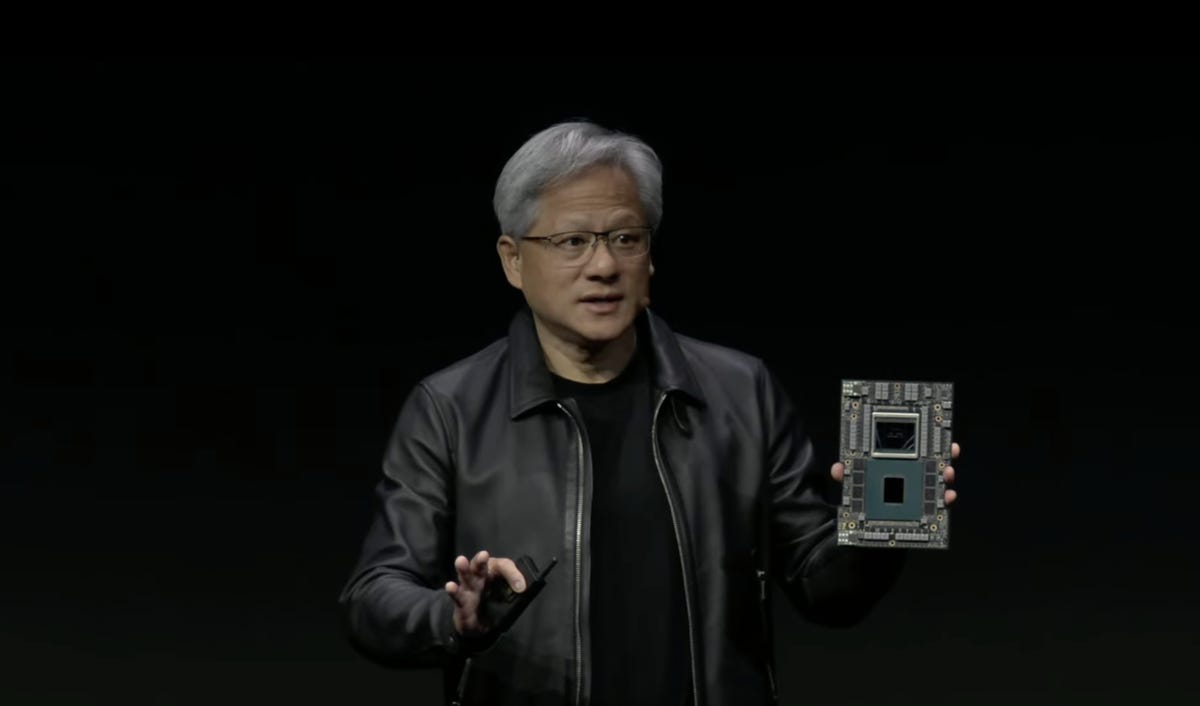

Nvidia CEO Jensen Huang and AWS CEO Adam Selipsky on stage at re:Invent 2023.

GH200 is expected to be delivered next year. They will be hosted on AWS via Nvidia’s specially designed AI servers, DGX, which the two companies say will accelerate the training of neural networks with more than a trillion parameters.

Nvidia said it would make AWS its “primary cloud provider for its ML research and development.”

Nvidia CEO Jensen Huang and the next iteration of his company’s CPU and GPU combination, the Grace Hopper “GH200” “superchip.” Nvidia

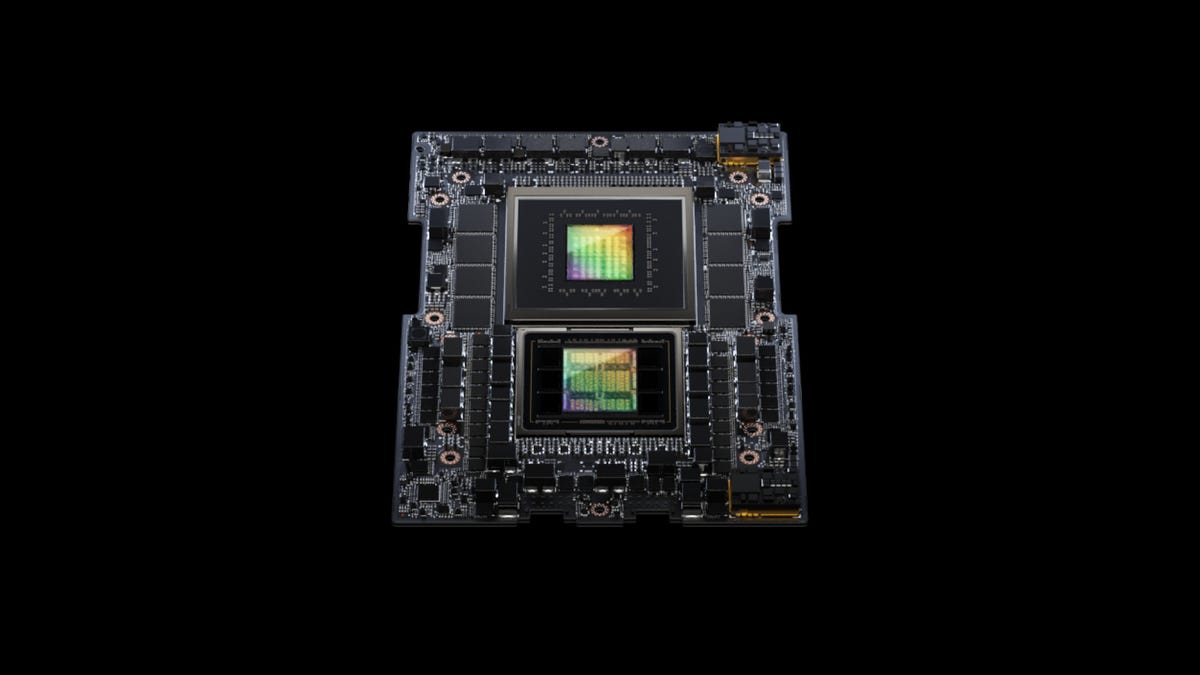

The GH200 chip combines the CPU and GPU, named NVLink

The GH200 chip combines the CPU and GPU, with faster memory and ultra-fast connectors, called NVLink. Nvidia CEO Jensen Huang made this announcement in August 2023.

If the initial Grace Hopper superchip contained 96 gigabytes of HBM memory, this new version contains 140 gigabytes of HBM3e memory, the next high-bandwidth memory standard. HBM3e increases the data rate feeding the GPU to 5 terabytes (trillion bytes) per second, compared to 4 terabytes in the original Grace Hopper.

“The chips are in production, we will sample them at the end of the year, or thereabouts, and we will be in production at the end of the second quarter [2024]”, said Jensen Huang during the presentation.

The GH200, like the original chip, features 72 ARM-based CPU cores and 144 GPU cores.

Stacked GH200s

Jensen Huang assures that the GH200 can be connected to a second GH200 in a dual configuration server, for a total of 10 terabytes of HBM3e memory bandwidth.

GH200 is the next version of the Grace Hopper superchip, designed to share the work of artificial intelligence programs through tight coupling between the CPU and GPU. Nvidia

HBM began replacing the previous GPU memory standard, GDDR, in 2015, due to increased demand for memory. HBM is a configuration of memory “stacked” vertically on top of each other and connected to each other.

Source: “ZDNet.com” and “ZDNet.com”