Climatologists have spent decades accumulating data on how the weather has evolved in various parts of the globe. One example is the ERA5 project, a climate record going back to 1950, developed by the European Centre for Medium-Range Weather Forecasts (ECMWF). It is enough to reconstruct a kind of simulation of the Earth’s weather over time, recording wind speed, temperature, atmospheric pressure and other variables hour by hour.

Last week, Google’s DeepMind announced what it called a turning point in using all that data to make weather forecasts at a lower cost. Using a single artificial intelligence chip, Google’s Tensor Processing Unit (TPU), DeepMind scientists were able to run a program that could predict weather conditions more accurately than a traditional model running on a supercomputer.

DeepMind’s paper is published in the scientific journal Science, along with a research article calling the breakthrough a “revolution” in the world of weather forecasting.

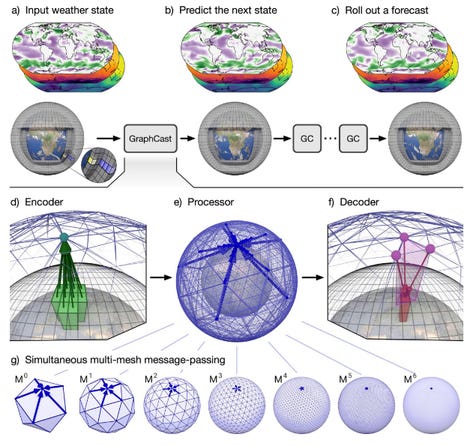

Several decades of simulated weather data from ERA5 are fed into the GraphCast graph network. GraphCast then predicts the next measurement. Image: Google

GraphCast does not replace traditional forecasting models

GraphCast, as the program is called, does not replace traditional forecasting models, according to Remi Lam and his colleagues at DeepMind. Rather, they view it as a “complement” to existing methods. Indeed, GraphCast is possible only because climate scientists built the existing algorithms that were used to “reanalyze” the weather, that is, to go back in time and compile the enormous volume of daily data in ERA5. Without this painstaking effort to create a global weather model, there would be no GraphCast.

The challenge Remi Lam and his team took on was to take a number of weather readings from ERA5 and see whether their program, GraphCast, could predict never-before-seen readings better than the gold standard of weather forecasting, a system called HRES, also developed by ECMWF.

HRES, which stands for High RESolution Forecast, predicts the weather for the next ten days, worldwide, in about an hour of computation, for areas measuring roughly 10 kilometers on a side. HRES is made possible by mathematical models that researchers have developed over decades. It is “enhanced by highly trained experts,” which, while valuable, “can be a lengthy and expensive process,” write Lam and his team, and it carries the multimillion-dollar computational cost of supercomputers.

It takes a month for 32 TPU chips working in concert to train GraphCast on ERA5 data

The question then becomes whether a form of deep learning AI, with its automatically generated model, could match this model built by human scientists.

GraphCast takes weather data such as temperature and air pressure and represents it as a single point for each area of the globe. Each point is connected to the weather conditions in neighboring areas by so-called “edges”. Think of the Facebook social graph, where each person is a point connected to their friends by a line. The Earth’s atmosphere then becomes a mass of points, with each area connected by lines representing how its weather conditions relate to those in the neighboring area.

This is the “graph” in GraphCast. Technically, it belongs to a well-established area of deep learning called graph neural networks (GNNs). A neural network is trained to determine how the points and lines are related, and how these relationships may change over time.
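To make the points-and-lines idea concrete, here is a minimal sketch of a weather grid as a graph, with one round of information passing between neighbors. It is a toy under stated assumptions, not GraphCast’s architecture: the grid layout, the `build_grid_graph` and `message_passing_step` helpers and the fixed `self_weight` are all hypothetical. In a real graph neural network the blending rule is learned from data rather than fixed.

```python
from collections import defaultdict


def build_grid_graph(n_rows, n_cols):
    """Connect each grid cell to its north/south/east/west neighbors.

    Columns wrap around (longitude is periodic); rows do not.
    """
    edges = defaultdict(list)
    for r in range(n_rows):
        for c in range(n_cols):
            node = (r, c)
            edges[node].append((r, (c + 1) % n_cols))  # east
            edges[node].append((r, (c - 1) % n_cols))  # west
            if r > 0:
                edges[node].append((r - 1, c))  # north
            if r < n_rows - 1:
                edges[node].append((r + 1, c))  # south
    return edges


def message_passing_step(values, edges, self_weight=0.5):
    """Blend each node's value with the mean of its neighbors' values.

    A trained GNN would replace this fixed blend with learned functions.
    """
    updated = {}
    for node, neighbors in edges.items():
        neighbor_mean = sum(values[n] for n in neighbors) / len(neighbors)
        updated[node] = self_weight * values[node] + (1 - self_weight) * neighbor_mean
    return updated


# Toy usage: a 3x4 grid at 10 degrees with one warm cell at 20 degrees.
edges = build_grid_graph(3, 4)
temps = {node: 10.0 for node in edges}
temps[(1, 1)] = 20.0
temps_next = message_passing_step(temps, edges)
```

After one step the warm cell has cooled toward its neighbors and the cells next to it have warmed slightly, which is the sense in which conditions in one area influence those next door.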

Armed with the GraphCast neural network, Lam and his team took 39 years of ERA5 data on air pressure, temperature, wind speed and more, then measured how well the program could predict what would happen next over a 10-day period, compared with HRES.

It takes a month for 32 TPU chips working in concert to train GraphCast on ERA5 data. This is the training process, in which the neural network’s parameters (its “weights”) are tuned so that it can make reliable predictions. Next, a portion of ERA5 data that was set aside, known as “held-out” data, is fed into the program to see whether the trained GraphCast can predict, from those data points, how they will evolve over ten days. In effect, it is predicting the weather within this simulated data.
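The evaluation described above can be sketched in a few lines: split the record by date so the test years are never seen in training, then roll a one-step model forward by feeding each prediction back in as the next input. Everything here is an illustrative assumption rather than DeepMind’s code: the `split_by_year`, `rollout` and `rmse` helpers, the placeholder years, the 6-hour step length and the stand-in `toy_step_model` are all hypothetical.

```python
def split_by_year(records, first_test_year):
    """Split (year, state) records: earlier years train, later years are held out."""
    train = [r for r in records if r[0] < first_test_year]
    held_out = [r for r in records if r[0] >= first_test_year]
    return train, held_out


def rollout(step_model, initial_state, n_steps):
    """Autoregressive forecast: each prediction becomes the next input."""
    states = []
    state = initial_state
    for _ in range(n_steps):
        state = step_model(state)
        states.append(state)
    return states


def rmse(predicted, observed):
    """Root-mean-square error between two equal-length sequences."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return (sum(squared) / len(squared)) ** 0.5


# Placeholder records: one scalar "state" per year (assumed years, toy values).
records = [(year, float(year - 1979)) for year in range(1979, 2022)]
train, held_out = split_by_year(records, first_test_year=2018)

# Assumed step length of 6 hours, so a 10-day forecast is 40 steps.
STEP_HOURS = 6
n_steps = 10 * 24 // STEP_HOURS


def toy_step_model(state):
    """Stand-in for a trained network; a real model would be learned from `train`."""
    return 0.5 * state + 1.0


forecast = rollout(toy_step_model, 0.0, n_steps)
```

Scoring then amounts to comparing `forecast` with the held-out observations at each lead time, for example with `rmse`, and doing the same for the baseline.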

“GraphCast performs significantly better” than HRES in 90% of prediction tasks

The authors observe that “GraphCast performs significantly better” than HRES in 90% of prediction tasks. GraphCast also outperforms HRES in predicting how extreme heat and cold events take shape. They also note that HRES performs better at forecasting the stratosphere than at forecasting surface weather changes.

It is important to understand that GraphCast is not forecasting live weather for the days ahead. What it accomplished was a controlled experiment on weather data known in advance, not live data.

Lam and his team note that one of GraphCast’s limitations is that it no longer performs well beyond a 10-day horizon.

GraphCast becomes “fuzzy” when things become more uncertain

“Uncertainty increases when lead times are longer,” they say. GraphCast becomes “fuzzy” when things become more uncertain. This suggests that they need to modify GraphCast to handle the greater uncertainty of longer time frames, most likely by creating an “ensemble” of overlapping forecasts. “Developing probabilistic forecasts that model uncertainty more explicitly […] is a crucial next step,” write Lam and his team.
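The idea of running several overlapping forecasts can be illustrated with a small sketch: start several runs from slightly different initial conditions and watch how far they drift apart as the lead time grows. This is a toy under stated assumptions, not a method from the paper. The logistic map stands in for a chaotic system, and `ensemble_forecast`, the perturbation size and the member count are hypothetical choices.

```python
import statistics


def logistic_step(x, r=3.9):
    """One step of the logistic map, a classic toy chaotic system."""
    return r * x * (1.0 - x)


def ensemble_forecast(step_model, initial_state, n_members, perturbation, n_steps):
    """Run several forecasts from slightly different starting points.

    Returns the ensemble mean and spread (population std dev) at each step.
    """
    # Evenly spaced offsets centered on zero keep the example deterministic.
    offsets = [(k - (n_members - 1) / 2) * perturbation for k in range(n_members)]
    members = [initial_state + offset for offset in offsets]
    means, spreads = [], []
    for _ in range(n_steps):
        members = [step_model(m) for m in members]
        means.append(statistics.mean(members))
        spreads.append(statistics.pstdev(members))
    return means, spreads


means, spreads = ensemble_forecast(
    logistic_step, 0.3, n_members=5, perturbation=1e-4, n_steps=40
)
```

Early on the members agree closely, so the spread is tiny. At long lead times they disagree widely, and the spread becomes an explicit measure of the uncertainty that a single forecast cannot express.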

Interestingly, DeepMind has big ambitions for GraphCast. Not only is GraphCast part of what should be a family of climate models, but it is part of a broader interest in simulation. The program works on global data that simulates what is happening over time. Lam and his team suggest that other phenomena, not just weather, can be mapped and predicted in this way.

“GraphCast may open new avenues for other important problems in geospatiotemporal prediction,” they write, “including climate and ecology, energy, agriculture, human and biological activity, as well as other complex dynamic systems.

“We believe that learning simulators trained on rich, real-world data will be critical to advancing the role of machine learning in the physical sciences.”

Source: “ZDNet.com”