Many artificial intelligence features, some of them experimental, are not deployed in France or, more generally, in the European Union. The cause: legislation that discourages large companies from launching their AI tools on the Old Continent. Here is an overview of what is blocking them and how they could resolve the problem.

Very often on Frandroid, we report on developments in ChatGPT, Copilot, Gemini, Midjourney and others. Sometimes these are experimental features for a few users; sometimes they are almost global launches. Almost, because one geographical area is often left out: the 27-member European Union, which includes France but also our French-speaking friends in Belgium. New products also sometimes arrive only several months after they launch in other countries.

What explains all this? There is really one main answer: European legislation. Here is why “Europe is behind on generative AI”.

GDPR, Digital Markets Act, AI Act: European legislation bothers Gamam

Several European laws require large companies to adapt if they want to deploy their AI systems on the Old Continent.

GDPR

We will soon celebrate five years of the GDPR, the General Data Protection Regulation, which entered into force on May 24, 2016 and has applied since May 25, 2018. The law is now fundamental for European Internet users and has brought about many changes, both in terms of transparency and in restrictions on the tracking of Internet users. It is now accused of hindering innovation, which the CNIL denies: for the Commission, that is a misconception.

The problem with training and improving an LLM (a large language model, i.e. the engine of a chatbot) lies in the datasets used. They may contain personal data, either scraped from the Internet by bots or collected from the users of a chatbot. It is this use of data that “poses risks to people, which must be taken into account in order to develop AI systems”, according to the CNIL.

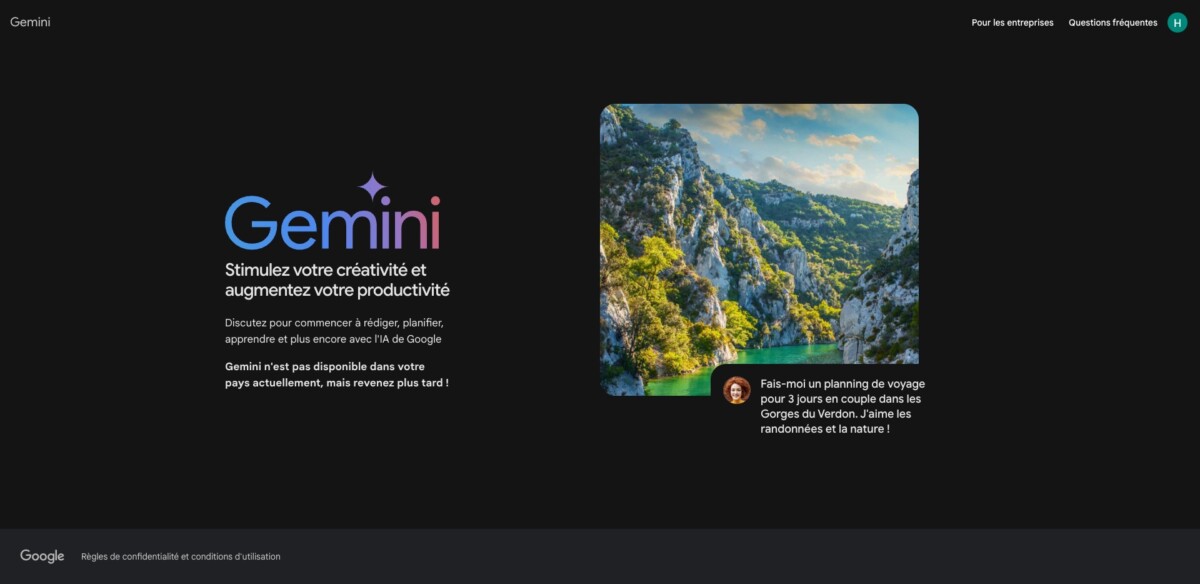

For example, the GDPR forced Google to delay the release of Bard, the first version of its AI chatbot, which gave way to Gemini last December. Google had not provided enough formal guarantees about data protection to the Irish Data Protection Commission, Ireland's equivalent of the CNIL in France. Moreover, it is still not possible to use Gemini instead of Google Assistant on a smartphone in France. At the same time, complaints against OpenAI have been filed with the CNIL.

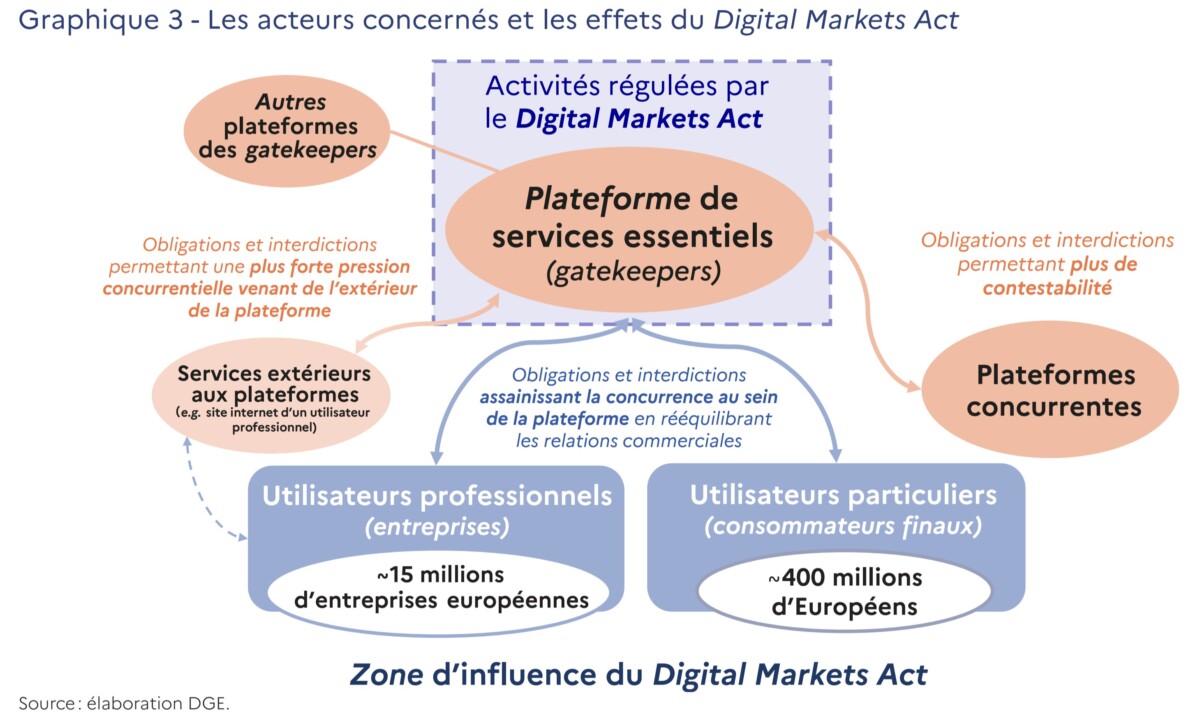

The Digital Markets Act

The Digital Markets Act also adds complexity to the deployment of generative AI tools in Europe. First of all, it marks the end of self-preferencing on platforms: Google, for example, can no longer favor its own services. That is something it could otherwise do with its Gemini chatbot, by using it to promote its other services.

Another concern this legislation may raise for generative AI tools is data portability. Since the arrival of the GDPR, we can ask any service for a copy of the data it holds on us. The DMA extends this right, giving Internet users better data portability. As a result, our conversation history with one chatbot might need to be importable into another chatbot.

Finally, there is the end of combined advertising profiling across several services. Copilot for Microsoft, Meta AI for Meta or Gemini for Google: these chatbots are ultimately meant to be profitable. For that, the recipe is the same as for social networks or search engines: the sale of our personal data. With the DMA, however, Gamam can no longer combine data from several services for advertising purposes without users' consent. One more obstacle, then.

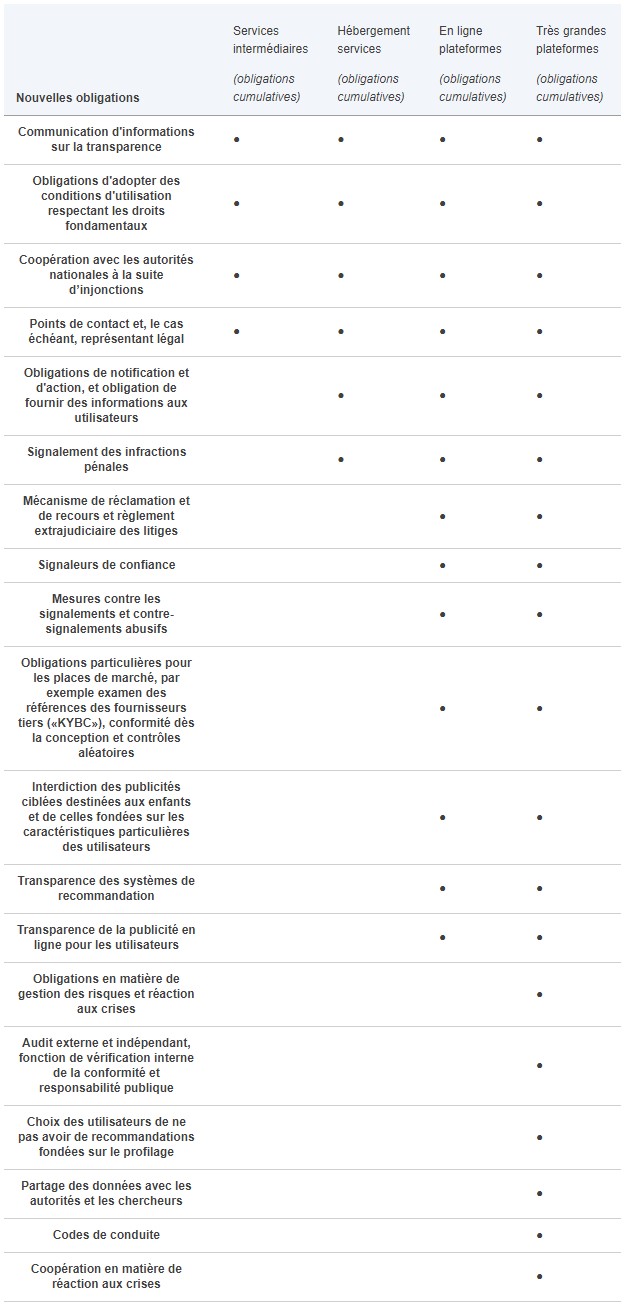

The Digital Services Act

Another European law that recently came into force is the Digital Services Act, or DSA. It requires platforms to moderate content more responsively: no illegal content may remain on the platforms. Moderation systems are required to process “complaints in a timely, non-discriminatory, diligent and non-arbitrary manner.”

Although the DSA mainly targets social networks, it could conceivably be extended to chatbots and image generators, which would then no longer be allowed to create misleading content, at the risk of heavy financial penalties.

The AI Act

The AI Act has not yet been ratified, but it was definitively adopted by the European Parliament last March. It is the first large-scale text on the subject in the world. The main concern is that generative AI must be submitted to the EU before being placed on the market, while also having to respect transparency rules. If these tools produce illegal content, the penalties could be heavy.

The AI Act should be published in the Official Journal before the end of the current legislature, i.e. by next July at the latest. It will then take effect 12 months after its publication for “general-purpose” systems.

Launching AI too quickly in Europe is very risky

The other concern is that chatbots and image generators remain quite experimental for the moment. As mentioned previously, they can produce illicit or even dangerous results, particularly when it comes to the protection of personal data (chatbots have already revealed other users’ data to users). Generative artificial intelligence is still full of errors, which makes launching in Europe very risky.

With these pieces of legislation, the European Commission, and more generally the European Union and Internet user protection associations, have grounds to take action against Gamam in the event of breaches. For several years now (notably with the GDPR), fines have been multiplying against services that we use every day. But what is increasing above all is the amount of these fines. Some reach hundreds of millions of euros, or even more than a billion euros. And with the Digital Markets Act, for example, this can go even further: up to 10% of a company’s global turnover. At that point we are no longer talking about hundreds of millions of euros, but about billions of dollars. Note, however, that these are theoretical amounts: for the moment, no sanction has been issued for a violation of the DMA.

How to comply with European laws to deploy artificial intelligence

The biggest obstacle is the GDPR. However, all is not lost for businesses and the users of their services. The CNIL recently published recommendations for developing generative AI that complies with this European law, which is celebrating its fifth anniversary, as well as with the recently adopted AI Act, which must still be validated before coming into force.

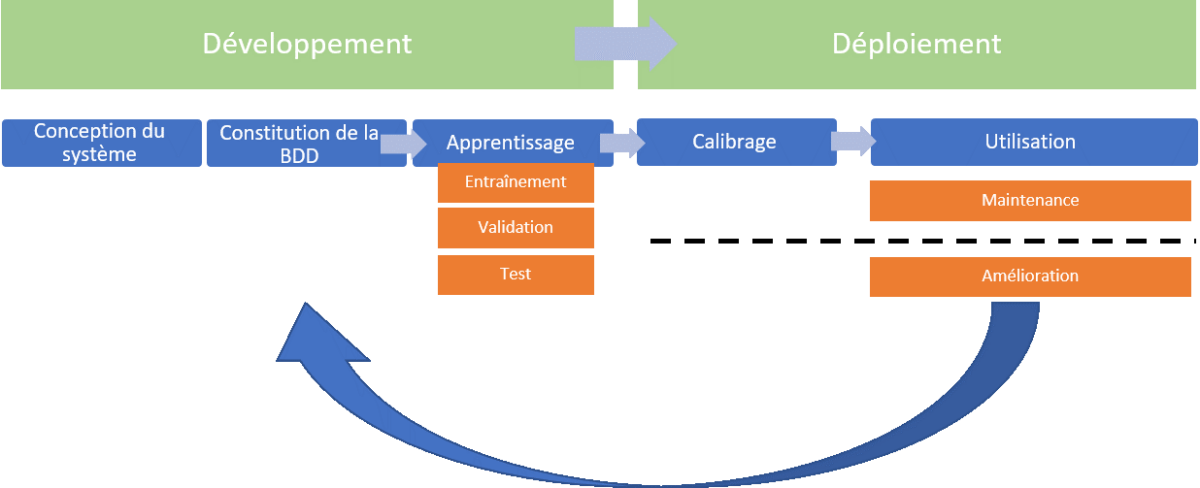

The CNIL sets out several steps in building an AI tool:

- Define the objective of the system;

- Determine the different responsibilities;

- Define the legal basis for being authorized to process personal data;

- Check whether the actor concerned can reuse certain personal data;

- Minimize the personal data used;

- Define a retention period;

- Carry out a data protection impact assessment.

However, the situation for Gamam seems to be improving: Gemini 1.5 Pro, the latest version of Google’s LLM, is available in France. OpenAI’s latest announcements do not exclude the European market either: GPT-4o is available on both sides of the Atlantic.
