Exascale refers to a new scale of supercomputing. At this level, machines can perform a billion billion operations per second. Yes, every second.

Exascale is the current culmination of a long evolution in computing power. A computer's performance is measured by the number of floating-point operations (additions and/or multiplications) it can perform per second, more precisely on real numbers encoded in 64 bits. This unit is written Flops/s.
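To make the idea of counting floating-point operations concrete, here is a minimal Python sketch. The helper names `dot_with_flop_count` and `seconds_needed` are hypothetical, chosen for illustration, and the sketch assumes the common convention of counting one multiplication and one addition per vector element; real benchmarks define their own, more detailed operation counts.

```python
import struct

# Python floats are IEEE 754 double-precision numbers: 8 bytes, i.e. 64 bits,
# the number format the Flops/s unit refers to.
BYTES_PER_FLOAT = struct.calcsize("d")


def dot_with_flop_count(x: list[float], y: list[float]) -> tuple[float, int]:
    """Return the dot product of x and y together with the flops it used.

    Hypothetical helper: each element costs one multiplication and one
    addition into the running sum, so n elements cost 2n flops.
    """
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    total = 0.0
    flops = 0
    for a, b in zip(x, y):
        total += a * b  # one multiplication, then one addition
        flops += 2
    return total, flops


def seconds_needed(flops: int, rate: float) -> float:
    """Hypothetical helper: time to execute `flops` operations at `rate` Flops/s."""
    return flops / rate


if __name__ == "__main__":
    result, flops = dot_with_flop_count([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
    print(BYTES_PER_FLOAT * 8, "bits per number")  # 64 on IEEE 754 platforms
    print(result, flops)  # 32.0 6
    # A billion-element dot product (2 * 10**9 flops) on an idealized
    # machine sustaining 10**18 Flops/s:
    print(seconds_needed(2 * 10**9, 1e18))
```

Real applications rarely sustain a machine's peak rate, so a time computed from the peak figure is an optimistic estimate.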

On our mobile phones or personal computers, whether to browse the Internet ever faster or to watch videos interactively, we count in billions of operations per second (giga). On the largest machines, the scale climbs in powers of a thousand: giga is the third power ($1000^3 = 10^9$), followed in order by tera, peta and exa. These prefixes of the International System of Units are deformations of the Greek tetra, penta and hexa, which makes them fairly easy to memorize. Exascale therefore corresponds to supercomputers whose computing capacity exceeds $10^{18}$ Flops/s.
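The powers-of-a-thousand arithmetic above can be checked with a short Python sketch. The table and the helper names `prefix_value` and `format_flops` are hypothetical, for illustration only; zetta, which comes up at the end of this article, is included as the step after exa.

```python
# SI prefixes mentioned in this article, as powers of 1,000.
PREFIX_POWERS = {
    "giga": 3,   # 1,000**3 = 10**9
    "tera": 4,   # 10**12
    "peta": 5,   # 10**15
    "exa": 6,    # 10**18
    "zetta": 7,  # 10**21
}


def prefix_value(prefix: str) -> int:
    """Exact integer multiplier for an SI prefix (hypothetical helper)."""
    return 1000 ** PREFIX_POWERS[prefix]


def format_flops(rate: float) -> str:
    """Express a rate in Flops/s with the largest prefix it reaches.

    Rates below one gigaFlops/s fall back to the giga prefix.
    """
    best = "giga"
    for name, power in sorted(PREFIX_POWERS.items(), key=lambda kv: kv[1]):
        if rate >= 1000 ** power:
            best = name
    return f"{rate / prefix_value(best):.3g} {best}Flops/s"


if __name__ == "__main__":
    for name in PREFIX_POWERS:
        print(name, prefix_value(name))
    print(format_flops(1.1e18))  # 1.1 exaFlops/s
```

Keeping the multipliers as exact integers avoids rounding in the powers themselves; only the final division in `format_flops` uses floating point.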

High-performance computing (HPC) aims to execute scientific applications such as nuclear simulation or meteorology as quickly as possible. Computing needs beyond human capabilities emerged during World War II and have been increasing ever since, with the massive digitalization of society and globalization.

Continuous evolution since the 1960s

The history of high-performance computing has followed major technological milestones, from the invention of integrated circuits in the early 1960s to today's 5-nanometer technologies. Vector processors appeared in the 1970s; they were built around a reduced instruction set that operated efficiently on large collections of homogeneous data. From the 1980s onward, parallelism developed, most notably with massively parallel multiprocessors: large numbers of computing units linked by sophisticated interconnection networks.

Around the mid-1990s, another type of supercomputer appeared, based on the idea of assembling general-purpose, off-the-shelf hardware. Taken to the extreme, this gave rise to peer-to-peer computing, in which anyone could contribute their own Internet-connected machine to an ambitious scientific experiment such as protein folding. The notion of an accelerator (a specialized coprocessor that is more efficient on certain types of operations) appeared around 2000 and became crucial with GPUs (graphics processors), which drove the development of deep learning in 2013.

Today, all recent consumer processors include several dedicated units. GPUs have evolved: their massive parallelism makes them very efficient at matrix and tensor computations, as are units specialized for machine learning such as Google's TPUs.

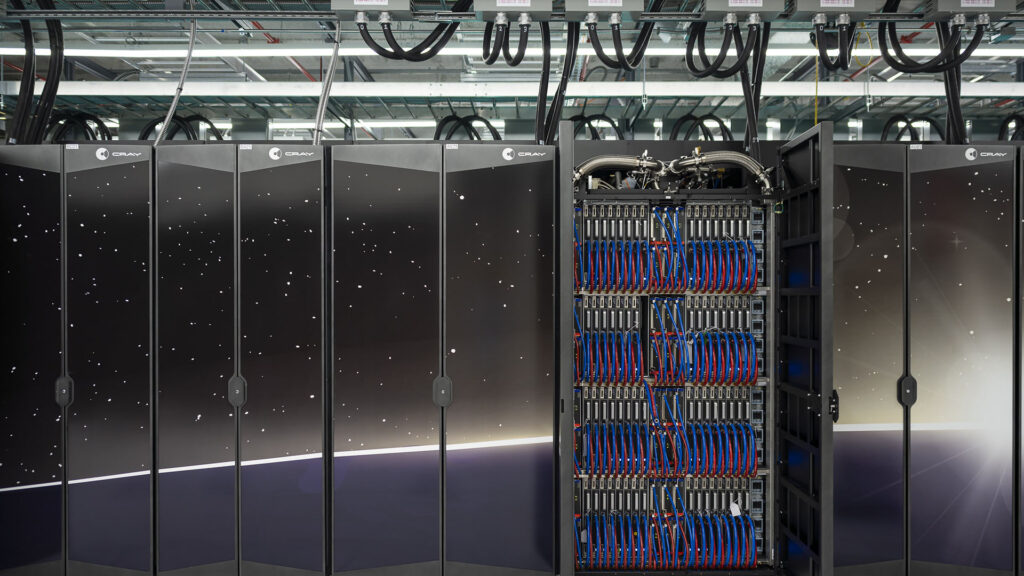

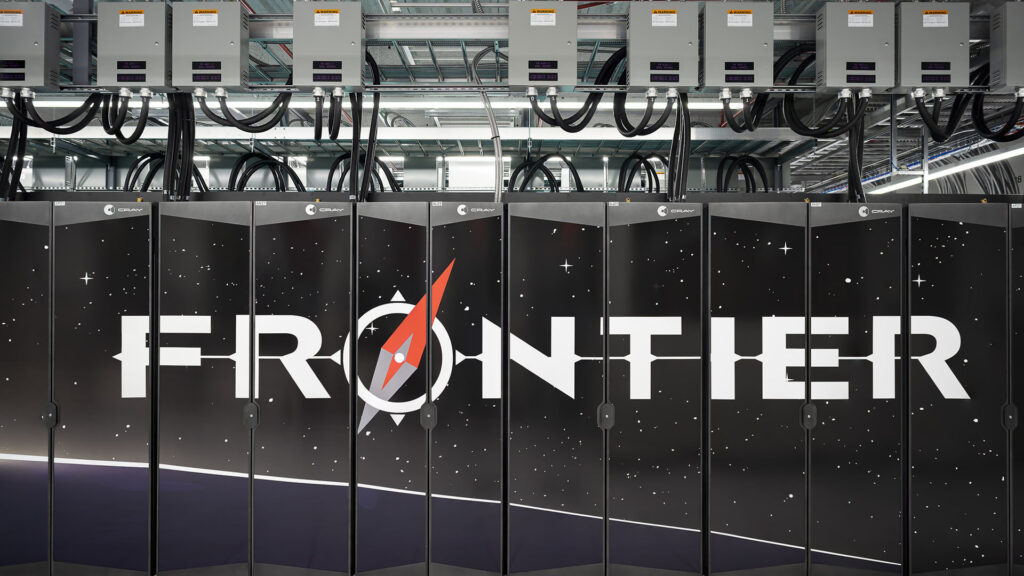

The exascale objective was reached in 2022, when the Frontier supercomputer entered the Top500 in first place. The Top500 ranks the 500 most powerful systems in the world, using classic linear algebra benchmarks that each supercomputer is carefully tuned to run. Not all of the most powerful HPC systems appear in the Top500: large companies and the military operate HPC systems that are not listed.

As with the space race, ranking near the top of the Top500 is clearly a geopolitical issue, one led by the United States, as a way to assert technological supremacy and sovereignty. France and Europe have programs to build their own exascale systems.

The power consumption of large-scale HPC platforms has always been a concern, but it is becoming crucial today in the fight against the carbon emissions driving the climate crisis. Some believe that the race for performance can help solve current ecological challenges, but a growing number of voices warn of a headlong rush that fuels uncontrolled acceleration and advocate slowing down, especially since very few applications truly require that much power. Amid the debate between these two opposing positions, politicians and technophiles are already considering the next step: zettascale (the seventh power of 1,000, the unit just after exascale, i.e. 1,000,000,000,000,000,000,000 Flops/s).

Denis Trystram, University Professor of Computer Science, Grenoble Alpes University (UGA)

This article is republished from The Conversation under a Creative Commons license. Read the original article.
