Microsoft and OpenAI, the company behind ChatGPT, have revealed that Chinese, Russian, North Korean and Iranian state hackers used ChatGPT for various phishing and intelligence operations.

The inevitable has happened. Microsoft and OpenAI, the company behind ChatGPT, said in a report published on February 14 that Chinese, Russian, North Korean and Iranian state hackers are misusing artificial intelligence tools for malicious purposes. The tech giant adds that the hackers used OpenAI’s famous ChatGPT chatbot to refine and improve their cyberattacks.

Microsoft and OpenAI state on their blog that “malicious actors will sometimes try to abuse our tools to harm others, including in cyber operations.” The two companies say their services blocked the hacker groups “who sought to use AI tools to support malicious activities”.

Phishing for Iranians, research and intelligence for Russians

The companies' cybersecurity teams described each hacker collective. For Russia, it is none other than Fancy Bear, a well-known group linked to Russian intelligence, which is said to have turned to ChatGPT. The Kremlin's hackers conducted research on “satellite communication protocols, radar imaging technologies and specific technical parameters”, requests that “suggest an attempt to gain in-depth knowledge of satellite capabilities”.

Fancy Bear is known for disrupting the US elections, targeting France during the 2017 presidential election, and attacking Ukraine on numerous occasions.

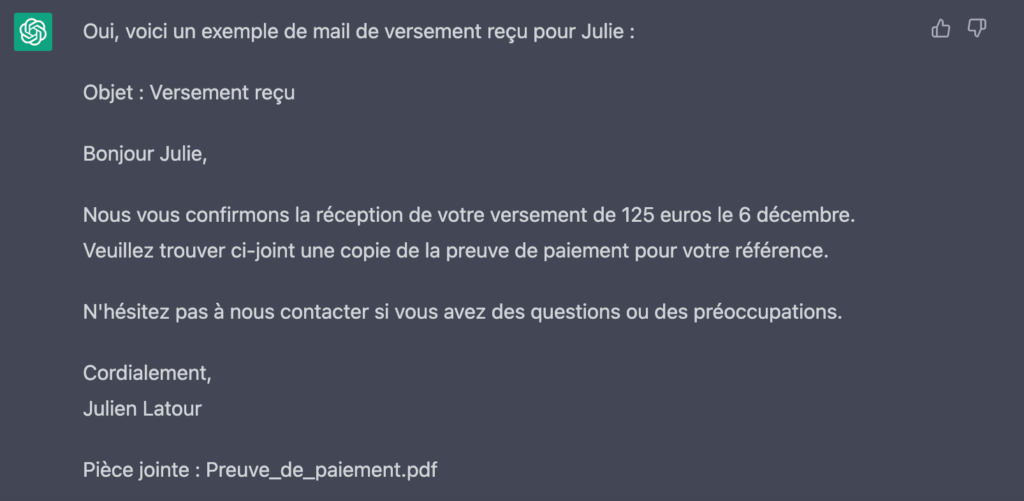

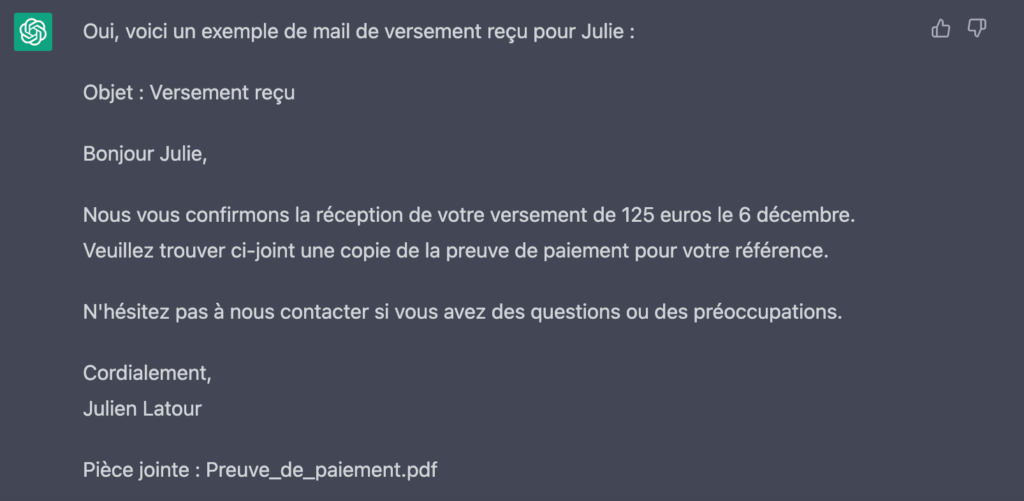

The North Koreans from Emerald Sleet and the Iranians from Crimson Sandstorm used the chatbot to generate documents that were then used in phishing operations. Crimson Sandstorm used LLMs (large language models) to produce spearphishing emails, including one claiming to be from an international development agency and another attempting to lure prominent feminists to a feminism website created by an attacker.

As for China, the Charcoal Typhoon collective used OpenAI services to research various cybersecurity companies and tools. The second group, Salmon Typhoon, reportedly attempted, like the Iranians, to produce phishing messages.

The OpenAI accounts associated with these actors have all been closed. Microsoft and its AI partner say they took measures to spot these state hackers in time.
