This is not the first time a deepfake impersonating a company executive has been used to steal millions from a business. The most recent case, reported by the Hong Kong Free Press, involved a fraud of almost 24 million euros (200 million Hong Kong dollars).

Although this type of attack remains rare for now, unlike CEO fraud by e-mail or business email compromise (BEC) attacks, the principle is essentially the same. An employee, ideally someone in the finance department, receives an instruction from company management to transfer a sum of money to an account confidentially. The stated reason does not matter: it could be finalizing a transaction, acquiring a business or, on a smaller scale, buying gifts for staff.

What all these scenarios have in common is that the transaction must be completed quickly and no one else in the company may be told about it. Who would refuse to obey their boss when given such an order during a video conference? That is the heart of the current debate.

Deepfake: trust called into question

“CEO fraud” falls into the category of “breach of trust” or “confidence” scams, that is, frauds based on manipulating the victim.

To work, the story and the overall context must be consistent. Often, a simple e-mail sent from a management account (or one that appears to be) is enough to convince the victim. In the Hong Kong case, a highly convincing deepfake video was reportedly used, and no real interaction with the victim ever took place. The ruse exploits the existing chain of authority within a company and thrives in its hierarchical environment.

It is difficult to refuse a superior who insists: “Look, I’m in a hurry.” This pressure ultimately proves crucial to the method’s success.

The state of the art in deepfakes

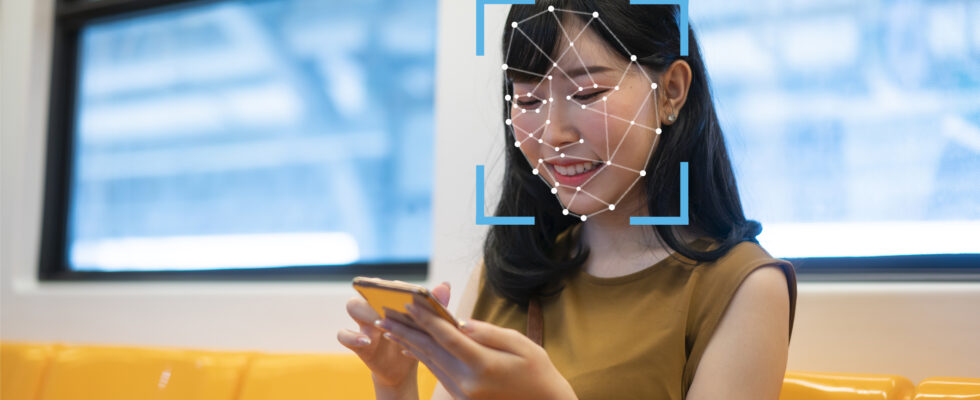

Deepfakes are images, videos and/or audio recordings in which a person’s face or voice has been digitally synthesized or manipulated using artificial intelligence (AI). In a video or photo, one person’s facial features can be superimposed on another’s. You have probably seen the fake images of Taylor Swift that circulated online, or the digitally de-aged Harrison Ford playing Indiana Jones in a film set during the Second World War. The results are so convincing that you can make the “deepfaked” person say practically anything, as long as image and voice are perfectly synchronized. This requires a substantial amount of source material of the person, such as interviews or speeches, along with photos and/or audio recordings.

Making an artificially generated person appear live in a meeting is a different matter. Generating an image and voice in real time, in a format that demands immediate responses, is theoretically possible, but the result is not particularly convincing: pauses are longer, facial expressions and tone don’t match the words, and the whole thing feels off.

What was even more striking in this case is that several participants in the videoconference were deepfakes, and only the employee who made the bank transfer was real. The videoconference was in fact pre-recorded and involved no dialogue or interaction with the victim, only exchanges between the other participants, who were also deepfakes.

How to protect yourself?

To counter this type of scam, a company must structure its internal payment processes so that a payment cannot be authorized on the simple request or instruction of a given individual, but must go through a more rigorous approval process. Double-checking remains an excellent way to avoid falling into the trap. Confirming any request received by phone or e-mail should become a reflex, so as not to be duped and end up among the victims of future attacks.
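For teams that automate payment workflows, the approval rule described above can be sketched in code. The following is a minimal, hypothetical illustration, not a standard or any vendor’s API: it assumes a policy where a payment needs at least two approvers other than the person who requested it, and where large amounts additionally require confirmation through a separate, known channel (for example, calling the requester back on a number already on file). The names `PaymentRequest`, `approve`, `can_execute`, `MIN_APPROVERS` and `SENSITIVE_AMOUNT`, and the threshold values, are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field

# Hypothetical policy values; each company would set its own.
MIN_APPROVERS = 2
SENSITIVE_AMOUNT = 10_000.0


@dataclass
class PaymentRequest:
    requester: str            # who asked for the transfer (e.g. "the boss")
    amount: float
    beneficiary: str          # destination account (illustrative string)
    approvals: set = field(default_factory=set)  # distinct approvers only
    callback_verified: bool = False  # confirmed via a separate, known channel


def approve(req: PaymentRequest, approver: str) -> None:
    """Record an approval; the requester may never approve their own request."""
    if approver == req.requester:
        raise ValueError("requester cannot approve their own payment")
    req.approvals.add(approver)  # a set, so approving twice counts once


def can_execute(req: PaymentRequest) -> bool:
    """Return True only if the request satisfies the illustrative policy."""
    if len(req.approvals) < MIN_APPROVERS:
        return False
    # Large transfers also need an out-of-band confirmation.
    if req.amount >= SENSITIVE_AMOUNT and not req.callback_verified:
        return False
    return True


if __name__ == "__main__":
    req = PaymentRequest(requester="ceo", amount=50_000.0, beneficiary="acct-123")
    approve(req, "finance_clerk")
    approve(req, "treasurer")
    print(can_execute(req))   # False: no callback confirmation yet
    req.callback_verified = True
    print(can_execute(req))   # True
```

The design point is that no single instruction, however authoritative it looks or sounds on a video call, is enough on its own: the transfer stays blocked until independent people have signed off and the request has been confirmed outside the channel it arrived through.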

Deepfake attacks also teach us some additional lessons. In the recent Hong Kong case, there was video and audio “confirmation”, but no actual interaction. The employee only watched and listened to a message from the person he believed was his boss. This staged scenario was enough to lure the employee into completing the transfer.

To keep such attacks from recurring and succeeding, employees must feel comfortable questioning their superiors about both their identity and the legitimacy of the task they are assigned: a simple yet effective way to make the attackers’ job harder. So don’t hesitate to ask “who’s the boss?”!