Artificial intelligence (AI) is increasingly permeating our lives. Yet there are still no ethical or legally binding rules for the technology. The ethicist Peter G. Kirchschläger says this must change urgently, because AI endangers our rights to data protection, privacy and political participation.

SRF: Elon Musk has rated the potential danger of AI as higher than that of the atomic bomb. How do you see it?

Peter G. Kirchschläger: The ethical risks are enormous. Our right to political participation is being massively undermined, because we are manipulated into voting the way the AI wants. Our data is stolen every day and sold to third parties. Under current law, neither of these things should be happening.

If you and I don’t follow the traffic rules, we get a fine. But I can put an AI on the market that violates human rights and nothing happens, apart from the fact that I can make a lot of money.

Why is regulation so slow in coming?

Partly because no one wants to block positive developments. But above all, it has to do with economic interests. There is massive lobbying, and the technology companies are very good at ensuring that there is no regulation. That is why we need an independent agency for data-based systems, responsible for approval and supervision.

You prefer to talk about data-based systems rather than artificial intelligence. Why is that?

In some areas of intelligence, so-called AI is far ahead of us, for example in processing large amounts of data. We have to be careful not to lose control. Other areas, however, are inaccessible to it, such as emotional and social intelligence. I can teach a care robot to cry when a patient cries, or just as easily to slap them. It carries out the order, but it cannot morally distinguish bad from good.

We therefore have to look closely: what can data-based systems do, and what can’t they do? We have moral capability, the potential to think differently and to decide freely. Machines can’t do that. They are not free; they are based on data.

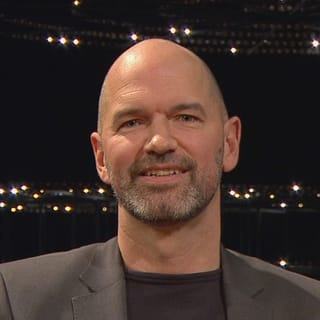

“We have to wake up,” demands the ethicist with regard to our handling of data.

Getty Images/IndiaPix/IndiaPicture

Where do you see a need for action?

We have to start with the data. Sometimes we seem to believe that these systems fell from the sky, as if they were a force of nature. But the future of AI is not a fate that we simply have to endure. Nor are data-based systems neutral: they are distorted by the selection of their training data and algorithms. This puts certain people at a serious disadvantage, for example when they are looking for a job or dealing with law enforcement.

Technology companies would say these are teething problems and that the systems will soon become fairer.

That’s not true. The human rights violations are not an unwanted side effect; they are at the core of the business model, which aims to keep people on social platforms for as long as possible in order to resell as much data as possible. We have to wake up.

Many say it is already too late to turn back the clock. What gives you hope?

A look at history: humanity has achieved something similar before, for example with the nuclear regulatory authority. And fortunately, there is a growing consensus among UN member states that regulation is needed. But it’s true that we don’t have much time.

The interview was conducted by Wolfram Eilenberger.