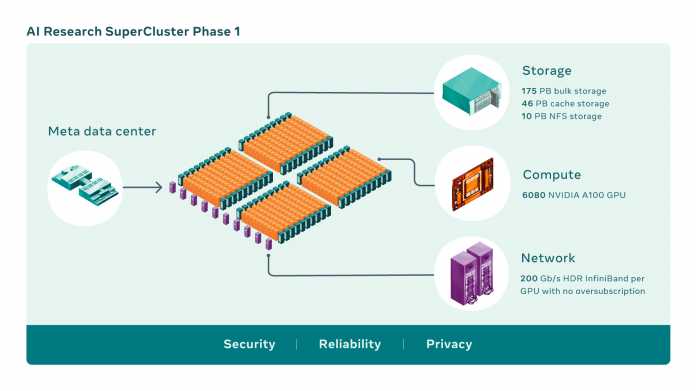

Facebook's parent company Meta has been building a new data center since 2020 for its AI research computer, the AI Research SuperCluster (RSC), which initially delivers 1.895 exaflops of floating-point computing power in Nvidia's TensorFloat-32 (TF32) format.

The RSC essentially consists of 760 Nvidia DGX A100 systems, each with eight A100 accelerators, for a total of 6080 A100 modules. Meta plans to expand the RSC to around 16,000 A100 accelerators over the course of 2022. The system should then deliver around 5 exaflops of AI computing power in mixed-precision operation.
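The figures above can be cross-checked with a little arithmetic. The following is a minimal sketch that uses only the numbers quoted in this article; the function name and the idea of dividing the aggregate throughput evenly across accelerators are illustrative assumptions, not a method published by Meta or Nvidia.

```python
# Figures quoted in the article.
DGX_SYSTEMS = 760
ACCELERATORS_PER_SYSTEM = 8
INITIAL_EXAFLOPS = 1.895
PLANNED_ACCELERATORS = 16_000
PLANNED_EXAFLOPS = 5.0


def implied_teraflops_per_accelerator(exaflops: float, accelerators: int) -> float:
    """Divide an aggregate throughput evenly across accelerators (hypothetical helper).

    1 exaflop equals 1,000,000 teraflops.
    """
    return exaflops * 1_000_000 / accelerators


initial_accelerators = DGX_SYSTEMS * ACCELERATORS_PER_SYSTEM
initial_per_gpu = implied_teraflops_per_accelerator(INITIAL_EXAFLOPS, initial_accelerators)
planned_per_gpu = implied_teraflops_per_accelerator(PLANNED_EXAFLOPS, PLANNED_ACCELERATORS)

print(f"Initial accelerators: {initial_accelerators}")
print(f"Implied throughput, initial build: {initial_per_gpu:.1f} TFLOPS per accelerator")
print(f"Implied throughput, planned build: {planned_per_gpu:.1f} TFLOPS per accelerator")
```

Both totals work out to roughly 312 teraflops per accelerator, which suggests that the two headline figures rest on the same per-chip peak rating and that the planned increase comes from adding accelerators rather than from faster ones.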

Meta is buying flash storage systems with a total capacity of 175 petabytes from Pure Storage. Penguin Computing contributes a cache system (Altus) with 46 PB, and a Pure Storage FlashBlade holds another 10 PB.

Structure of the Meta AI Research SuperCluster (RSC)

(Image: Meta)

Nvidia technology is also used for networking, namely InfiniBand at 200 Gbit/s. In the Nvidia systems, the A100 accelerators are attached directly to the network rather than indirectly via the respective (AMD Epyc) host processors.

It is notable that Meta does not rely on hardware built to the specifications of the Open Compute Project (OCP), which Facebook itself launched in 2011. Instead, the system essentially uses proprietary technology from Nvidia.

Huge AI models

Meta wants to use the RSC primarily to train even larger AI models. As the company explains in a blog post, the system is meant to handle models with up to 1 trillion parameters and data sets of up to 1 exabyte. One of several goals Meta names is detecting dangerous social media posts in real time. To do this, the AI models would have to be able to evaluate many more languages, for example.
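To give a sense of scale for a model with 1 trillion parameters, the rough sketch below estimates how much memory the weights alone would occupy. The number formats (2 bytes per parameter for FP16, 4 bytes for FP32) are assumptions for illustration; Meta does not state which formats it uses, and the estimate ignores gradients, optimizer state and activations, which add considerably more during training.

```python
# Parameter count quoted in the article.
PARAMETERS = 1_000_000_000_000  # 1 trillion

# Assumed storage formats (not stated by Meta): bytes needed per parameter.
BYTES_PER_PARAMETER = {"FP16": 2, "FP32": 4}


def weight_terabytes(parameters: int, bytes_per_parameter: int) -> float:
    """Memory needed for the weights alone, in terabytes (10**12 bytes)."""
    return parameters * bytes_per_parameter / 1e12


for format_name, size in BYTES_PER_PARAMETER.items():
    terabytes = weight_terabytes(PARAMETERS, size)
    print(f"{format_name}: {terabytes:.0f} TB for the weights alone")
```

Under these assumptions, the weights alone would take 2 TB in FP16 or 4 TB in FP32, far more than a single accelerator can hold, which is why models of this size have to be distributed across many accelerators.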

(ciw)