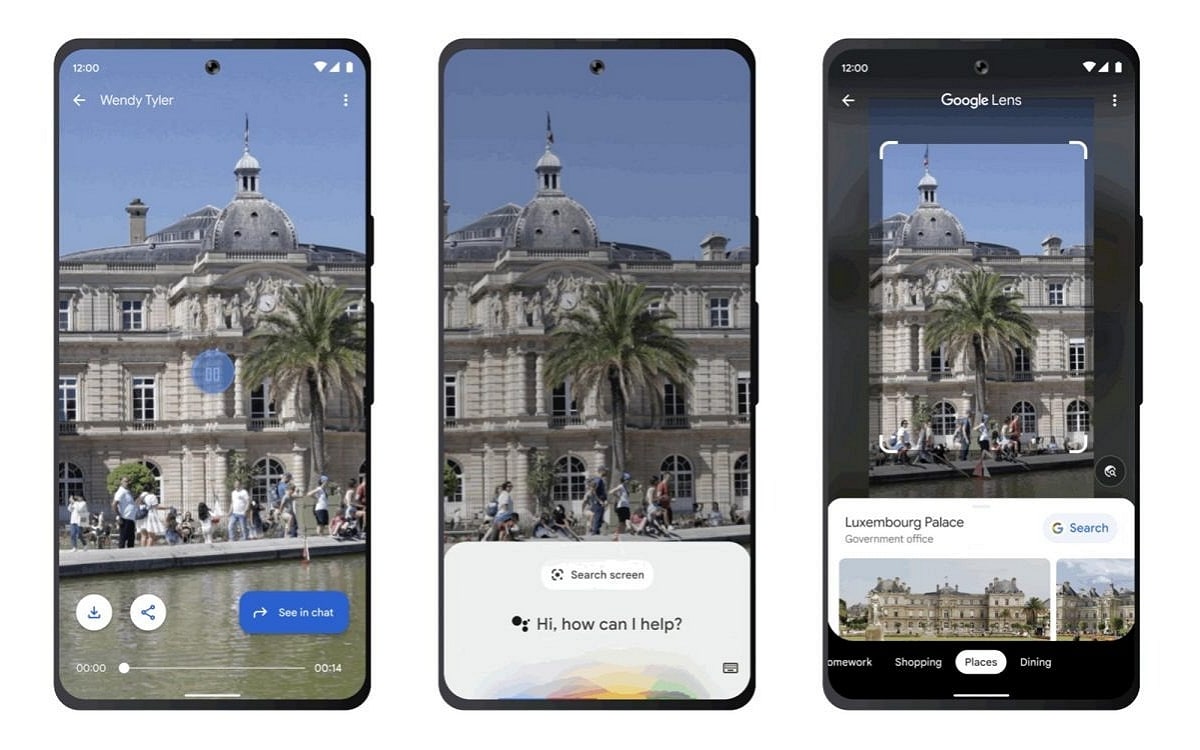

Google announced that Assistant would get a Lens-powered “Search screen” feature that effectively replaces the “What’s on my screen” feature, and it has just appeared on Pixel phones.

Google has started testing a Google Lens feature that it announced earlier this year. The feature, called “Screen Search”, has started appearing on Android devices, especially Pixel devices running version 14.31 of the Google app beta.

According to a report from 9to5Google, Pixel users who open Google Assistant on their device now see a “Search on Screen” button instead of the usual “Lens” option. Tapping this button sends whatever is on the screen to Google Lens for a web search.


Google will make it easier to find the content displayed on your screen

With the new “Search screen” button, finding information about something displayed on your screen is easier than before. Previously, you had to take a screenshot and then open it in Lens as a separate search step, but now you just press the new button.

The “Search on Screen” option is useful not only for finding new clothes or items, but also for navigating Google Maps, likely due to its close relationship with Lens. You can even analyze moles in your photos. The feature supports photos, videos, and more, making it versatile for various search scenarios.

The “Search on Screen” feature is part of Google’s visual search AI development and was first announced in February. Google said it would roll out to all Android devices in the coming months. For now, however, it appears to be limited to Pixel devices enrolled in the latest Google app beta. This could indicate that a release to a wider audience is imminent.

Source: 9to5Google