Generative AI is gradually becoming more accessible. But for the moment, these tools remain the preserve of private companies and run on remote servers. What if we could run something like ChatGPT on our own computers? Developer Simon Willison did just that by running LLaMA, Meta’s language model, on his laptop.

The trend is undeniably towards AI that generates text automatically: ChatGPT, the new Bing, Snapchat’s My AI, the You search engine and soon Google Bard. For now, all of them run on servers in the cloud. But within a few years, as the software is optimized and our machines become more capable, we could run them smoothly on our own computers. This is already the case, not with the most famous language model, GPT-3, but with Meta’s LLaMA. Because the model leaked, some developers have managed to run LLaMA on their own computers.

The leak of LLaMA, Meta’s language model

According to open source developer Simon Willison, this is what changed everything: the release of the source code for LLaMA, the language model Meta unveiled a few weeks ago. The ambition is considerable: its largest version uses 65 billion parameters to generate text. Moreover, Meta claims that its model outperforms GPT-3 on most benchmarks.

Where Meta wanted to stand out from its competitors was in the openness of its model. It was made available to researchers, provided they accepted certain strict conditions, such as using it only for research and not for commercial purposes. But as Simon Willison writes, a few days after its publication, LLaMA’s source code appeared on the Internet along with all the model files. It is now too late for Meta: its baby is out in the wild and can be used locally.

Running a text generation tool like ChatGPT on your computer: it’s possible

So Simon Willison ran the program on his own MacBook and could not hide his emotion: “As my laptop started spitting text at me, I really had a feeling the world was about to change, once again.” He had thought it would still take years before he could run a GPT-3 class model on his own hardware. He was wrong: “that future is already here.”

According to the developer, “our priority should be to find the most constructive ways to use it,” speaking of AI. He adds that “assuming Facebook doesn’t relax the licensing terms, LLaMA will likely be more of a proof of concept that local language models are usable on consumer hardware than a new base model for people to use in the future.” At any rate, “the race is on to release the first fully open language model that provides users with ChatGPT-like capabilities on their own devices.”

In his blog post, the developer explains how he pulled it off. Simon Willison points to the llama.cpp project by Georgi Gerganov, another open source developer, based in Sofia, Bulgaria. Gerganov published llama.cpp on GitHub in order to “run the model using 4-bit quantization on a MacBook.” Simon Willison clarifies that “4-bit quantization is a technique for reducing the size of models so that they can run on less powerful hardware. It also reduces the size of models on disk: 4 GB for the 7B model and just under 8 GB for the 13B model.” The “B” here refers to the number of parameters used by LLaMA, expressed in billions.
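To make the idea of 4-bit quantization more concrete, here is a minimal Python sketch. It is not the llama.cpp implementation: the function names, the use of one shared scale per block of weights and the rounding scheme are all assumptions chosen for illustration. It shows how floating-point weights can be stored as small integers between -8 and 7, and how much space the raw 4-bit values alone would take.

```python
def quantize_block(weights):
    """Map a block of floats to 4-bit signed codes (-8..7) sharing one scale.

    Hypothetical helper for illustration; real formats differ in detail.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp to the 4-bit range.
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate float weights from the 4-bit codes."""
    return [scale * c for c in codes]


def estimated_size_gb(n_params, bits_per_weight):
    """Size of the raw weights only, assuming 1 GB = 1e9 bytes."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    scale, codes = quantize_block([0.7, -0.3, 0.0, 0.12])
    print(codes)                           # four integers in the range -8..7
    print(dequantize_block(scale, codes))  # close to the original weights
    print(estimated_size_gb(7e9, 4))       # 3.5
    print(estimated_size_gb(13e9, 4))      # 6.5
```

The raw figures (3.5 GB and 6.5 GB) come out a little below the sizes quoted above. A plausible explanation is that a quantized file also has to store the scales and other data alongside the 4-bit codes, but the exact overhead depends on the file format.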

The dangers of open source or locally running language models

However, while this “release” is great news for the open source world and for loosening the grip that a few companies have on these language models, it carries some dangers. Simon Willison even listed ways this technology could be used to cause harm:

- Generation of spam

- Automated romance scams

- Trolling and hate speech

- Fake news and disinformation

- Radicalization due to a bubble effect

Moreover, these many “ChatGPTs” can still make up things that are wrong just as easily as they can write factually correct sentences. This is also where the strength of the “GAMAM” lies: by providing access to their language models through virtual machines, an API or conversational tools, they can add layers of moderation. Through fine-tuning, which they must constantly improve, they can control how users interact with these artificial intelligences. Run these models locally, and those layers of control can be lost.

Only big digital companies control language models

Finally, there are few language models: among the most important are GPT-4 from OpenAI, LaMDA from Google and LLaMA from Meta. While Microsoft doesn’t fully control OpenAI, it has invested around $10 billion in the company. Announced recently, GPT-4 is a major new version of GPT that is said to take many more parameters into account, for even better performance and increased accuracy. Although the term “GAMAM” may seem simplistic, it is indeed these few private companies that control the most popular language models. The potential dangers of this control are great: these models are black boxes about which we know very little.

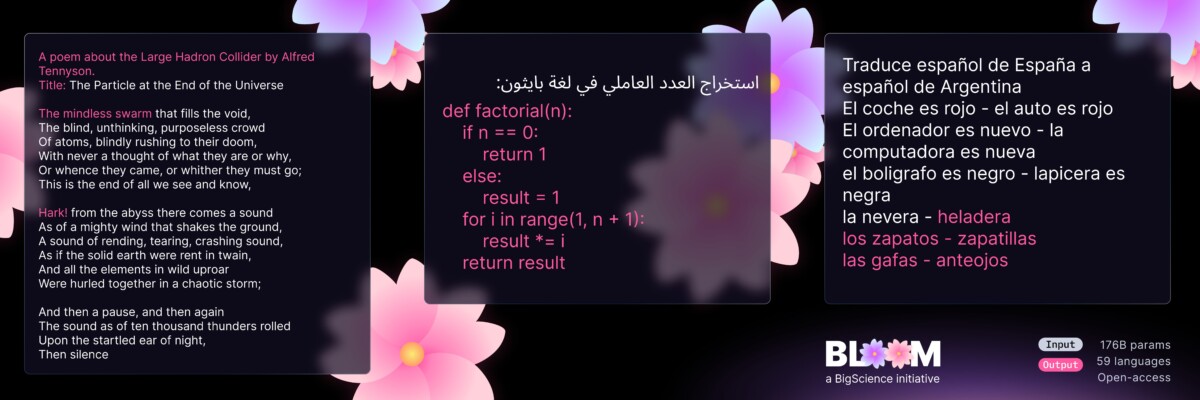

Other initiatives exist, even in France. Here, Hugging Face and its Bloom model are leading the way. As Le Figaro reports, the company was founded by three French people and is valued at $2 billion. Bloom is a language model designed as open source and in collaboration with the CNRS: it was “trained for one hundred and seventeen days on the French public supercomputer Jean Zay, and already downloaded for free by more than 65,000 professionals.” The ambition is clear: Hugging Face dreams of a Franco-European Bloom that will compete with ChatGPT. If this type of AI is still reserved for large companies, it is also because developing it requires significant funding.

Running generative AIs is expensive

This week, Microsoft presented new virtual machines in Azure, its cloud solution, that are expressly designed for AI. To make it all work, Microsoft relies on Nvidia H100 GPUs. This infrastructure, valued at hundreds of millions of dollars, ultimately makes Nvidia the big winner of a race in which all the giants are engaged. Moreover, this race could lead to a new shortage of graphics cards in the coming months, according to some forecasts.

As Simon Willison writes in his article, text generation models are even bigger than image generation models such as Stable Diffusion, Midjourney or DALL-E 2. For him, “even if you could get the GPT-3 model, you wouldn’t be able to run it on commodity hardware; these models typically require multiple A100-class GPUs, each of which retails for over $8,000.”

In addition to a lot of computing power, it also takes skilled teams to create these models. According to Simon Willison, although dozens of models exist, none manages to tick all of the following boxes:
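A rough back-of-the-envelope calculation shows why several GPUs are needed. The sketch below rests on figures that do not come from this article: the 175 billion parameters published for GPT-3, 2 bytes per parameter (16-bit weights), and A100 cards with 40 GB or 80 GB of memory. It counts the weights only and ignores everything else a running model needs, so it should be read as a lower bound, not a deployment guide.

```python
import math


def gpus_needed(n_params, bytes_per_param, gpu_memory_gb):
    """Minimum number of GPUs required just to hold the weights.

    Hypothetical helper for illustration; assumes 1 GB = 1e9 bytes.
    """
    weights_gb = n_params * bytes_per_param / 1e9
    return math.ceil(weights_gb / gpu_memory_gb)


if __name__ == "__main__":
    # Assumed figures: 175e9 parameters stored as 16-bit (2-byte) values.
    print(gpus_needed(175e9, 2, 80))  # 5
    print(gpus_needed(175e9, 2, 40))  # 9
```

Under these assumptions, the weights alone take up 350 GB, far beyond the memory of any single consumer graphics card.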

- Easy to run on your own hardware

- Powerful enough to be useful (equivalent to the performance of GPT-3 or better)

- Open enough to be modified.
