Interested in AI and want to generate text or images yourself, without paying for a service? It is (fairly) simple if you have an Nvidia GeForce RTX 30- or 40-series card. This article explains how to do it.

If the companies behind ChatGPT, Midjourney and Google Gemini rely on Nvidia graphics cards in their servers to run their generative AI, why couldn’t you do the same? In fact, it’s entirely possible. For several months, Nvidia has been offering individuals free tools to run LLMs (large language models) locally, as well as extensions that make Stable Diffusion perform better on a local machine. As long as you don’t mind getting your hands dirty and have a graphics card with substantial VRAM, installing these tools is relatively simple.

ChatRTX: a generative text AI to analyze your own documents

Available since February 2024, ChatRTX promises to create a ChatGPT clone based solely on your computer’s power and data. To run it, the necessary configuration is as follows:

With these prerequisites met, installing and using this LLM interface is very simple: an executable file handles everything automatically. It may not seem like much, but having all this configuration work carried out in the background is already a small feat in itself.

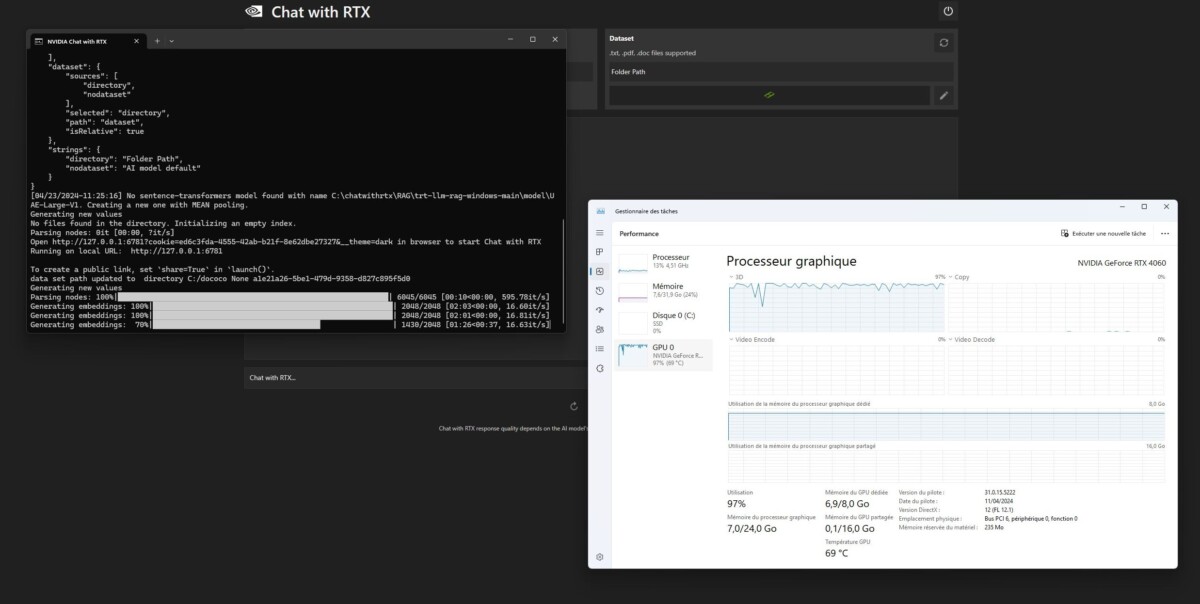

Launching ChatRTX for the first time can be confusing. A window full of command lines appears and runs an obscure-looking program. This window, called the “Shell”, is the heart of the program. You have absolutely nothing to do or type in it; it simply lets you watch the inference process running in the background.

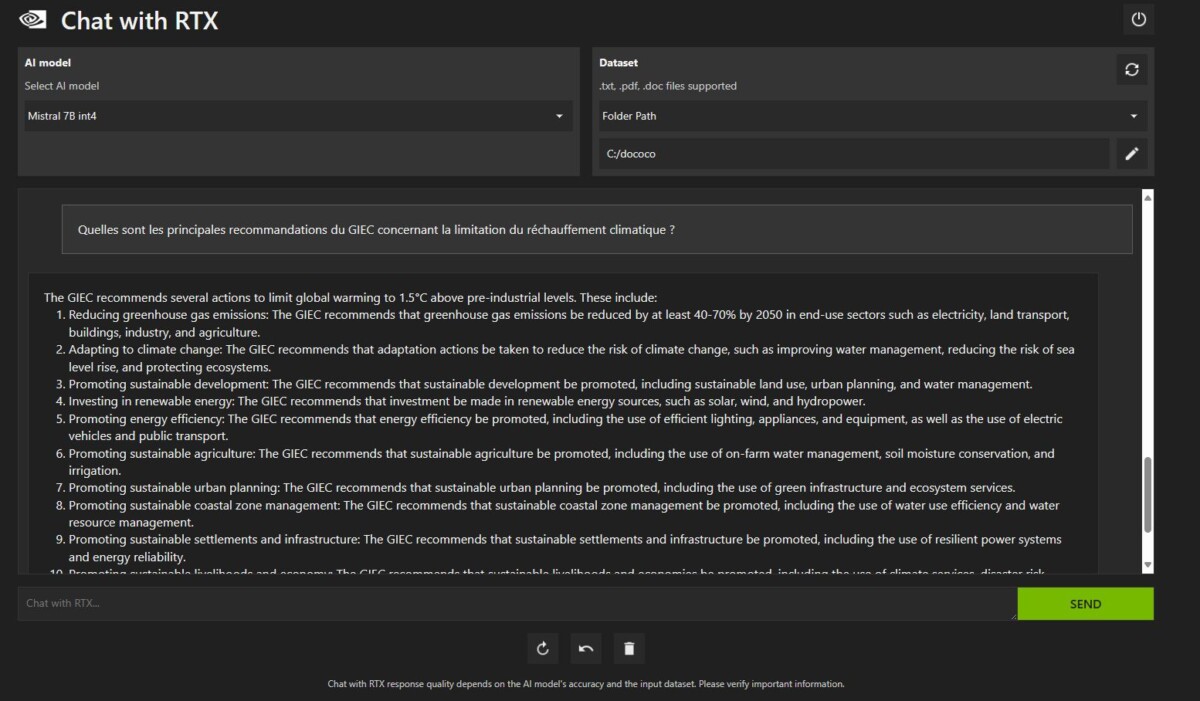

You interact with the AI itself through a web browser (a Web UI), hosted on a local IP address on your computer and displayed in a simple interface. This interface is responsible for passing the user’s requests on to the Shell window.

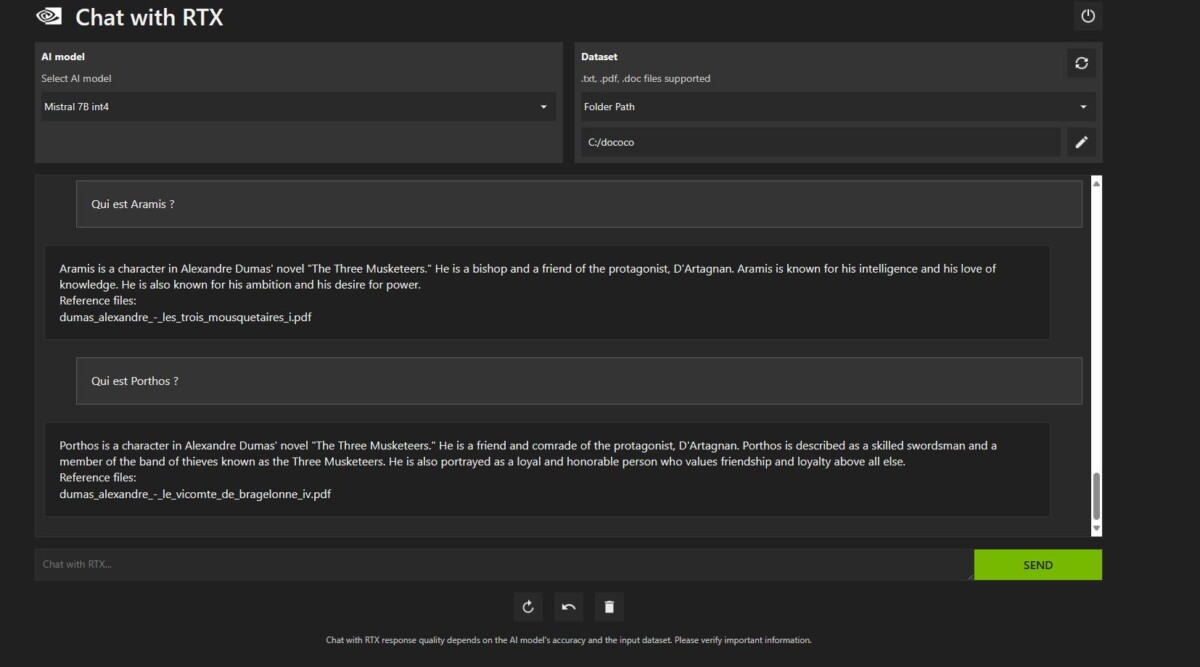

The main distinguishing feature of ChatRTX is that it does not need an Internet connection to work. It is up to the user to provide it with a knowledge base, in this case files on your PC that you agree to give it access to. Currently, the AI can only scan text and PDF files. This can be very useful for finding answers if you have, for example, large PDF documents to look through or a library of ebooks to summarize.

This video (in English) shows very clearly what ChatRTX is capable of

Cutting-edge technologies to run LLMs locally

To achieve this result locally, Nvidia relied mainly on three technologies: retrieval-augmented generation (RAG), its open source TensorRT-LLM library and, to power everything, chips based on the Ampere architecture (GeForce RTX 30 series) and newer. RAG is responsible for connecting an LLM to data present on the user’s PC, while the TensorRT-LLM library uses the Tensor Cores of the graphics card to optimize and accelerate the AI’s analysis.
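To make the RAG idea more concrete, here is a minimal sketch of the principle in Python. It is not how ChatRTX is implemented: the file names, their contents and the word-count similarity are assumptions chosen for illustration, whereas a real system would use learned embeddings and hand the final prompt to an LLM. The sketch only shows the two steps involved: retrieve the local document that best matches the question, then insert it into the prompt.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the names of the top_k documents most similar to the question."""
    query_vec = Counter(tokenize(question))
    scores = {
        name: cosine_similarity(query_vec, Counter(tokenize(text)))
        for name, text in documents.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


def build_prompt(question: str, documents: dict[str, str], top_k: int = 1) -> str:
    """Build the augmented prompt: retrieved context first, then the question."""
    context = "\n".join(documents[name] for name in retrieve(question, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical local knowledge base (stand-in for the user's text and PDF files).
documents = {
    "warranty.txt": "The laptop warranty lasts two years and covers the battery.",
    "recipe.txt": "Mix flour, eggs and milk, then cook the pancakes in a hot pan.",
}

prompt = build_prompt("How long does the warranty last?", documents)
print(prompt)  # this text is what would be sent to the local LLM
```

The LLM never needs to have seen your files during training: it only reads the passage that the retrieval step selected, which is why the tool can answer questions about private documents without an Internet connection.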

ChatRTX is currently only at the demo stage. Nvidia plans to improve it over time and to offer a full version in a few months. Today, Nvidia is the only player to offer a tool this easy to install and use for experimenting with LLMs.

ChatRTX is able to analyze photos

Nvidia is constantly improving its software. The latest update, released on May 1, 2024, brings many new features:

- Support for new LLMs, such as Gemma, Google’s latest LLM, and ChatGLM3, an open, bilingual (English and Chinese) LLM.

- Support for photos among the files that can be used as sources. ChatRTX users can thus easily search and interact with their own photos locally, without having to tag them with complex metadata, thanks to OpenAI’s CLIP.

- ChatRTX users can also talk to their own data (using their microphone), thanks to support for Whisper, an AI automatic speech recognition system that now lets ChatRTX understand spoken language.

Generate images with TensorRT and Stable Diffusion

Generating AI images on your own PC is also possible. The simplest option for this is still to use Stable Diffusion. To install it, you will need the following prerequisites:

- a GitHub account (to download and install AUTOMATIC1111);

- a HuggingFace account;

- Python version 3.10, downloaded and installed (we advise using the Microsoft Store to make installation easier);

- around thirty GB of free space on your hard drive;

- and if it’s the first time you’re doing it, allow at least a good hour for installation.
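Two of these prerequisites can be checked automatically before you start. The short script below is only a sketch using Python's standard library: the thresholds come from the list above, while the function names and the choice of checking the current folder's drive are assumptions made for this example.

```python
import shutil
import sys

REQUIRED_PYTHON = (3, 10)   # Python version recommended in the list above
REQUIRED_FREE_GB = 30       # "around thirty GB" of free disk space


def python_version_ok(version=sys.version_info) -> bool:
    """True when the interpreter's major.minor version is 3.10."""
    return tuple(version[:2]) == REQUIRED_PYTHON


def free_space_ok(free_bytes: int, required_gb: int = REQUIRED_FREE_GB) -> bool:
    """True when at least required_gb gibibytes are free."""
    return free_bytes >= required_gb * 1024**3


# Check the drive that holds the current folder (assumed install location).
free_bytes = shutil.disk_usage(".").free

print(f"Python {sys.version_info.major}.{sys.version_info.minor}:",
      "OK" if python_version_ok() else "3.10 is recommended")
print(f"Free space {free_bytes / 1024**3:.1f} GB:",
      "OK" if free_space_ok(free_bytes) else f"at least {REQUIRED_FREE_GB} GB is recommended")
```

Run it from the folder where you intend to install Stable Diffusion so that the disk check targets the right drive.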

We are not going to go into detail about installing Stable Diffusion locally on a PC with the AUTOMATIC1111 interface. Emmanuel Correia, from the YouTube channel AiAndPixels, explains it very clearly, and in French what’s more:

To save time and skip steps, you can also download and install Stability Matrix. This “all-in-one” installer spares you from worrying about which versions of the various pieces of software to use and which accounts to create.

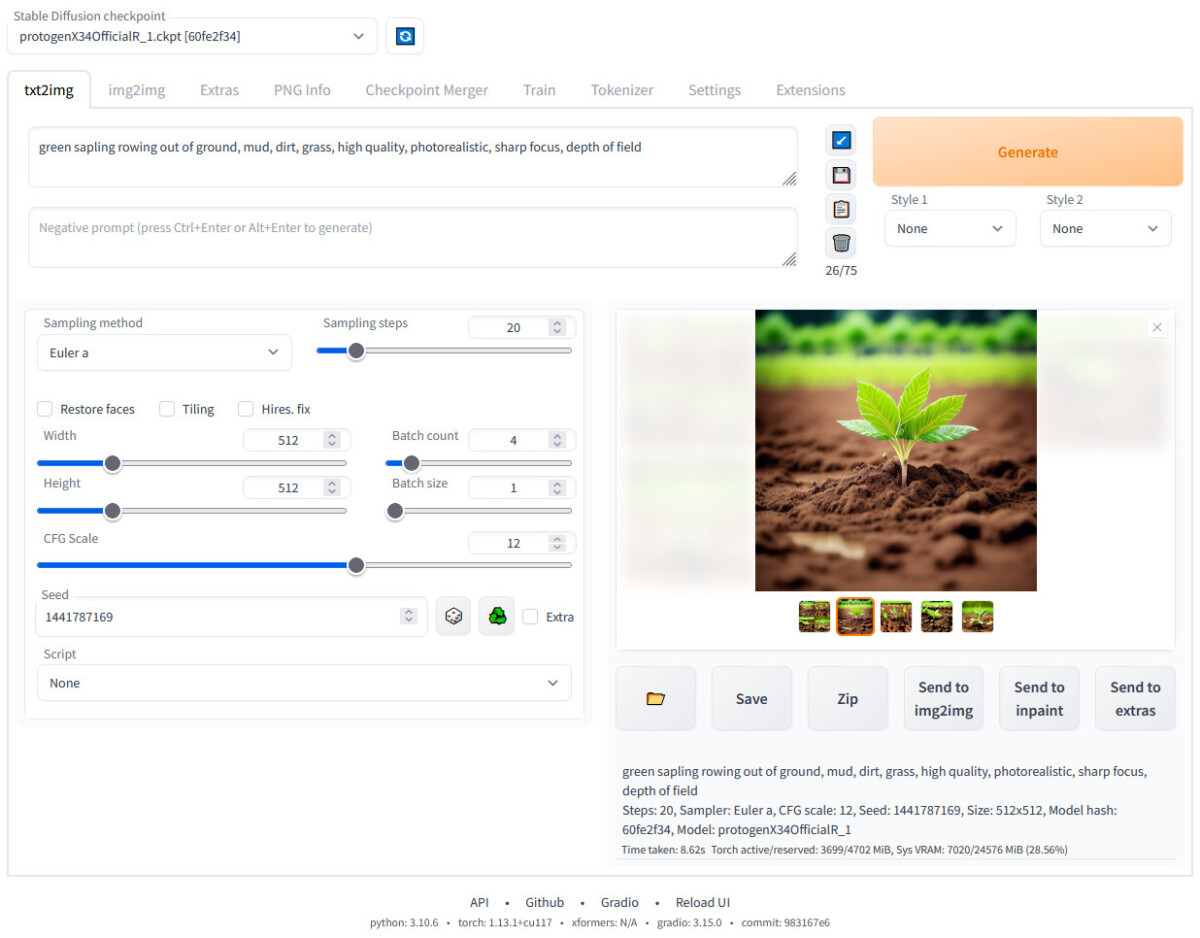

If all goes well, you should be able to access a visual interface displayed in your web browser (Web UI). It is very complete and offers a few more options than the official Stable Diffusion online interface. We nevertheless advise you to consult a few tutorials and other online documentation to get to grips with it.

The advantages of an Nvidia GeForce RTX 30 or 40 graphics card for generating images by AI

On the hardware side, an Nvidia GeForce graphics card is not mandatory for generating images, but the minimum configuration still needs to be robust. To start prompting in good conditions, plan on a GPU with at least 8 GB of VRAM and 16 GB of RAM in your PC (to be really comfortable, double these values). That said, not all graphics cards perform equally, and in this area Nvidia has a good head start.
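If you are not sure how much VRAM your card has, the `nvidia-smi` utility installed with Nvidia's drivers can report it. The sketch below, under the assumption that `nvidia-smi` is available on your PATH, queries the card name and total memory and compares it with the 8 GB floor mentioned above. The function names and the sample values in the comments are illustrative, and system RAM is deliberately left out because the standard library has no portable way to read it.

```python
import subprocess

MIN_VRAM_GB = 8  # minimum suggested above; double it to be comfortable


def parse_gpu_memory(smi_output: str) -> list[tuple[str, float]]:
    """Parse 'name, memory_in_MiB' lines into (name, memory_in_GiB) pairs."""
    gpus = []
    for line in smi_output.strip().splitlines():
        # Split on the last comma so a comma in the card name cannot break parsing.
        name, memory_mib = line.rsplit(",", 1)
        gpus.append((name.strip(), int(memory_mib) / 1024))
    return gpus


def query_gpus() -> list[tuple[str, float]]:
    """Ask nvidia-smi for each GPU's name and total memory; empty list on failure."""
    try:
        output = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
        return parse_gpu_memory(output)
    except (FileNotFoundError, subprocess.CalledProcessError, ValueError):
        # No Nvidia driver, no Nvidia GPU, or output in an unexpected format.
        return []


gpus = query_gpus()
if not gpus:
    print("No Nvidia GPU detected through nvidia-smi.")
for name, vram_gb in gpus:
    verdict = "enough to get started" if vram_gb >= MIN_VRAM_GB else "below the suggested minimum"
    print(f"{name}: {vram_gb:.1f} GB of VRAM, {verdict}")
```

On a machine without an Nvidia card or driver, the script simply reports that no GPU was detected rather than failing.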

Indeed, the first benchmarks run with Procyon, the new benchmark software from the creators of 3DMark, are clear. The results published by Hardware & Co clearly show the superiority of Nvidia’s GeForce cards over the competition: a “simple” GeForce RTX 4070 achieves higher scores than the competition’s most expensive graphics card.

Nvidia: a step ahead in the world of generative AI

This is no surprise, given how far ahead Nvidia is in AI, deep learning and especially software. The company does not just produce the most efficient hardware in the field; it also offers drivers and software extensions to get the most out of it. Nvidia engineers recently released an extension of the TensorRT library dedicated to Stable Diffusion, TensorRT Extension for Stable Diffusion Web UI, which plugs directly into the AUTOMATIC1111 interface.

Nvidia’s lead in generative AI is unlikely to shrink any time soon. The American brand is now present on every AI front: text and video generation, image generation in video games, and image generation and 3D rendering in art. To discover the full range of possibilities offered by the manufacturer’s hardware and software solutions, the brand’s blog also has a dedicated section for keeping up with advances in this area.