At its Search On event on Wednesday, Google announced new features that go well beyond traditional text search.

For years, Google has been developing ever more natural and intuitive ways to search for information. Beyond the text search of yesteryear, it is now possible to search from a photo or a voice command. On Wednesday, the Mountain View company used its Search On event to show what advances in artificial intelligence make possible, through new, ever more immersive features.

The Multisearch feature, which lets you search with images and text at the same time, is coming to Europe soon

Until now it has been available only in beta in the United States, but it will expand to more than 70 new languages, including French, in the coming month. What are we talking about? The Multisearch feature, which builds on Google Lens, the visual search tool launched in 2017 that now handles some 8 billion requests per month.

Multisearch lets you search with images and text at the same time. This first feature will soon be available in France, but Google has already promised to launch an improved version in the United States this fall. It is called Multisearch near me. Here, the user takes a photo of an object, a plant or a dish, and Google instantly tells them where to find it nearby. Quite impressive.

A new era for translation

Google Translate is also in for a revolution. The American firm wants its translation tool to help break down language barriers through visual communication. That is how Google, with the help of AI, has moved from translating text to translating images. One figure shows how useful the tool is: each month, the company records more than a billion uses of text translation from a photo, across 100 different languages.

To go further, Google now lets users blend the translated text directly back into the original image, using GANs (Generative Adversarial Networks), a fairly recent and particularly promising technology that can be thought of as the creative side of machine learning.

In practice, if you point your camera at a magazine in a foreign language, Google will automatically translate the text and overlay it on the images on the page, as if it had been printed that way.

The immersive view enriches Google Maps more than ever

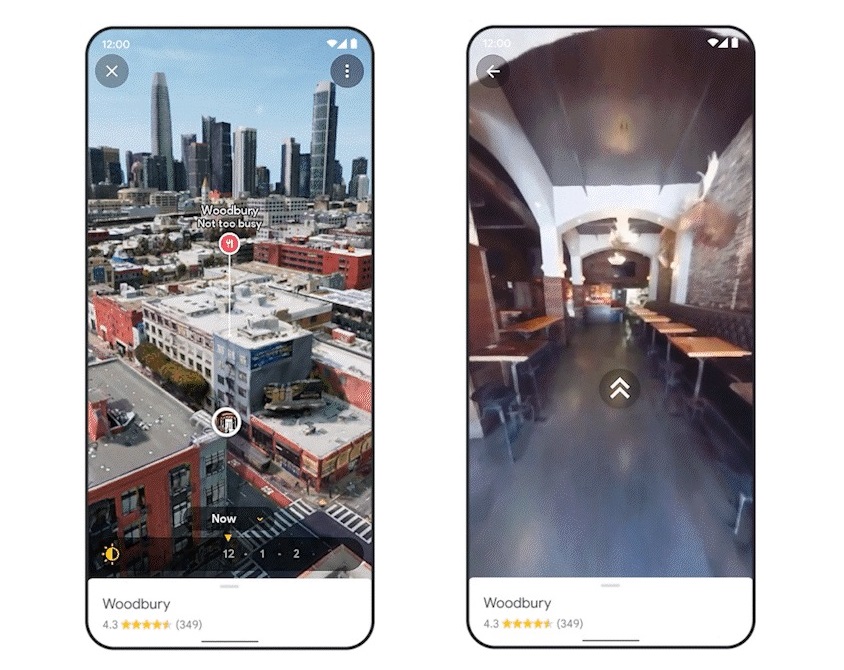

Thanks to progress in predictive models and computer vision, Google proudly claims to have reimagined the concept of the map, moving from a 2D image to a multidimensional view of our world. The goal is to let users picture a place almost as if they were physically there.

We were already familiar with real-time traffic display, which relies in particular on the crowdsourced side of Google Maps, but the application will now be enriched by the immersive view. This is a dynamic representation of a wide range of information, such as how busy a place is and the weather, on top of everything Maps already provides. Users can thus get a fairly precise idea of a location before even setting foot there.

Take the example of a restaurant: the immersive view lets you zoom in first on the neighborhood, then on the establishment itself, and see how busy the place is at a given time of day (a slider helps the user choose the exact time), along with the weather.

Obviously, this feature requires considerable human resources and aerial imagery, so it is particularly complex to deploy. That is our one small regret. As a result, 250 landmarks are available in the first version of this feature. The immersive view will launch in full in five cities (San Francisco, Los Angeles, New York, London and Tokyo) in the coming months. Other cities will no doubt follow later.
