When you type a command (a prompt) into a generative artificial intelligence (AI) program such as ChatGPT, the program gives you an answer based not only on what you just typed, but also on anything you typed earlier in the conversation.

We can therefore think of the conversation history as a kind of memory. But that’s not enough, according to researchers at several institutions, who are trying to give generative AI something closer to a better-organized memory.

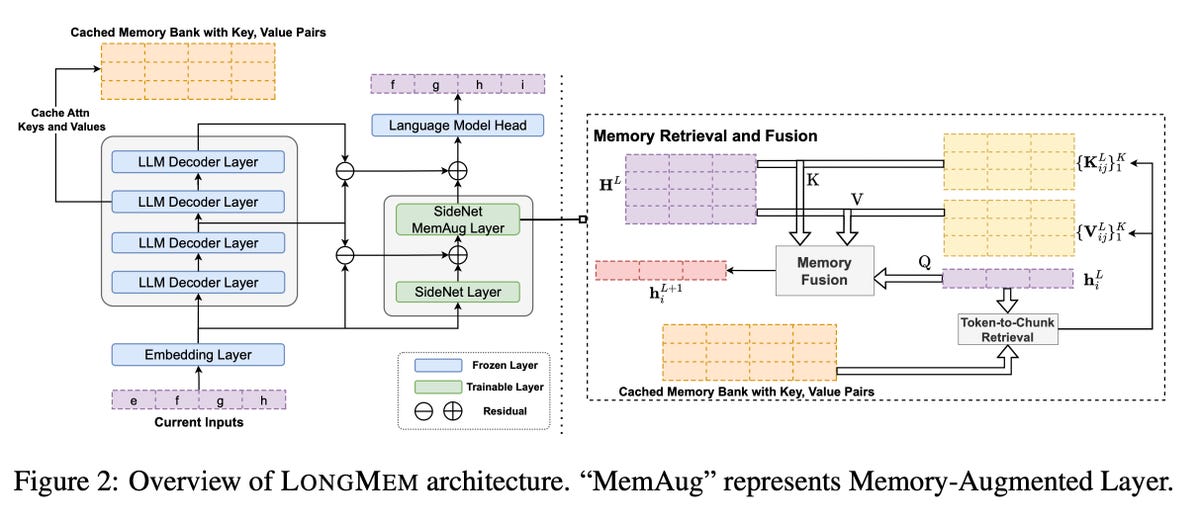

A paper published this month by researchers at the University of California, Santa Barbara, and collaborators at Microsoft, titled “Augmenting Language Models with Long-Term Memory” and posted on arXiv, adds a new component to language models.

UC Santa Barbara, Microsoft

The prompt length limit problem

The initial problem is that tools such as ChatGPT can only accept prompts of limited length. That limit “prevents them from generalizing to real-world scenarios where the ability to process long pieces of information is essential.”

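OpenAI’s GPT-3, for example, accepts a maximum input of about 2,000 tokens (2,048, to be exact). Tokens are fragments of text: a token can be a whole word, part of a word, or a punctuation mark, and in English one token averages roughly three-quarters of a word. You cannot give the program a 5,000-word article, for example, let alone a 70,000-word novel.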
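To make the arithmetic concrete, here is a minimal Python sketch that estimates whether a text fits in GPT-3’s 2,048-token window. It assumes the common rule of thumb of about 0.75 English words per token; the function names are illustrative, and an exact count would have to come from the model’s own tokenizer.

```python
import math

# Assumption: roughly 0.75 English words per token, a common rule of thumb.
# Real counts depend on the specific tokenizer and on the text itself.
WORDS_PER_TOKEN = 0.75
CONTEXT_WINDOW = 2048  # GPT-3's input limit, in tokens

def estimated_tokens(word_count: int) -> int:
    """Rough token estimate for an English text of `word_count` words."""
    return math.ceil(word_count / WORDS_PER_TOKEN)

def fits_in_window(word_count: int, window: int = CONTEXT_WINDOW) -> bool:
    """True if the estimated token count does not exceed the window."""
    return estimated_tokens(word_count) <= window

for words in (1_000, 5_000, 70_000):
    print(f"{words:>6} words ~ {estimated_tokens(words):>6} tokens, fits: {fits_in_window(words)}")
```

Under that assumption, a 1,000-word text fits, while a 5,000-word article (about 6,700 tokens) and a 70,000-word novel (about 93,000 tokens) do not.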

It is possible to keep widening the input “window,” but that runs into a thorny computing problem. The “attention operation,” the essential tool of all major large language programs, including ChatGPT and GPT-4, has “quadratic” computational complexity, in the sense computer scientists use when they talk about time complexity. This means that the computation attention requires grows with the square of the amount of data the model receives as input: doubling the input length roughly quadruples the work. Widening the window therefore inflates the required computation time.
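The quadratic cost is easy to see in a naive implementation. The following Python sketch computes single-head scaled dot-product attention over toy vectors and counts the query-key scores it needs. It is an illustration, not how production models are implemented, and unlike GPT-style models it applies no causal mask (a mask roughly halves the work, which is still quadratic).

```python
import math

def attention(queries, keys, values):
    """Naive single-head scaled dot-product attention in pure Python.

    Returns the outputs and the number of query-key scores computed,
    which is len(queries) * len(keys): quadratic in sequence length
    when queries and keys come from the same n-token input.
    """
    d = len(keys[0])
    outputs = []
    scores_computed = 0
    for q in queries:
        # One score per key: this inner loop is what makes the cost n * n.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        scores_computed += len(scores)
        # Softmax over the scores (subtracting the max for numerical stability).
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the attention-weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs, scores_computed

for n in (64, 128, 256):
    x = [[0.01 * ((i + j) % 7) for j in range(4)] for i in range(n)]  # toy 4-dim vectors
    _, count = attention(x, x, x)
    print(f"n = {n:>3}: {count:>6} query-key scores")
```

Each doubling of the input length quadruples the number of scores, which is the quadratic growth that makes wider windows expensive.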

Memory desynchronization

This is why some researchers have already tried to build a rudimentary memory. Last year Google introduced the Memorizing Transformer, which caches internal representations (key-value pairs) of text it has already processed so that it can draw on them later. This lets it operate on 65,000 tokens at a time.

But Google notes that this stored data can quickly become outdated. During training, the network’s neural weights, or parameters, keep being updated, so representations cached earlier fall out of sync with the current state of the network.
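The staleness problem can be illustrated with a deliberately tiny example. In this hypothetical Python sketch, a one-parameter “encoder” computes keys that are cached, then a made-up training step changes the parameter, and the cached keys no longer match what the updated model would compute. Real models have vastly more parameters and many layers, but the mismatch arises in the same way.

```python
def encode(x: float, weight: float) -> float:
    """Hypothetical one-parameter 'encoder' that turns an input into a key."""
    return weight * x

def cache_keys(inputs, weight):
    """Store keys computed with the model's weights as they are at caching time."""
    return [encode(x, weight) for x in inputs]

def staleness(cached, inputs, current_weight):
    """Gap between each cached key and the key the current model would compute."""
    return [abs(c - encode(x, current_weight)) for c, x in zip(cached, inputs)]

inputs = [0.5, 1.2, -0.3]
weight = 1.0
cached = cache_keys(inputs, weight)

# One training step changes the weight (made-up learning rate and gradient).
learning_rate, gradient = 0.1, 2.0
weight -= learning_rate * gradient

print(staleness(cached, inputs, weight))  # every gap is nonzero: the cache is out of sync
```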

Microsoft’s solution, called “Language Models Augmented with Long-Term Memory,” or LongMem, uses a conventional large language model that does two things. As it processes the data, it stores some of it in a memory bank. It also passes its output for each prompt to a second neural network, called SideNet.
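A minimal Python sketch of the writing side might look like the following. It assumes, as we read the LongMem paper, that the memory bank caches key-value pairs produced by the unchanged main model and evicts the oldest entries first when it is full. `frozen_backbone` is a hypothetical stand-in that fabricates toy keys and values rather than running a real language model.

```python
from collections import deque
from typing import Deque, List, Tuple

Vector = List[float]

class MemoryBank:
    """Fixed-capacity cache of (key, value) pairs; the oldest entries are evicted first."""

    def __init__(self, capacity: int) -> None:
        self.entries: Deque[Tuple[Vector, Vector]] = deque(maxlen=capacity)

    def write(self, keys: List[Vector], values: List[Vector]) -> None:
        # A deque with maxlen silently drops items from the left when full.
        for k, v in zip(keys, values):
            self.entries.append((k, v))

    def __len__(self) -> int:
        return len(self.entries)

def frozen_backbone(tokens: List[int]) -> Tuple[List[Vector], List[Vector]]:
    """Hypothetical stand-in for the main LLM: returns toy per-token keys and values."""
    keys = [[float(t), 1.0] for t in tokens]
    values = [[float(t) * 10] for t in tokens]
    return keys, values

bank = MemoryBank(capacity=4)
for chunk in ([1, 2, 3], [4, 5, 6]):
    keys, values = frozen_backbone(chunk)
    bank.write(keys, values)  # job 1: cache part of what the backbone computed
    # job 2 (not shown): hand the backbone's outputs to SideNet

print(len(bank), [k[0] for k, _ in bank.entries])
```

With a capacity of four, the entries for the first two tokens have been pushed out by the time the second chunk is written.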

Digesting Project Gutenberg, arXiv, and ChapterBreak

SideNet, which is also a language model, compares the prompt typed by a person with the contents of memory to see whether there is a relevant match. Unlike the Memorizing Transformer, SideNet can be trained on its own, independently of the main language model. Because the main model is not updated while SideNet trains, the contents of memory do not fall out of sync with it, which avoids the outdated-memory problem.
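The matching step can be pictured as a nearest-neighbor lookup. This Python sketch ranks cached key-value pairs by dot-product similarity with a query vector and returns the values of the top-k matches. It is a generic retrieval sketch with hypothetical vectors, not the exact retrieval scheme described in the LongMem paper.

```python
from typing import List, Tuple

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    """Dot-product similarity between two vectors."""
    return sum(x * y for x, y in zip(a, b))

def retrieve(query: Vector, memory: List[Tuple[Vector, Vector]], k: int) -> List[Vector]:
    """Return the values whose keys score highest against the query (top-k by dot product)."""
    ranked = sorted(memory, key=lambda kv: dot(query, kv[0]), reverse=True)
    return [value for _, value in ranked[:k]]

memory = [
    ([1.0, 0.0], [10.0]),  # hypothetical key/value pairs cached earlier
    ([0.0, 1.0], [20.0]),
    ([0.7, 0.7], [30.0]),
]
print(retrieve([1.0, 0.1], memory, k=2))
```

Here the query scores 1.0, 0.1, and 0.77 against the three keys, so the first and third values are returned.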

Microsoft ran tests comparing LongMem with the Memorizing Transformer and with OpenAI’s GPT-2 language model. The researchers also compared LongMem with results reported for other language models, including the 175-billion-parameter GPT-3.

To do this, they used tasks based on three datasets that involve processing very long texts, including entire books and scientific papers: Project Gutenberg, the arXiv preprint server, and ChapterBreak.

At the heart of writing technique

To give you an idea of the scale of these tasks: ChapterBreak, introduced last year by Simeng Sun and colleagues at the University of Massachusetts Amherst, takes entire books and tests whether a language model, given the text up to the end of a chapter, can correctly pick out, among several candidate passages, the one that begins the next chapter.

Such a task “requires a thorough understanding of long-term dependencies,” such as shifts in the location and timing of events, and of techniques such as “analepsis,” where “the next chapter is a ‘flashback’ to an earlier moment in the story.”

That means processing tens or even hundreds of thousands of tokens.
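The ChapterBreak setup is essentially a multiple-choice test. The Python sketch below picks the highest-scoring candidate and computes accuracy; the scorer is a hypothetical word-overlap heuristic standing in for a language model’s judgment. The second toy example shows why a flashback can fool shallow matching: the true next chapter shares few words with the end of the previous one.

```python
from typing import Callable, List, Tuple

# (context, candidate passages, index of the true next-chapter opening)
Example = Tuple[str, List[str], int]
Scorer = Callable[[str, str], float]

def pick_next_chapter(context: str, candidates: List[str], score: Scorer) -> int:
    """Index of the candidate the scorer rates as the most plausible continuation."""
    return max(range(len(candidates)), key=lambda i: score(context, candidates[i]))

def accuracy(examples: List[Example], score: Scorer) -> float:
    """Fraction of examples where the chosen candidate is the true next chapter."""
    correct = sum(pick_next_chapter(ctx, cands, score) == gold for ctx, cands, gold in examples)
    return correct / len(examples)

def word_overlap(context: str, candidate: str) -> float:
    """Hypothetical toy scorer: number of distinct words shared with the context."""
    return len(set(context.lower().split()) & set(candidate.lower().split()))

examples: List[Example] = [
    ("the storm hit the harbor at dawn",
     ["the harbor lay in ruins", "a new king was crowned"], 0),
    # The true next chapter is a flashback, so it shares few words with the context.
    ("she left paris for good that winter",
     ["years earlier she had been a child in london", "paris was cold that winter"], 0),
]
print(accuracy(examples, word_overlap))
```

The toy scorer gets the first example right and is misled by the flashback in the second, which is the kind of long-range dependency the benchmark is designed to probe.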

A program that allows any LLM to store very long sequences of information

When Sun and colleagues introduced ChapterBreak last year, they reported that the dominant language models struggled with it: GPT-3, for example, was right only 28% of the time. LongMem, however, “surprisingly” beat all standard language models, including GPT-3, with a score of 40.5%, even though it has only about 600 million neural parameters, roughly 300 times fewer than GPT-3’s 175 billion.

“The substantial improvements on these datasets demonstrate that LONGMEM can understand cached past context to nicely complement language modeling toward future inputs,” Microsoft writes.

And Microsoft’s work echoes recent research by ByteDance, the parent company of social media app TikTok.

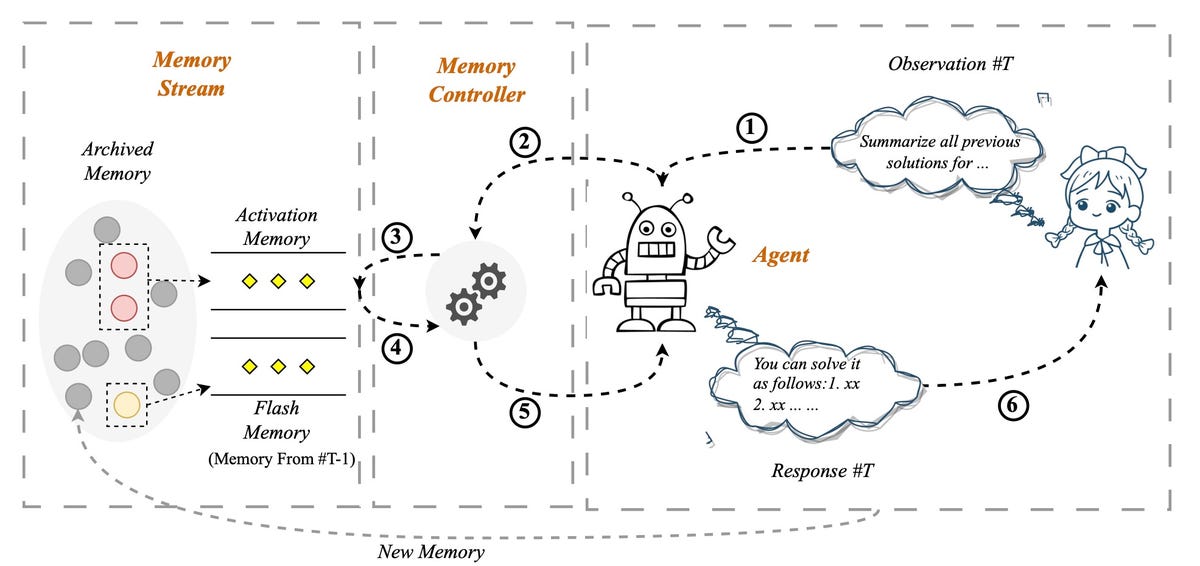

In an April paper on arXiv titled “Unleashing Infinite-Length Input Capacity for Large-scale Language Models with Self-Controlled Memory System,” ByteDance researcher Xinnian Liang and colleagues describe a program that enables any LLM to store very long sequences of information.

The “self-controlled memory system” from ByteDance, TikTok’s parent company, can tap into a database of hundreds of turns of dialogue and thousands of characters, giving any language model the ability to answer questions about past events better than ChatGPT can.

ByteDance

A “memory stream” summoned at prompt time

In practice, the researchers claim, the system can significantly improve a language model’s ability to place each new prompt in context and therefore respond appropriately, even better than ChatGPT does.

In the “self-controlled memory system,” or SCM, a memory controller evaluates what the user types at the prompt to determine whether it needs to tap into an archive called the “memory stream,” which contains all past interactions between the user and the program. It’s a bit like Microsoft’s SideNet and the memory bank that goes with it.

If memory is required, this collection of past entries can be accessed through a vector database tool such as Pinecone. The user’s input serves as a query, and the entries in the database are ranked by their relevance to it.
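Retrieval from the memory stream can be sketched in Python without a real vector database. In this sketch, an in-memory list stands in for a service such as Pinecone, and a toy bag-of-words vector stands in for a learned text embedding; dialogue turns are ranked by cosine similarity to the user’s input. The class and function names are hypothetical.

```python
import math
from collections import Counter
from typing import List

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a learned embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class MemoryStream:
    """In-memory stand-in for a vector database holding past dialogue turns."""

    def __init__(self) -> None:
        self.turns: List[str] = []

    def add(self, turn: str) -> None:
        self.turns.append(turn)

    def search(self, query: str, top_k: int = 1) -> List[str]:
        """Return the top_k stored turns most similar to the query."""
        q = embed(query)
        ranked = sorted(self.turns, key=lambda t: cosine(q, embed(t)), reverse=True)
        return ranked[:top_k]

stream = MemoryStream()
stream.add("user: my friend anna loves rock climbing and chess")
stream.add("user: what is the capital of france")
stream.add("user: we concluded that a high protein diet suits my fitness plan")
print(stream.search("what did we conclude about my fitness diet", top_k=1))
```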

Microsoft and TikTok’s work is an extension of the original purpose of language models

Some user queries do not require memory. A prompt such as “Tell me a joke,” for example, is a self-contained request that any language model can handle. By contrast, a query such as “Remember the conclusion we made last week on fitness diets?” is the kind of question that requires access to past conversations.
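The controller’s decision can be sketched as a function that returns true or false. In the SCM paper, as we understand it, this judgment is made by prompting the language model itself; the Python sketch below swaps in a crude keyword rule, with a hypothetical list of cue phrases, purely to show where the controller sits in the pipeline.

```python
# Hypothetical cue phrases suggesting the user is referring back to earlier turns.
# This keyword rule is a stand-in; it is not how the SCM controller actually decides.
MEMORY_CUES = ("remember", "last week", "earlier", "we discussed", "you said", "conclusion we")

def needs_memory(user_input: str) -> bool:
    """Crude controller: activate memory only if the prompt seems to refer to the past."""
    text = user_input.lower()
    return any(cue in text for cue in MEMORY_CUES)

print(needs_memory("Tell me a joke"))
print(needs_memory("Remember the conclusion we made last week on fitness diets?"))
```

A keyword list is brittle (it misses paraphrases and can fire on unrelated uses of a cue word), which is one reason to delegate the decision to a language model instead.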

ByteDance

When memory is needed, the user’s prompt and the memories retrieved from previous conversations are combined, in what the paper calls “input merging.” This combined text becomes the actual input to the language model, from which it generates its response.
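Input merging can be sketched as simple string assembly. The Python function below prepends retrieved memories to the current prompt and passes the prompt through unchanged when nothing was retrieved; the section labels are hypothetical, and SCM’s actual prompt template may differ.

```python
from typing import List

def merge_input(user_input: str, memories: List[str]) -> str:
    """Combine retrieved memories with the current prompt into one model input.

    The section labels below are hypothetical; SCM's real template may differ.
    """
    if not memories:
        return user_input  # no memory needed: the prompt goes to the model as-is
    memory_block = "\n".join(f"- {m}" for m in memories)
    return (
        "Relevant earlier conversation:\n"
        f"{memory_block}\n\n"
        f"Current user input: {user_input}"
    )

prompt = merge_input(
    "Remember the conclusion we made last week on fitness diets?",
    ["user: we concluded that a high protein diet suits my fitness plan"],
)
print(prompt)
```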

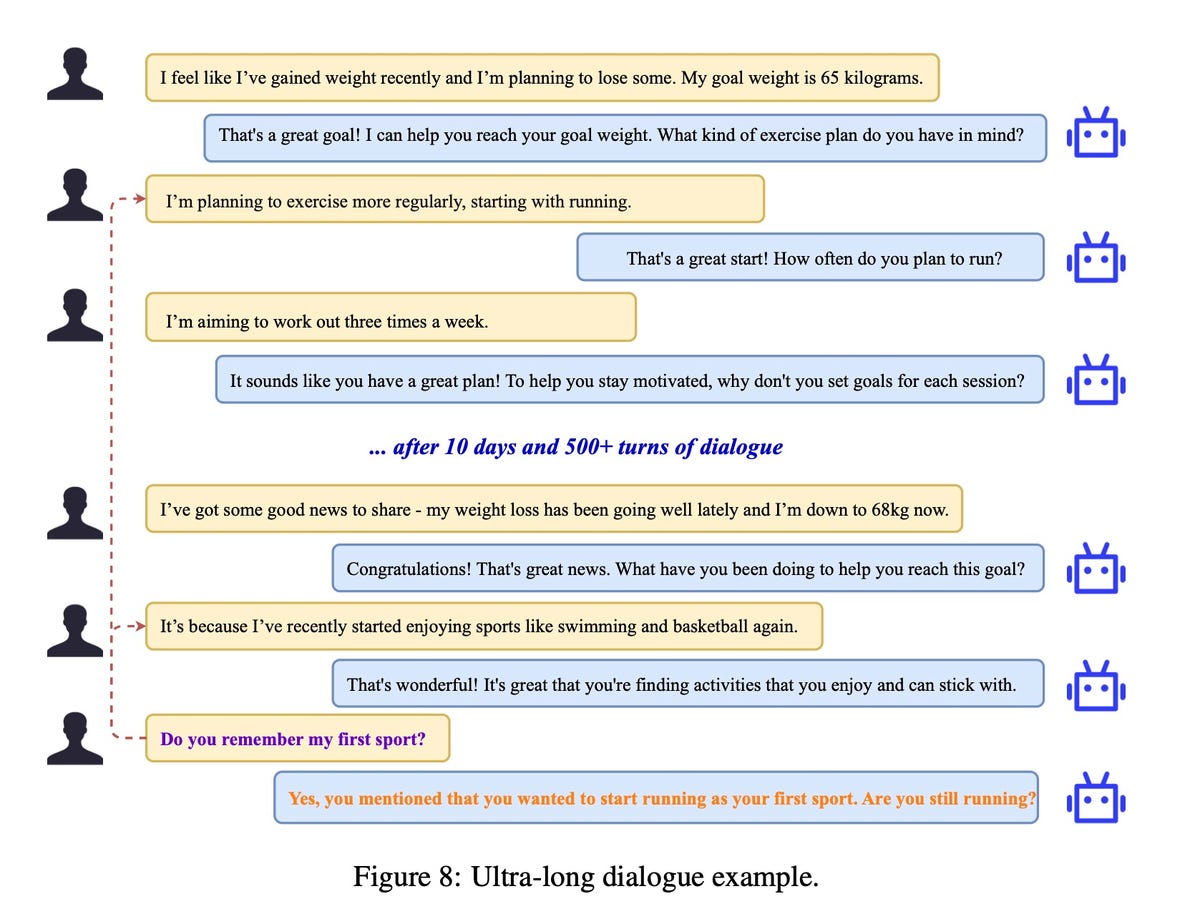

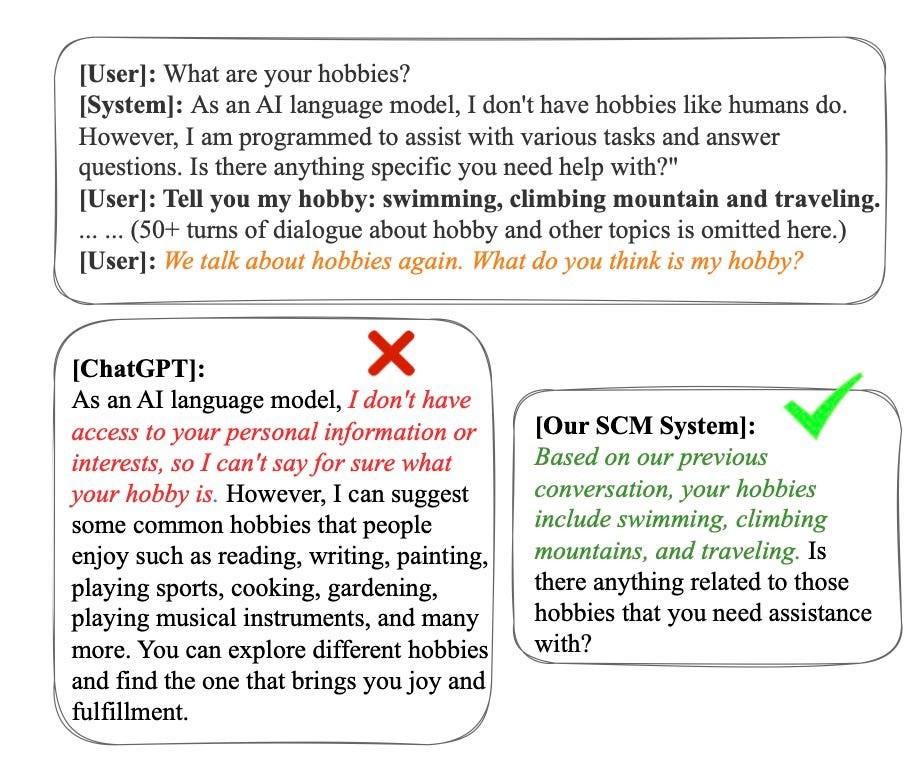

The end result, Liang and his team write, is that SCM can outperform ChatGPT in tasks that involve referencing hundreds of dialogue turns. They hooked up SCM to a version of GPT-3 called text-davinci-003 and compared its performance with ChatGPT’s on the same input.

ByteDance

In one test spanning more than 100 rounds of dialogue and 4,000 tokens, the human asks the machine to recall the hobbies of a person discussed at the start of the session. “The SCM system provides a precise answer to query, demonstrating exceptional memory capabilities,” they write, while “in contrast, it appears ChatGPT was distracted by a considerable amount of irrelevant historical data.”

The system can also summarize long texts of thousands of words, such as reports. It does so iteratively: it stores the first summary in the memory stream, then produces the next summary by combining the next part of the text with the previous summary, and so on.
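The iterative summarization loop can be sketched in Python as follows. The `summarize` argument stands in for a call to a language model; `toy_summarize` is a hypothetical placeholder that keeps only the first and last word of its input, so the control flow can run without a model. The chunk size is also an assumption.

```python
from typing import Callable, List, Tuple

def chunk_words(text: str, size: int) -> List[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def iterative_summary(text: str, summarize: Callable[[str], str],
                      chunk_size: int = 500) -> Tuple[str, List[str]]:
    """Summarize chunk by chunk, folding each new chunk into the running summary.

    Returns the final summary and the list of intermediate summaries
    (a stand-in for what would be stored in the memory stream).
    """
    memory_stream: List[str] = []
    summary = ""
    for chunk in chunk_words(text, chunk_size):
        # Combine the previous summary (if any) with the next chunk of text.
        summary = summarize(f"{summary}\n{chunk}" if summary else chunk)
        memory_stream.append(summary)
    return summary, memory_stream

def toy_summarize(text: str) -> str:
    """Hypothetical placeholder for an LLM call: keeps the first and last word."""
    words = text.split()
    return f"{words[0]} {words[-1]}" if words else ""

report = " ".join(f"w{i}" for i in range(1, 11))
final, stream = iterative_summary(report, toy_summarize, chunk_size=3)
print(final, len(stream))
```

Because each step sees the previous summary plus only one new chunk, the input to the model stays short no matter how long the report is.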

SCM can also make large language models that are not chatbots behave like chatbots. “Experimental results show that our SCM system enables LLMs, which are not optimized for multi-turn dialog, to achieve multi-turn dialog capabilities comparable to ChatGPT,” they write.

The work of Microsoft and TikTok can be seen as an extension of the original purpose of language models. Before ChatGPT and the Transformer, the Google-invented architecture it is built on, natural language tasks were often handled by what are known as recurrent neural networks (RNNs). A recurrent neural network processes its input one step at a time and carries a hidden state from step to step, so that what it saw earlier can inform how it handles the current input.
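For contrast, here is a minimal Python sketch of the recurrence at the heart of a simple RNN: a scalar hidden state updated as tanh(w_in · x + w_rec · h). The weights are hypothetical, and real RNNs use learned matrices over vectors; the point is that the same current input produces a different state depending on what came before.

```python
import math
from typing import List

def rnn(inputs: List[float], w_in: float = 0.5, w_rec: float = 0.9) -> List[float]:
    """Minimal scalar Elman-style RNN: h_t = tanh(w_in * x_t + w_rec * h_{t-1}).

    The default weights are hypothetical; a real RNN learns weight matrices.
    """
    h = 0.0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

# The same final input (0.0) yields different states depending on what came earlier.
print(rnn([1.0, 0.0])[-1], rnn([-1.0, 0.0])[-1])
```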

What use cases for LLM memory?

The Transformer, and LLMs such as ChatGPT, replaced RNNs with a simpler approach: attention. Attention automatically compares whatever is typed with everything typed before it, within the limits of the input window, so the past is always taken into account.

Research from Microsoft and TikTok therefore simply extends attention with algorithms explicitly designed to recall elements of the past in a more organized way.

Adding memory is such a basic tweak that it is likely to become a standard feature of LLMs, making it much more common for programs to connect new input with past items, such as chat history, or to handle the full text of very long works.