Microsoft unveiled Phi 2, a new open-source small language model (SLM), on its official blog on Wednesday.

Phi 2 has 2.7 billion parameters. According to Microsoft, it was trained on scientific knowledge and the company's proprietary datasets. Training took approximately two weeks on 96 NVIDIA A100 graphics processing units (GPUs).
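For a rough sense of the compute involved, the training figures above can be turned into GPU-hours. This is only an estimate that assumes "approximately two weeks" means 14 full days with all 96 GPUs in use the entire time; Microsoft gave only an approximate duration.

```latex
% Rough estimate; assumes 14 days of continuous use of all 96 A100 GPUs
96\ \text{GPUs} \times 14\ \text{days} \times 24\ \tfrac{\text{h}}{\text{day}} = 32{,}256\ \text{GPU-hours}
```

That is roughly 32,000 A100 GPU-hours, or about 1,344 GPU-days.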

According to the researchers, Phi 2 has not undergone reinforcement learning from human feedback (RLHF), the alignment technique used for OpenAI's ChatGPT. Even so, tests show that it is less prone to hallucinations and bias than its predecessor, Phi 1.5, and Meta's Llama 2.

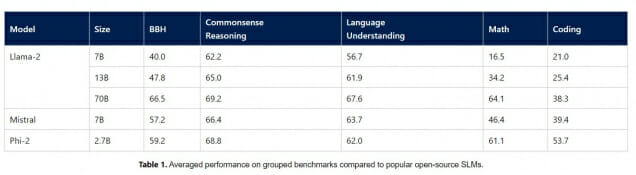

Microsoft compares Phi 2 to third-party open-source models

Microsoft compares Phi 2 to third-party open-source models in several categories, including reasoning, common sense, language comprehension, mathematics and coding.

Phi 2 outperforms third-party open-source models (Photo: Microsoft)

On average, Phi 2 outperforms the 7-billion-parameter Mistral model and the 7-billion and 13-billion-parameter versions of Meta's Llama 2. On multi-step reasoning tasks such as coding and math, it also outperforms the 70-billion-parameter Llama 2.

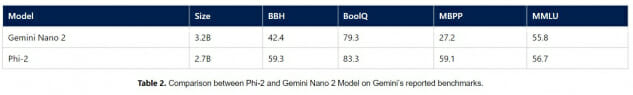

Phi 2 outperforms Gemini Nano 2 despite its smaller size (Photo: Microsoft)

Microsoft also compared Phi 2 with Gemini Nano 2, which Google recently unveiled. Like Phi 2, Gemini Nano 2 is designed for mobile devices. In testing, Phi 2 performed similarly to or better than Gemini Nano 2.

Toward running AI locally

“In the future, research into artificial intelligence systems can easily be done on an ordinary laptop or smartphone,” Microsoft said, “and Phi 2 is leading the way into the era of SLMs.”

For now, Phi 2 is available for research purposes only and may not be used commercially. “We are releasing Phi 2 as an open-source model for non-commercial, non-monetized research purposes only,” the company said.

Source: “ZDNet Korea”