Meta says it will begin identifying AI-generated content, including photos and videos, on Facebook and Instagram so as not to mislead its users. The same day, OpenAI added C2PA metadata to images generated by its DALL-E 3 tool.

“This image was generated by AI”: when inspecting images generated by OpenAI’s tools, it will now be easier to tell which artificial intelligence produced them.

OpenAI, the company led by Sam Altman and the creator of ChatGPT, announced this update in a blog post on February 7, 2024. It is based on the standard developed by the C2PA (Coalition for Content Provenance and Authenticity).

Founded in 2021 by Adobe and other organizations (BBC, Intel, Microsoft), the coalition aims to create a universal, open-source standard for recognizing the origin of images published online. The goal is to combat disinformation spread through doctored images designed to influence public opinion.

Determining whether an image has been altered is one of the biggest challenges in sharing information online. In the past, the concern was tools like Photoshop; now, generative AI makes it possible to create content in unprecedented volumes, with unmatched ease and speed.

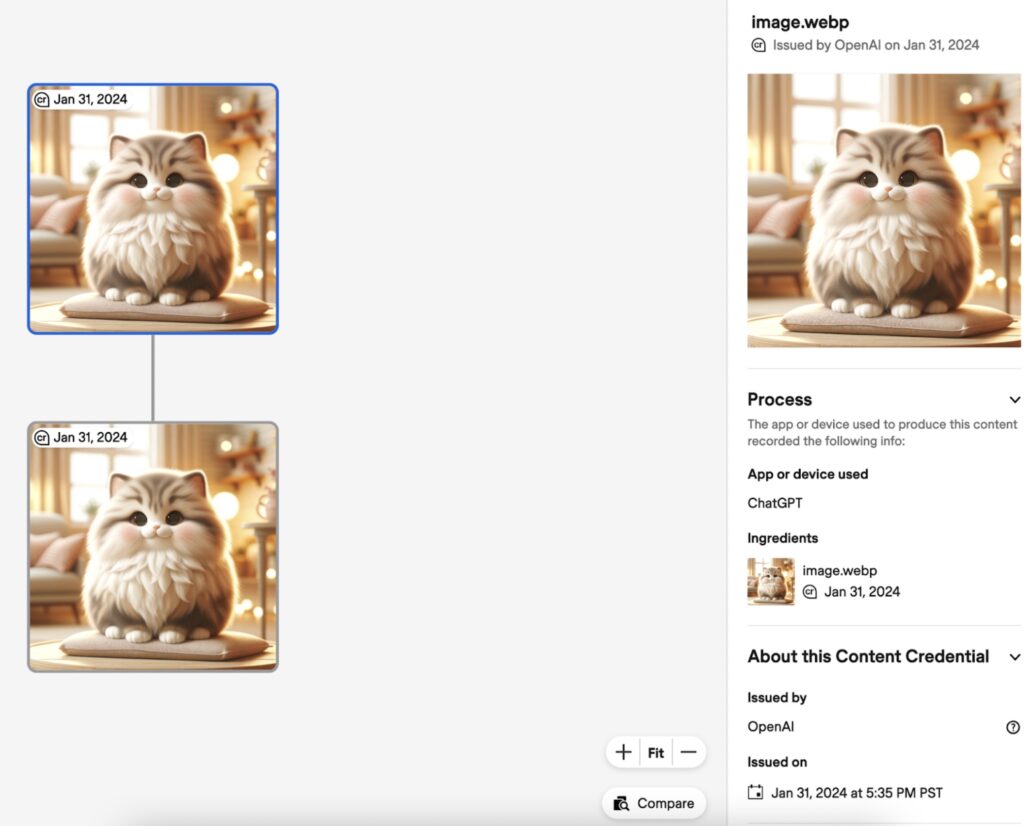

C2PA calls for adding metadata, that is, visible or invisible data that provides information about other data. In this case, it takes the form of a small visual in the top left corner of the image, showing its creation date and an icon indicating that the image is not a natural photograph. The metadata also states that the image was created with OpenAI’s tools and that the tool used was ChatGPT, which is more precise than before.

Currently, only still images are affected, not videos.

OpenAI notes, however, that these tools are not infallible: “Since metadata can be removed, its absence does not mean that an image was not produced by our AI,” the company points out in a tweet. “Wider adoption of methods to establish provenance, and encouraging users to recognize these signals, are steps toward increasing the trustworthiness of online information.”

How can AI be used responsibly?

Numerama tested DALL-E via ChatGPT 3.5 and ChatGPT 4, but so far the tool has not added the visual watermark to our images.

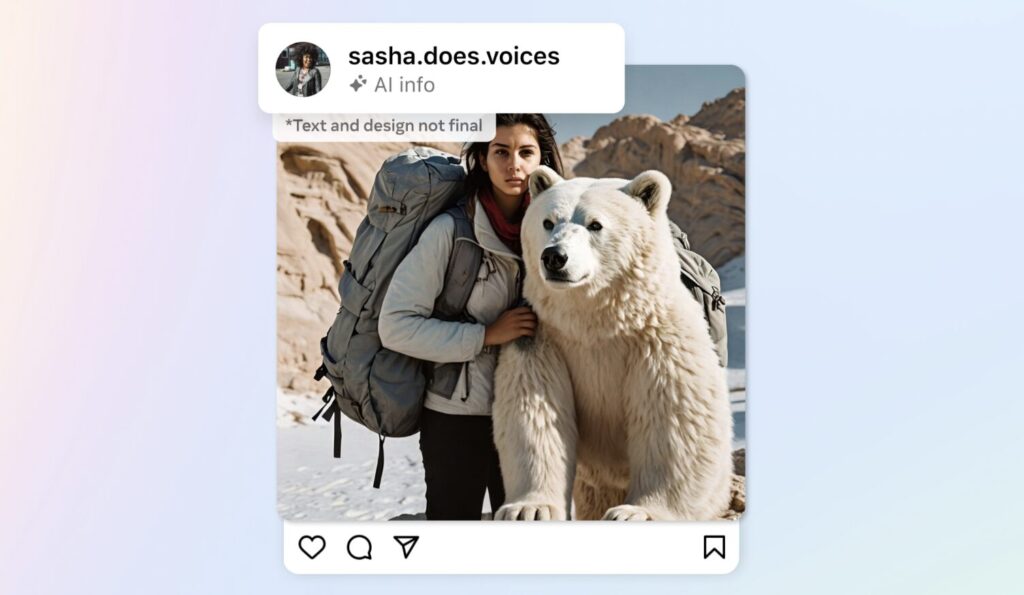

OpenAI’s announcement followed one from Meta (Facebook, Instagram) the same morning, saying it was working on detecting and labeling AI-generated content on its social networks. “In the coming months, we will label images that users post on Facebook, Instagram, and Threads when we can detect industry-standard indicators that they are AI-generated,” wrote Nick Clegg, Meta’s President of Global Affairs. Meta is also said to be developing internal tools capable of detecting AI images generated on competing platforms.

Users will also be able to declare for themselves whether an image they post was created by AI.

Many news sites have also struggled this past year to work out how to use image-generation tools without risking public distrust.

Numerama has decided to add a visible “AI” watermark (created by our design team) to every image produced with Midjourney or DALL-E, and to follow certain basic rules, such as never attempting to reproduce the likeness of a real public figure.
