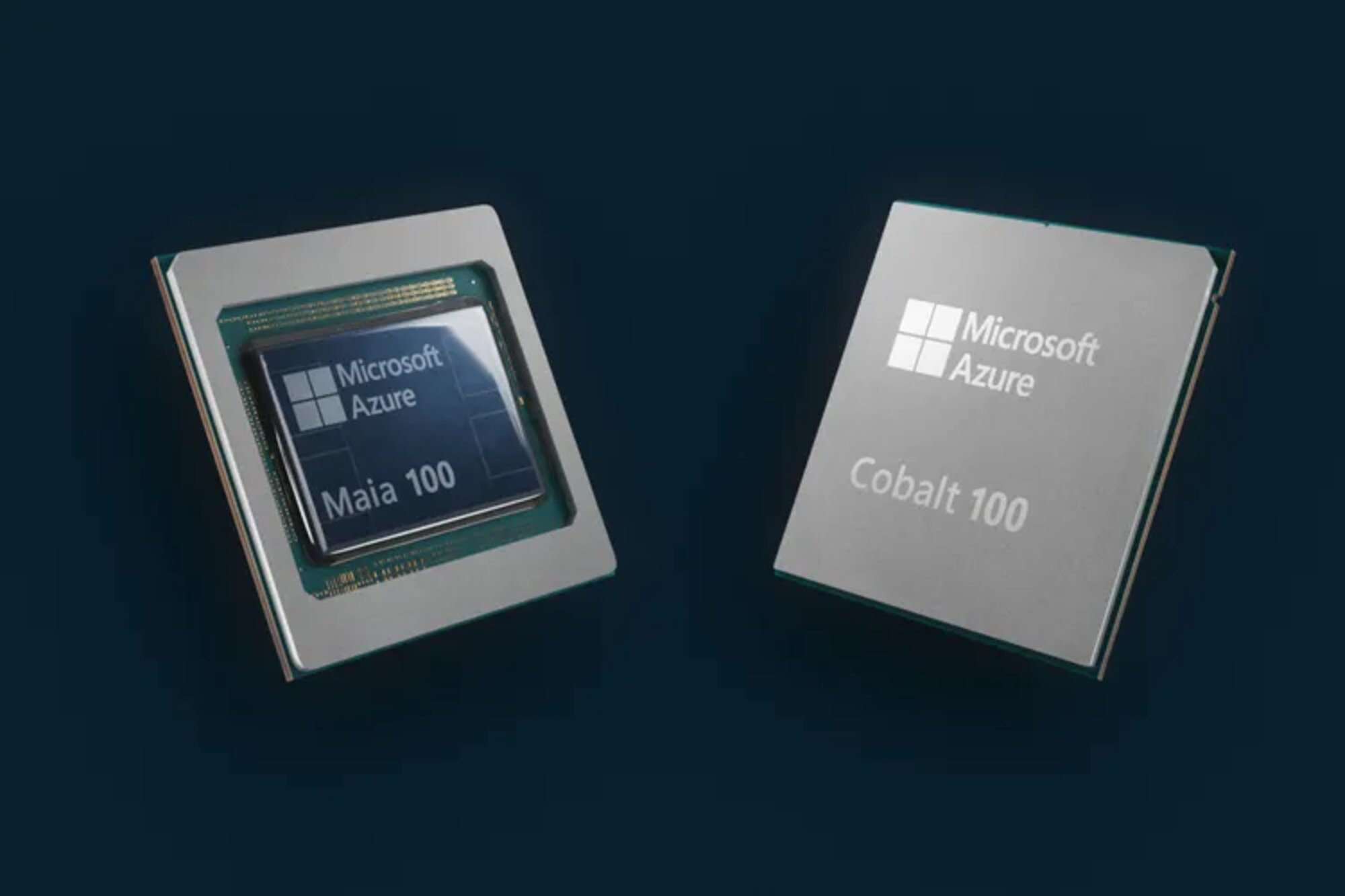

Microsoft has officially unveiled the Cobalt 100 and the Maia 100: respectively, a 128-core ARM CPU and an AI accelerator with 105 billion transistors. Both will power the company’s cloud services in the very near future.

For several months, rumor had it that Microsoft was working on its own processor for artificial intelligence, in part to reduce its reliance on third-party suppliers. The Redmond firm confirmed the rumor during its annual Microsoft Ignite conference.

In fact, it announced not one processor but two: the Azure Maia 100 and the Azure Cobalt 100.

Chips for data centers

Temper your expectations right away: you will not be able to install these chips in your home PC in place of an Intel Core or an AMD Ryzen. The Maia 100 AI Accelerator and the Cobalt 100 are designed for data centers.

More specifically, Microsoft presents the Maia 100, the first member of the Azure Maia family, as an AI accelerator specialized for workloads such as those of OpenAI models, GitHub and Copilot.

The Cobalt 100, the first member of the Azure Cobalt line, is a CPU (central processing unit) based on the ARM architecture and designed to run general-purpose workloads for the Microsoft cloud. The company mainly touts its energy efficiency.

On the technical side, the Maia 100 is built on a 5-nanometer process and contains 105 billion transistors. It is therefore a fairly massive chip: for comparison, NVIDIA’s H100, built on a 4-nanometer process, has 80 billion transistors. With its 153 billion transistors, however, AMD’s MI300X (manufactured on a 5-nanometer process by TSMC) keeps a comfortable lead in this area.
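To put the gap between the Maia 100 and the H100 into perspective, the short Python sketch below computes the relative difference between the two transistor counts quoted above. The names `MAIA_100`, `H100` and `percent_more` are purely illustrative, and transistor count alone says nothing about real-world performance.

```python
# Transistor counts in billions, as quoted in the article.
MAIA_100 = 105
H100 = 80


def percent_more(a: float, b: float) -> float:
    """Return by how many percent a exceeds b."""
    return (a / b - 1.0) * 100.0


# Relative size of the Maia 100 compared with the H100.
maia_vs_h100 = percent_more(MAIA_100, H100)
print(f"Maia 100 has {maia_vs_h100:.2f}% more transistors than the H100")
```

In other words, the Maia 100 packs roughly a third more transistors than NVIDIA’s chip.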

The Cobalt 100 CPU, for its part, is a 64-bit processor with 128 cores. Microsoft claims it improves performance by 40% over the ARM chips currently used in Azure, in applications like Microsoft Teams and Azure SQL. We will have to wait for more detailed benchmarks to gauge the capabilities of Microsoft’s new chips.

The importance of liquid cooling

On the infrastructure side, Microsoft has to redesign its server racks, and the company’s engineers are taking the opportunity to put the emphasis on liquid cooling. There is a good reason for this: Microsoft explains that artificial intelligence tasks involve intensive computation that consumes a lot of energy. Traditional air cooling is ill-suited to such loads, which makes liquid cooling the most appropriate way to keep the chips running efficiently without overheating. You can see a custom rack housing Maia 100 chips below.

Deployment is planned for early 2024, initially for Microsoft Copilot and Azure OpenAI Service.

Finally, note that Microsoft is not abandoning NVIDIA and AMD. The company says its main goal is to offer its customers more choice. To that end, it will soon offer new virtual machines powered by H100 GPUs (the NC H100 v5) and also plans to adopt NVIDIA’s new H200. ND MI300 virtual machines with AMD MI300X accelerators are also in the works.

Sources: Microsoft (1), Microsoft (2)
