Amazon Web Services and NVIDIA announced on Tuesday, during AWS re:Invent, the upcoming construction of an artificial intelligence supercomputer built on the powerful GH200, superchips that are set to propel the field of AI to new heights.

Let it be said: we are at the cutting edge of technology here. From the early-morning but already buzzing convention center at the Venetian hotel in Las Vegas on Tuesday, Amazon Web Services (AWS) announced that it had become the first cloud provider to adopt NVIDIA's GH200 Grace Hopper chip (named after the famous American computer scientist), equipped with the new multi-node NVLink technology.

This collaboration between the two tech giants aims to build new infrastructure, software and, above all, a supercomputer dedicated to generative artificial intelligence. Called NVIDIA DGX Cloud, it will be equipped, hold on tight, with more than 16,000 superchips and should be capable of processing 65 exaflops of AI. Dizzying figures!

Towards unparalleled AI power from AWS and NVIDIA, with an on-demand supercomputer

AWS instances equipped with the GH200 NVL32, which are meant to let developers push past traditional physical limits, will deliver supercomputer-class performance on demand, thanks to up to 20 TB of shared memory on a single Amazon EC2 instance. This advance is crucial for large-scale AI and machine learning workloads distributed across multiple nodes, in areas such as recommender systems, vector databases, and more.

The GH200 GPUs will power the EC2 instances with 4.5 TB of HBM3e memory, which is expected to allow larger models to run and to improve training performance. In addition, CPU-GPU memory connectivity will offer 7 times the bandwidth of PCIe (Peripheral Component Interconnect Express).

This CPU-GPU connectivity will also expand the total memory available to applications. The instances will be the first to integrate a liquid cooling system, ensuring optimal operation of high-density server racks. Each GH200 chip combines an ARM-based Grace processor with a Hopper-architecture GPU on the same module.

An “absolutely incredible” project, for the founder of NVIDIA

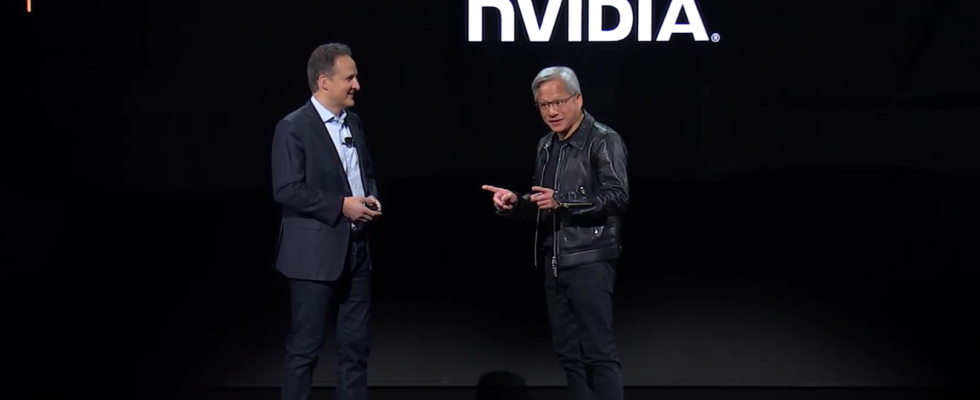

Jensen Huang, founder and CEO of NVIDIA, and Adam Selipsky, head of AWS, presented this innovation during the keynote of the AWS re:Invent 2023 event on Tuesday morning. Adam Selipsky highlighted the expansion of the collaboration between AWS and NVIDIA, which has been going on for several years now, and which the two leaders praised several times on stage.

The collaboration culminates in the integration of the GH200 NVL32 into the AWS Cloud, the creation of the NVIDIA DGX Cloud supercomputer on AWS, and the incorporation of popular NVIDIA software libraries. The DGX Cloud AI supercomputer, nicknamed “Project Ceiba”, will use 16,384 GH200 chips to reach a phenomenal AI processing power of 65 exaflops. Ceiba's superchips promise to cut the training time of the largest language models in half. “This project is absolutely incredible”, exclaimed Jensen Huang on stage, faithful to his leather jacket.
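For a sense of scale, the two headline figures can be divided out. The short Python sketch below is only a back-of-the-envelope check: it assumes the 65 exaflops are spread evenly across the 16,384 superchips, and the function name is ours for illustration, not an NVIDIA or AWS API.

```python
# Figures quoted in the article for the Project Ceiba cluster.
TOTAL_AI_EXAFLOPS = 65
NUM_SUPERCHIPS = 16_384


def per_chip_petaflops(total_exaflops: float, chips: int) -> float:
    """Average AI throughput per superchip, in petaflops.

    Assumes an even split across chips (1 exaflop = 1,000 petaflops).
    """
    return total_exaflops * 1_000 / chips


if __name__ == "__main__":
    value = per_chip_petaflops(TOTAL_AI_EXAFLOPS, NUM_SUPERCHIPS)
    print(f"{value:.2f} petaflops per superchip")
```

Under that assumption, each superchip would account for a little under 4 petaflops of the announced AI compute.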

Huang said the DGX supercomputer will also be used for NVIDIA’s own AI research and development. Named after the imposing Amazonian Ceiba tree, the cluster will use its capabilities to advance AI in areas such as image, video and 3D generation, as well as robotics, digital biology and climate simulation, to name but a few.

With notable advances in computing power, memory and cooling, this collaboration between the two firms sets new standards for AI cloud infrastructure, paving the way for spectacular applications and innovations. GH200 instances and the DGX Cloud will be available on AWS in the coming year, the company promises, undoubtedly marking a major milestone in the AI and Cloud landscape.
