With its 2023 end-of-year holiday update, Tesla rolled out an improved version of its Tesla Vision parking assistance for vehicles that do not have ultrasonic sensors. After some time and numerous tests, it is time to take stock: useless gimmick or genuinely essential aid? Here is our opinion.

Traditionally, Tesla tries to spoil its customers at the end of the year with a software update that brings significant interface improvements as well as useful new features. This year the American brand has a lot at stake, since its Tesla Vision solution has yet to prove itself as a valid replacement for the ultrasonic sensors that disappeared from the Model 3 and Model Y in the second half of 2022.

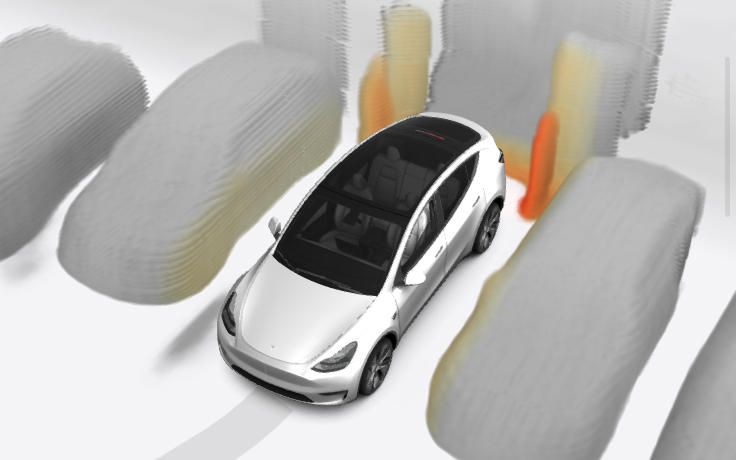

More than 12 months after switching to Tesla Vision, and 9 months after introducing a first parking assistance to replace the ultrasonic sensors, Tesla is releasing a parking assistance with a view reconstructed in 3D from the information collected by the cameras.

The result is quite unusual: it attempts to imitate a 360-degree view, but instead of displaying a real image, it shows a 3D rendering in shades of gray that is supposed to let you park your Tesla much better than by using the mirrors and cameras. We will look at the strengths and weaknesses of this system and, after a few days of in-depth testing, its limitations. Has Tesla finally managed to convince us with its Tesla Vision solution?

Still no real 360-degree view at Tesla, but we’re getting close

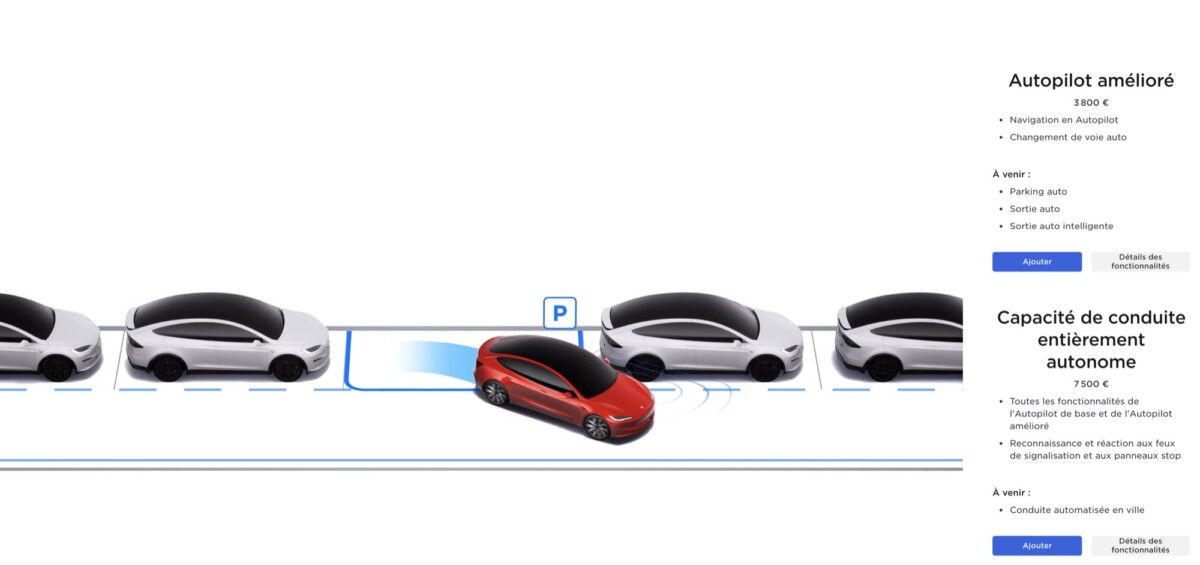

Since March 2023, parking assistance has been available on Tesla vehicles that do not have ultrasonic sensors. We covered it at the time, and the least we can say is that we did not find it particularly useful. Moreover, Tesla has just removed it completely, proof, if any were needed, that it was not very satisfactory.

The line we had until now, along with the distance indication in centimeters, has just disappeared from all Teslas without parking sensors. In its place is a three-dimensional visualization that attempts to beat the laws of physics by displaying the surroundings in 360 degrees, even though there is no camera in the front bumper.

As a result, there is a blind spot in front of the car, precisely where it matters most to have accurate information when parking nose-first. This area not covered by the cameras extends up to one meter in front of the bumper, and any object less than thirty centimeters high is therefore invisible to the on-board computer.

Note that this visualization is currently reserved for cars without sensors. Our conclusions could therefore be different for the brand's vehicles produced before the second half of 2022, which do have ultrasonic sensors, once they are able to use this visualization.

Tesla tries to beat the laws of physics

Tesla recognizes the problem: on the Cybertruck, a camera is present under the bumper, and the same will probably be true of future vehicles, as was shown on the page dedicated to the new Tesla Model 3 before being removed.

Without this camera, which is essential for a true 360-degree view, Tesla uses an ingenious but still imperfect solution. The cameras record what is visible while your Tesla is moving, and the on-board computer extrapolates the position of the entire environment, including whatever is in the blind spot, based on the movement of your vehicle.

In practice, we therefore get a visualization of the complete environment, even if some objects are invisible to the cameras at a given moment. However, no matter how much effort Tesla puts into its software, the laws of physics apply to Elon Musk's company as they do to the rest of the world: if an object enters the cameras' blind spot while the car is stationary, it will not appear in the visualization.
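To make the principle concrete, here is a minimal sketch of the idea, and in no way Tesla's actual implementation: obstacles seen earlier are remembered, and their position relative to the car is updated from the car's own movement. The function names, the coordinate convention (origin at the front bumper, x pointing forward, y to the left) and the 0.9 m half-width are our own illustrative assumptions; only the one-meter blind zone comes from the figures above.

```python
import math

BLIND_ZONE_DEPTH_M = 1.0       # "up to one meter in front of the bumper"
BLIND_ZONE_HALF_WIDTH_M = 0.9  # assumed half-width of the car, illustrative only


def update_remembered_points(points, forward_m, yaw_rad=0.0):
    """Re-express remembered obstacle points in the car's new frame.

    points: list of (x, y) in meters, in the car's previous frame.
    The car is assumed to move forward_m along its old x axis, then
    turn by yaw_rad (positive = to the left).
    """
    cos_t, sin_t = math.cos(yaw_rad), math.sin(yaw_rad)
    updated = []
    for x, y in points:
        # Shift by the distance travelled, then rotate by -yaw.
        dx, dy = x - forward_m, y
        updated.append((dx * cos_t + dy * sin_t, -dx * sin_t + dy * cos_t))
    return updated


def in_blind_zone(point):
    """True if the point lies in the assumed zone the cameras cannot see."""
    x, y = point
    return 0.0 <= x <= BLIND_ZONE_DEPTH_M and abs(y) <= BLIND_ZONE_HALF_WIDTH_M


# A low kerb was seen 3.0 m ahead while the car was still approaching.
remembered = [(3.0, 0.0)]
# The car then drives 2.5 m straight ahead.
remembered = update_remembered_points(remembered, forward_m=2.5)
kerb = remembered[0]
print(kerb, in_blind_zone(kerb))
```

The kerb ends up inside the blind zone but is still known, because it was seen before the car moved. An object that arrives in that zone while the car is parked was never in the list to begin with, which is exactly the limitation described above.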

In addition, depending on the conditions (fog, dirt, rain, etc.), the cameras may be imprecise, which will inevitably reduce the usefulness of the on-screen visualization. In that case, an alert also warns you that precision may be degraded.

If it’s not pretty, is it really useful?

Everyone agrees that the current visualization is anything but attractive. It is reminiscent of the early days of FSD beta, when everything was displayed in a very crude manner. No doubt it will improve over time and become more pleasing to the eye, but for now we will just have to make do with it.

In practice, does this visualization allow you to park properly? It must be admitted that there are some undeniable advantages, notably the display of the lines on the ground in parking lots. The rear and side cameras already made it possible to see the real environment when reversing into a space, but for those who prefer to park nose-first, it was sometimes difficult to see clearly where you were in relation to the white lines on the ground.

For parallel parking, the assistance will be more or less useful depending on the actual surroundings. In some attempts, like the one above, the camera feeds turn out to be much more useful: an exact view of the vehicle in front of us, and the ability to see the curb so as not to scratch the rims, are preferable to the display on the left of the screen.

As for the various obstacles that may be present, unfortunately it is difficult to see the point, because we can no longer trust the system once we have seen how it gets caught out: either we stop too far away, or we risk hitting the obstacle if it becomes invisible.

The car is relatively far from the pole, although the screen displayed “STOP”.

As you can see from the example above, the visualization is fairly faithful (lines on the ground, number of visible vehicles, location of posts and walls, etc.), but not very useful. The default view is the top view, and you have to manipulate the screen to get a different view… which returns to the default view after just a few seconds. We would have liked to be able to lock a view for a little longer, to be sure of being able to maneuver as we wish.

Displaying the camera feeds helps us much more than this reconstructed view, and by combining them with the exterior mirrors, we have much more confidence than when looking only at the left side of the screen. Tesla's technical prowess is undoubtedly very real, in that it manages to reconstruct a coherent environment using only the 8 cameras currently present (one at the rear, 4 on the sides, 3 at the top of the windshield), but the current look is unconvincing.

What's more, we really have a hard time trusting what is displayed, given that the word STOP appears as soon as we are about fifty centimeters from the slightest obstacle. Unless your parking spaces are 6 meters long and 3 meters wide, this information is of little use, since you will have to get even closer before being correctly parked.

To be optimistic, we could say that this is a step in the right direction and that we are hopeful that it will improve. Let’s hope that we can soon reach the level of augmented reality visualizations of the competition.

The laws of physics are there to remind us, however, that the impossible will not become achievable with the current hardware configuration. It would nevertheless be wrong to say that this visualization is useless: once again, there are situations where it is very relevant (lines on the ground in particular, since a measurement error there is not very risky). But with my own car (a late-2022 Tesla Model Y Propulsion), I will not rely on the visualization alone to judge the distance to a solid obstacle. And you?
