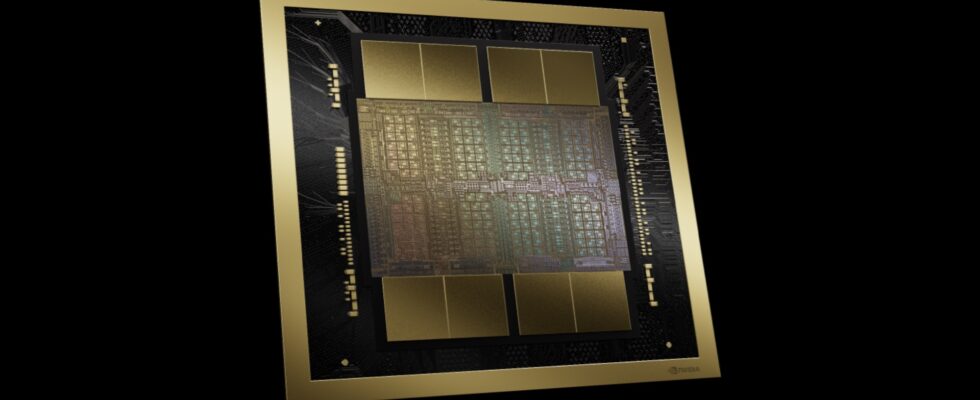

During its GTC conference, Nvidia lifted the veil on the Blackwell B200, a new GPU that it presents as a “super chip”. With 208 billion transistors and lower energy consumption, the Blackwell chip is the new ultimate weapon for players in generative artificial intelligence.

When you talk to ChatGPT or generate a video with Sora in the coming months, it may be Nvidia’s Blackwell B200 chips operating behind the scenes.

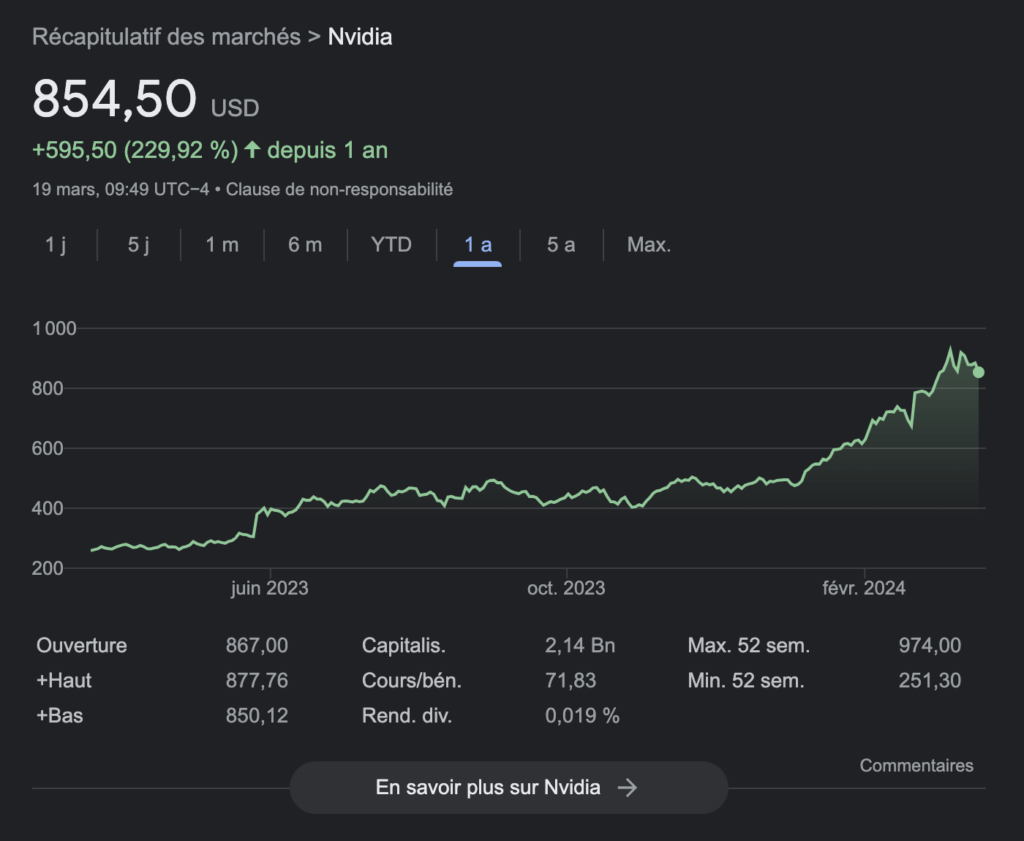

On March 18, at its flagship GTC conference, Nvidia unveiled a new generation of GPUs that generative AI companies will no doubt rush to order by the thousands. Nvidia is already in a strong position in this sector: its graphics cards power most of the services on the market, which helped triple its market value in one year. With new components designed for AI, it is now widening the gap with its competitors. The Blackwell B200 is not only more powerful, it is also advertised as much more energy efficient.

Using much less energy: Blackwell’s feat

Today, Nvidia owes part of its success to its H100 chips, which companies such as OpenAI, Midjourney, Google and Adobe are competing to get. These GPUs are very well suited to AI, and demand for them made Nvidia's sales explode in just a few months.

With H100 GPUs based on the Hopper architecture (Blackwell's predecessor), training a large language model (LLM) with 1.8 trillion parameters takes 8,000 chips and 15 megawatts of power. With its new Blackwell architecture, Nvidia promises to cut the number of chips required by a factor of four (2,000 would be enough), with power consumption of only 4 megawatts for the same training job. That could make it easier to run LLMs on more modest installations.
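To put Nvidia's figures side by side, here is a quick back-of-the-envelope calculation. It only uses the numbers quoted above; dividing the total power by the number of chips is a simplifying assumption (the quoted megawatts cover the whole installation, not just the GPUs), so the "per chip" value is an average, not a GPU spec.

```python
# Training figures quoted by Nvidia for a 1.8-trillion-parameter model.
HOPPER = {"chips": 8_000, "megawatts": 15.0}
BLACKWELL = {"chips": 2_000, "megawatts": 4.0}

def ratio(old: dict, new: dict, key: str) -> float:
    """How many times larger the old figure is than the new one."""
    return old[key] / new[key]

def watts_per_chip(cfg: dict) -> float:
    """Total installation power spread evenly over the chips (an assumption)."""
    return cfg["megawatts"] * 1e6 / cfg["chips"]

print(f"chips: {ratio(HOPPER, BLACKWELL, 'chips'):.2f}x fewer")      # 4.00x fewer
print(f"power: {ratio(HOPPER, BLACKWELL, 'megawatts'):.2f}x less")   # 3.75x less
print(f"per chip: {watts_per_chip(HOPPER):.0f} W vs {watts_per_chip(BLACKWELL):.0f} W")
# per chip: 1875 W vs 2000 W
```

Under this rough model, each Blackwell chip draws slightly more power on average than a Hopper chip; the overall saving comes from needing four times fewer of them.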

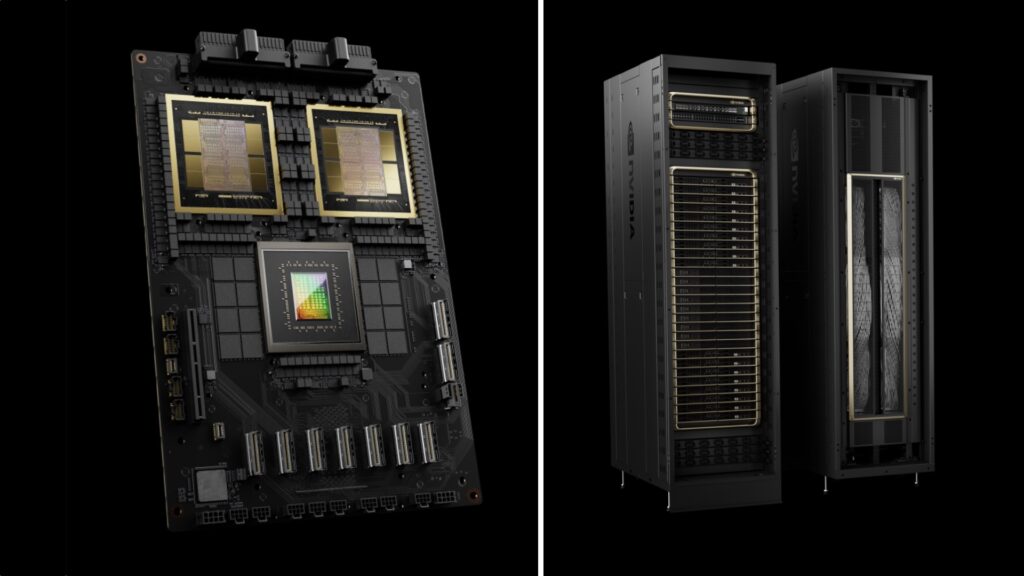

To win over businesses, Nvidia has also developed a superchip called GB200. It combines two Blackwell GPUs with a Grace CPU, and the heavyweights of generative AI will no doubt buy it in large numbers. The GB200 NVL72 server, also offered by Nvidia, packs 36 CPUs and 72 GPUs into a single rack, for a total of 80 petaflops. Larger configurations can combine up to 576 GPUs, for an overall computing power of 11.5 exaflops. Customers such as Amazon, Google, Microsoft and Oracle have already expressed interest.
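The GB200 figures fit together neatly if each superchip is counted as one CPU plus two GPUs. The sketch below assumes that the NVL72 holds 36 such superchips and that the 576-GPU configuration is built from identical NVL72 units; the article does not say which numeric precision the exaflops figure refers to, so the per-GPU value is only an average of that headline number.

```python
# Composition of a GB200 superchip, as described in the article.
CPUS_PER_SUPERCHIP = 1
GPUS_PER_SUPERCHIP = 2

def nvl72_parts(superchips: int = 36) -> tuple[int, int]:
    """Return (CPUs, GPUs) for a rack built from `superchips` GB200 units (assumed 36)."""
    return superchips * CPUS_PER_SUPERCHIP, superchips * GPUS_PER_SUPERCHIP

cpus, gpus = nvl72_parts()
units_for_576 = 576 // gpus          # assumes identical NVL72 building blocks
pflops_per_gpu = 11.5e3 / 576        # 11.5 exaflops = 11,500 petaflops

print(f"{cpus} CPUs, {gpus} GPUs per NVL72")            # 36 CPUs, 72 GPUs per NVL72
print(f"{units_for_576} NVL72 units for 576 GPUs")      # 8 NVL72 units for 576 GPUs
print(f"~{pflops_per_gpu:.1f} petaflops per GPU")       # ~20.0 petaflops per GPU
```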

Nvidia, the king of AI despite itself

Long popular, particularly in the video game sector, Nvidia has become the star of AI almost in spite of itself, without having publicly shown much interest in the field before the emergence of ChatGPT and its rivals. The new Blackwell architecture should allow Nvidia to keep growing, while its competitors are, for now, struggling to keep up. Some rely on local approaches that require less power, but Nvidia's chips become essential as soon as the workload gets too heavy.

With 208 billion transistors and a second-generation Transformer Engine capable of working with 4 bits per value (instead of 8), the B200 consolidates Nvidia's position. The company predicts that Blackwell will be the most successful launch in its history, with first deliveries expected by the end of the year.
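Why does dropping from 8 to 4 bits matter? Halving the bits per value halves the memory needed to store a model's weights, and lets more values move through the chip per cycle. The sketch below illustrates both ideas under stated assumptions: it counts only the weights of a 1.8-trillion-parameter model (ignoring activations and other overheads), and it rounds numbers to a 4-bit float grid with 2 exponent bits and 1 mantissa bit (the E2M1 layout from the OCP Microscaling specification). The single `scale` factor is a simplification; how Nvidia actually scales FP4 values is not described here.

```python
PARAMS = 1.8e12  # model size quoted earlier in the article

def weight_bytes(params: float, bits: int) -> float:
    """Bytes needed to store `params` values at `bits` bits each."""
    return params * bits / 8

# Non-negative values representable by an E2M1 4-bit float (sign stored separately).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]

def to_fp4(x: float, scale: float) -> float:
    """Round x / scale to the nearest E2M1 value, keep the sign, then rescale.
    Values beyond 6 * scale saturate to the largest grid value."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) / scale - g))
    return (mag if x >= 0 else -mag) * scale

print(f"8-bit weights: {weight_bytes(PARAMS, 8) / 1e12:.1f} TB")  # 1.8 TB
print(f"4-bit weights: {weight_bytes(PARAMS, 4) / 1e12:.1f} TB")  # 0.9 TB
print([to_fp4(v, scale=0.5) for v in (0.3, -1.2, 2.9)])           # [0.25, -1.0, 3.0]
```

The rounding step shows the trade-off: only 16 distinct values are available, so precision is lost, which is why low-bit formats are paired with careful scaling.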
