The common thread of research in artificial intelligence is to disclose the technical details of the software in research papers. For what ? So that other researchers can understand the programs and learn from them.

That tradition was shattered on Tuesday with the release of OpenAI’s GPT-4 program, the latest in a series of programs that form the heart of the hugely popular ChatGPT chatbot. In the technical report on GPT-4 published on Tuesday, as well as in the OpenAI blog post, the company indicates that it refrains from providing technical details due to concurrency and security considerations.

“Given the competitive landscape and security implications of large-scale models like GPT-4, this report does not include further details on architecture (including model size), hardware, compute training, dataset construction, training method, or the like,” it says.

The GPT-4 program is a complete enigma

The term “architecture” refers to the construction of an AI program, the way its artificial neurons are laid out, and is the essential element of any AI program. The “size” of a program is the number of neural “weights,” or parameters, it uses, a key element that distinguishes one program from another.

Without these details, the GPT-4 program is a complete enigma.

The document contains only two sentences describing in very general terms how the program is constructed.

The lack of disclosure is a break with the habits of AI researchers

“GPT-4 is a pretrained Transformer-like model for predicting the next word in a document, using both publicly available data (such as internet data) and data licensed from third-party vendors. The model was then refined using reinforcement learning from human feedback.”

Neither of these two sentences brings anything interesting.

The lack of disclosure is a break from the habits of most AI researchers. Other research labs often publish not only detailed technical information, but also the source code, so that other researchers can reproduce their results.

A well-disguised “pre-trained generative transformer”

The lack of disclosure is further at odds with OpenAI’s disclosure habits, however limited.

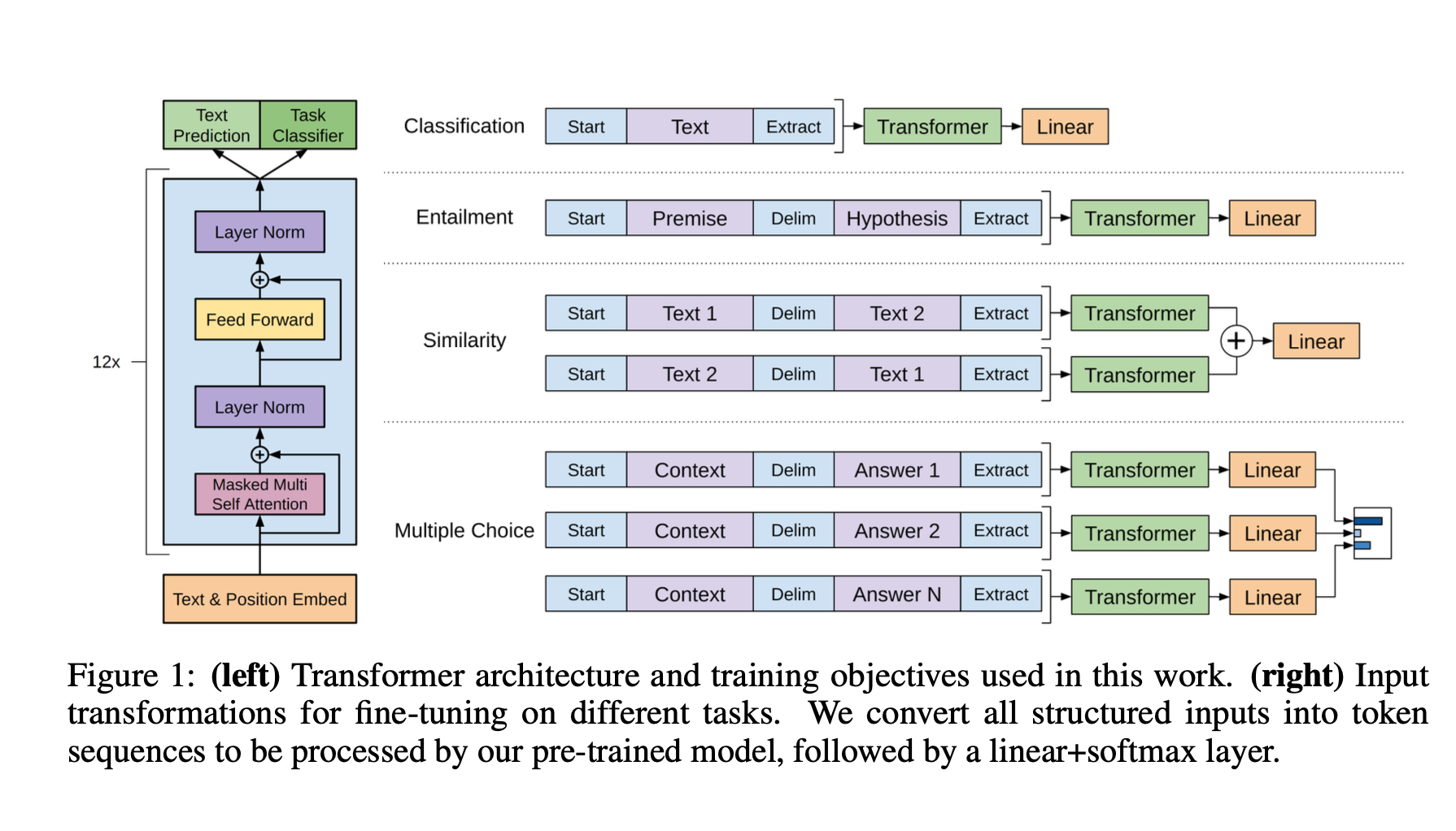

GPT-4, as the name suggests, is the fourth version of what is known as a “pre-trained generative transformer”, a program designed to manipulate human language. When the very first version of the program was presented in 2018, OpenAI did not offer source code. The company did, however, describe in detail how it composed the different functional parts of the GPT-1 architecture.

This technical disclosure allowed many researchers to reason about how the program worked, even if they could not reproduce its construction.

GPT-1 was explained in 2018 in a diagram that helped researchers understand the key properties of the program. No such description appears in the GPT-4 technical document. Picture: OpenAI.

Fewer and fewer details

With GPT-2, released on February 14, 2019, OpenAI not only did not offer source code, but also limited the distribution of the program. The company pointed out that the program’s capabilities were too extreme to risk its release allowing malicious parties to use it.

“Due to our concerns about malicious applications of the technology, we are not releasing the trained model,” OpenAI said.

Although they haven’t released the code or trained models, OpenAI researchers Alec Radford and his team described, in slightly less detail than the previous version, how they modified the first GPT.

GPT-4 Reference Document Marks Another Milestone in Non-Disclosure

In 2020, when OpenAI released GPT-3, Alec Radford and his team again refused to disclose the source code and provided no downloads for the program, instead resorting to a cloud service with a waiting list. They did so, they claim, both to limit the use of GPT-3 by bad actors and to make money by charging for access.

Despite this restriction, OpenAI provided a set of technical specifications that helped others understand how GPT-3 was a major step up from the previous two versions.

In this context, the GPT-4 reference document marks a new step in the absence of disclosure. The decision not only not to divulge the source code and the program, but also not to divulge the technical details which would allow outside researchers to guess the composition of the program, constitutes a new type of omission.

Three attribution pages

Although it lacks technical details, the GPT-4 document, 98 pages long, is nevertheless innovative. It breaks new ground by acknowledging the enormous resources mobilized to make the program work.

Instead of the usual first-page author citations, the technical report has three attribution pages at the end, citing hundreds of contributors, including all OpenAI members, all the way up to the finance department. .

The article also hints that OpenAI may offer more information at an unspecified date, and that it may still be committed to advancing science through transparency:

“We are committed to independent auditing of our technologies, and we’ve shared some initial steps and ideas in this area in the system map that accompanies this release. We plan to make further technical details available to other third parties who can advise us on how to balance the aforementioned competition and safety considerations against the scientific value of greater transparency.”

Source: ZDNet.com