Take a picture of a dish, a garment, or a product and find where you can get it near you: that is what Multisearch allows.

At its Google I/O conference, the Mountain View firm presented a powerful tool that should revolutionize internet search: Multisearch.

No need to describe it in text: a photo is enough!

With Google Multisearch, you can launch a search engine query not only with text but also with an image, thanks to the capabilities of Google Lens. The feature finally gives access to information about a subject that you cannot describe in words.

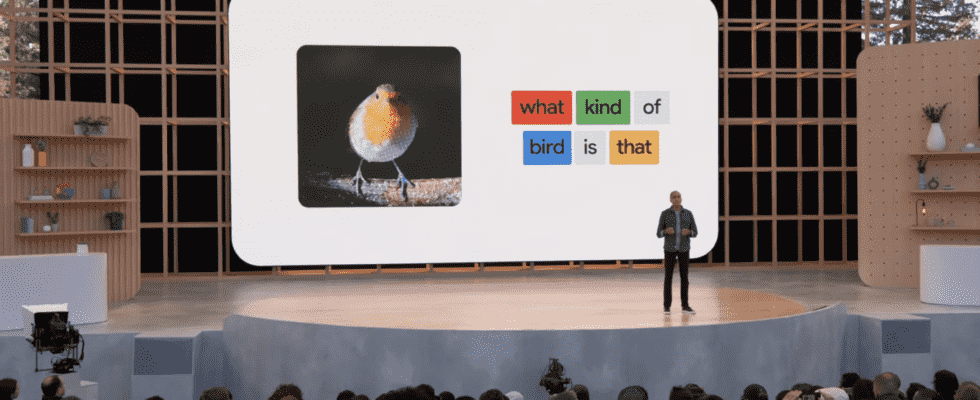

Several examples were given during the announcement. Take a photo of a bird, add the query “what kind of bird is it?”, and Google will answer your question. It also works for a dish whose name you don’t know: take a photo, and Google searches for it. If you are looking for a replacement part for furniture or an appliance, a photo will help you find what you need.

Multisearch allows adding text to the image query and offers local results

Multisearch does not stop there: Google can also help you find what you are looking for nearby. For a product or object, it shows you a store where you can buy it. If you want to eat at a restaurant that serves a specific dish that you only have a picture of, Google lists the restaurants in your neighborhood or city that offer it.

To use this option, open the Google app for Android or iOS and go to the Google Lens section. There, you can upload an image or a screenshot from the gallery, or take a photo directly with your device. Swiping up then lets you add a text query.

Initially, Multisearch and its location-based results feature will only be available in English. Support for other languages is planned for the future.

Source: Google I/O Conference
