JFrog security teams have discovered that around 100 machine learning (ML) models hosted on Hugging Face could install persistent backdoors on the devices that downloaded them.

The discovery marks a turning point in artificial intelligence research. JFrog data scientists reported this week that they had spotted a malicious ML model on the collaborative platform Hugging Face, capable of executing arbitrary code on any machine that loads the tampered file. Further investigation turned up around a hundred similar models in the Hugging Face repositories.

From silent arbitrary code execution to industrial espionage, it is only one step

Although the report published by JFrog does not call into question the security policy implemented by Hugging Face, it points to gaps that allowed dangerous ML models to slip under the radar, and it urges AI players, researchers and service providers alike, to be even more vigilant. At issue is the identification of one malicious model, followed by around a hundred others, capable of executing arbitrary code once the targeted machine loads a pickle file (a binary format used to store and share Python objects). The technique lets attackers create persistent backdoors on targeted devices and thereby take control of them remotely.
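To make the mechanism concrete, here is a minimal and deliberately harmless sketch of how loading a pickle can run code. It is not taken from the JFrog report: the `Payload` class and the `eval("6 * 7")` stand-in are assumptions chosen for illustration, where a real attacker would substitute something like a shell command.

```python
import pickle


class Payload:
    """Hypothetical stand-in for an object hidden inside a model file."""

    def __reduce__(self):
        # pickle stores this (callable, args) pair and invokes it on load.
        # Here the call is a harmless arithmetic expression; an attacker
        # would point it at a command launcher instead.
        return (eval, ("6 * 7",))


# Serializing only records *what to call*, nothing runs yet.
blob = pickle.dumps(Payload())

# Deserializing calls eval("6 * 7") and returns its result,
# not a Payload instance.
result = pickle.loads(blob)
print(result)
```

The victim only has to call `pickle.loads` (or a model loader built on it); no method of the loaded object needs to be invoked for the code to run.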

These intrusions are all the more insidious because victims have no indication that they have been compromised. Such a modus operandi could give attackers access to critical internal systems and pave the way for large-scale data breaches and even industrial espionage, affecting individual users and businesses around the world.

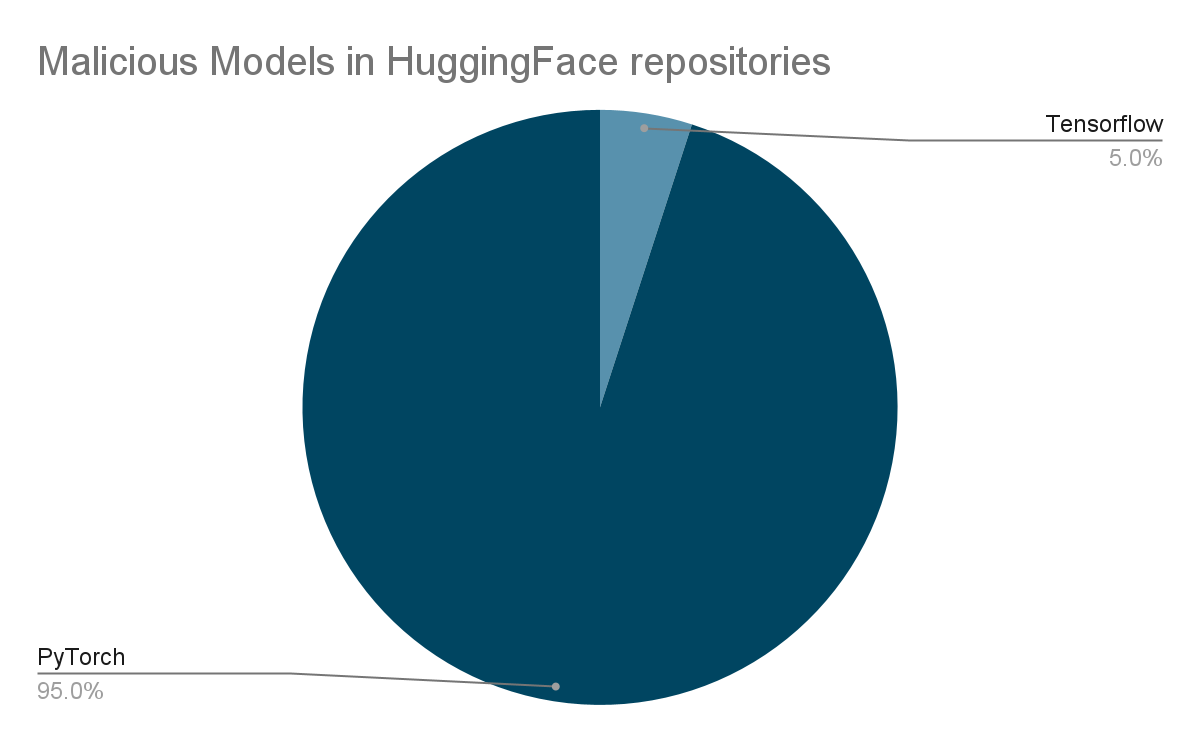

Around a hundred PyTorch and TensorFlow models affected

A heavyweight in open source artificial intelligence research, the collaborative platform Hugging Face had nevertheless put in place a series of security measures to prevent the spread of dangerous AI models, including the scanning of pickle files. These scans are designed to detect malicious code, unsafe deserialization, and sensitive information. However, when Hugging Face identifies suspicious pickle files, it neither blocks them nor restricts their download, leaving users to handle them at their own risk.
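As an illustration of what scanning a pickle can involve, the sketch below lists the modules a pickle would import without ever loading it, using the standard library's `pickletools`. It is not Hugging Face's or JFrog's actual scanner: the `SUSPICIOUS_MODULES` denylist, the function names, and the sample payload are all hypothetical, and the `STACK_GLOBAL` handling is a simple heuristic that a determined attacker could evade.

```python
import pickle
import pickletools

# Hypothetical denylist for illustration; real scanners are far more thorough.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "socket"}


def imported_globals(data: bytes):
    """Yield (module, name) pairs the pickle would import when loaded."""
    strings = []  # string arguments seen so far, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols carry "module name" as a single argument.
            module, name = arg.split(" ", 1)
            yield module, name
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Newer protocols push module and name as two strings first.
            yield strings[-2], strings[-1]
        elif isinstance(arg, str):
            strings.append(arg)


def find_suspicious(data: bytes):
    """Return imports whose top-level module is on the denylist."""
    return [
        (module, name)
        for module, name in imported_globals(data)
        if module.split(".")[0] in SUSPICIOUS_MODULES
    ]


class FakePayload:
    """Harmless sample that asks pickle to call eval on load."""

    def __reduce__(self):
        return (eval, ("6 * 7",))


malicious = pickle.dumps(FakePayload())
benign = pickle.dumps({"weights": [0.1, 0.2]})

print(find_suspicious(malicious))  # reports the import of eval
print(find_suspicious(benign))     # nothing to report
```

Because the scan only reads opcodes, the sample payload never executes. A warning produced this way is still only advisory: as the article notes, a flagged file can be downloaded and loaded anyway.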

TensorFlow Keras, the second most common model type on the platform, can also execute malicious code without the knowledge of those who download it. To complement the scanning tools developed by Hugging Face, the JFrog teams built their own analysis environment and assessed the prevalence of threats in PyTorch and TensorFlow models. Besides carrying a higher risk of arbitrary code execution, these model types are all the more dangerous because they are so popular.

The authors of these models have not been clearly identified, but there are indications that some of them may be artificial intelligence researchers. The finding leaves a bitter taste for JFrog, which criticizes the publication of working exploits and malicious code on a public platform when a fundamental principle of security research is to refrain from doing so.

Source: JFrog
