Why on earth did the robot R2-D2 never tell Luke Skywalker what had become of his father, given that he never lost his memory over the course of the Star Wars films?

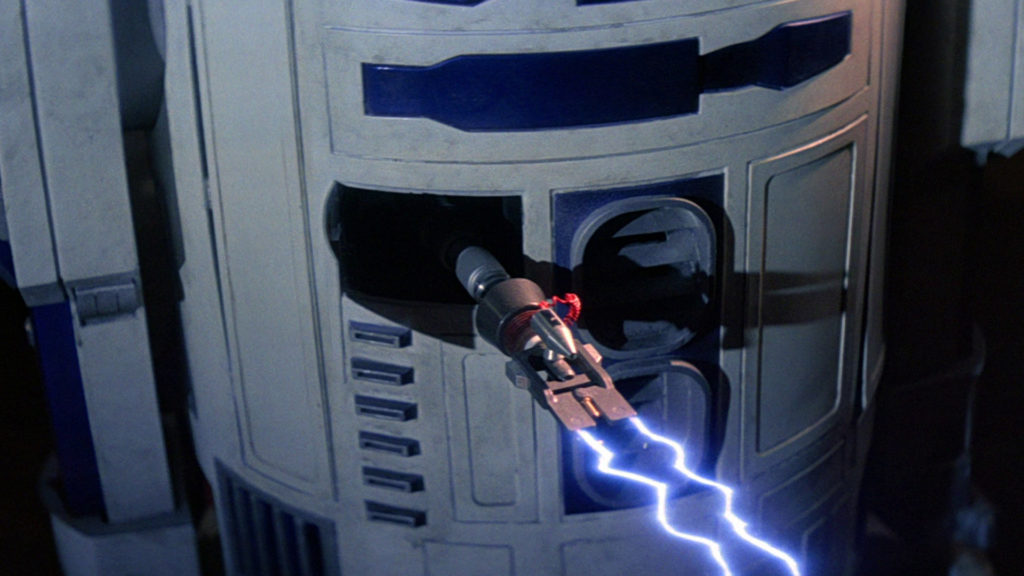

Starting from this paradox, this article shows the persistence of a human presence in artificial intelligence. The aim is to unroll and decipher an inconsistency – or a non-time – in the behavior of the robot R2-D2. Throughout the Star Wars saga, this robot played with human beings, thanks to the benevolence or the carelessness of the epic's writers, and was able to rely on a memory that was never erased.

But if R2-D2 does not have amnesia, why didn’t he warn Luke of the mortal danger his father represented?

What paradox are we talking about?

The great contemporary mythology that is the Star Wars saga draws us into a seductive inconsistency. It sets the Jedi knight Luke Skywalker against his father, Anakin Skywalker, who will become the evil and brilliant Darth Vader. It was in 1980, in The Empire Strikes Back, directed by Irvin Kershner, that the famous “I am your father” was proclaimed to the world. The scene features the father (Darth Vader) on one side and his son (Luke Skywalker) on the other, but it should also have involved the omnipresent, curvy robot R2-D2 (Reel2 / Dialogue2).

The troublesome question was asked by a little girl a few years ago.

“Since R2-D2 was there from the start of the story and his memory has never been erased, then why didn’t he just tell Luke, at some point, that Vader was his father?”

It was then that her father – who tells the story – “just stood there, dumbfounded, until she shrugs her shoulders and leaves”.

How can we explain that R2-D2 – who was lucky enough to be able to count on his memory, while that of his bipedal companion, the droid C-3PO, had been damaged – had the idea, the opportunity and the ability to hide such a secret? Why did this endearing and ubiquitous droid – whose former master is Obi-Wan Kenobi – not act as an AI would?

How can this paradox be tackled?

Many hypotheses are available on the web. They all come down to accepting the idea of a certain “humanity” in the robot. We will offer only six here:

- H1: R2-D2 did not want to break Luke’s heart.

- H2: R2-D2 did not immediately understand that Luke was the son of his former human companion, since the name “Skywalker” could be common on Tatooine.

- H3: R2-D2 wanted to let Luke discover his fate on his own.

- H4: R2-D2 refused to spoil Darth Vader’s famous line with an inelegant beep-beep!

- H5: R2-D2 is fascinated by the sulphurous Darth Vader, whom he favors over a son he ultimately finds bland.

- H6: Nobody asked him the question.

In all six cases, the robot absolutely does not act like a robot whose mission is to protect its “owner”.

Paradoxically, the most probable hypothesis is simply that the screenwriters forgot. This hypothesis is also the most encouraging: there is no artificial intelligence without (fallible) human intervention!

No intelligence without learning

Artificial intelligence, as we now understand it, learns by drawing more or less directly on the learning logic of human beings. It will nevertheless change our lives. It builds on the most classic cognitive functions: natural language, vision, memory, calculation, analysis, comparison, reproduction, translation, play, etc.

The intersection of artificial intelligence with cognitive psychology and the cognitive sciences explains the human part of AI – and therefore the human part of the robot R2-D2 – since AI draws its inspiration from biological intelligence and from the human mechanisms of knowledge development.

We can then note that learning by observation is common to humans and AI, and that the two are complementary. Supervised machine learning allows AI processes to learn and even improve thanks to errors and human feedback, enabling them to categorize input data better and thus to improve the accuracy and reliability of the output data. Unsupervised learning is also possible in AI: this is autonomous learning that does not need an error signal to correct itself. In the human case, we refer to Hebb’s law to understand certain cognitive functions such as memory. This law states that neurons that activate together connect to each other, forming connections that are reinforced over time.
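As an illustration of Hebb’s law, here is a minimal sketch in Python of learning without an error signal. It is not taken from the original article: the function names (`hebbian_update`, `train`), the learning rate and the toy activity patterns are all assumptions made for the example. Each connection simply grows in proportion to how often the two units it links are active at the same time.

```python
def hebbian_update(weights, activity, rate=0.1):
    """Return new weights where each link i-j grows with the co-activation of i and j.

    No target and no error signal are used: only the observed activity.
    Self-connections (i == j) are left unchanged.
    """
    n = len(activity)
    return [
        [
            weights[i][j] + (rate * activity[i] * activity[j] if i != j else 0.0)
            for j in range(n)
        ]
        for i in range(n)
    ]


def train(patterns, n_units, rate=0.1, epochs=10):
    """Present every pattern `epochs` times, starting from zero weights."""
    weights = [[0.0] * n_units for _ in range(n_units)]
    for _ in range(epochs):
        for pattern in patterns:
            weights = hebbian_update(weights, pattern, rate)
    return weights


# Hypothetical toy data: units 0 and 1 always fire together, unit 2 fires alone.
patterns = [[1, 1, 0], [0, 0, 1], [1, 1, 0]]
weights = train(patterns, n_units=3)

print("link 0-1:", weights[0][1])  # strengthened by repeated co-activation
print("link 0-2:", weights[0][2])  # never co-active, so never strengthened
```

In this sketch the link between units 0 and 1 is reinforced over time, while the link between units 0 and 2 stays at zero. Real models usually add a normalization or decay term so that weights do not grow without bound; that refinement is left out here for brevity.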

We can also mention reinforcement learning, which refers to learning by reward (the carrot) or by punishment (the stick). Here again, humans and intelligent machines are close. The reward is associated with an objective determined by the programmers, which leads the machine to multiply attempts and calculations in order to explore all the possibilities, achieve this goal and therefore obtain the reward. Finally, deep learning is also a type of learning. It is inspired by the functioning of the human brain and uses artificial neural networks coupled with algorithms. This approach has shed light on the power of expert systems. These neural interface technologies, coupled with the data collected on a massive scale by the platforms, are already deployed in many sectors such as the military, industry and health.
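The carrot-and-stick idea can be sketched with one of the simplest reinforcement learning settings, a multi-armed bandit explored with an epsilon-greedy rule. This is only an illustrative sketch and not a method described in the article: the function `run_bandit`, the reward probabilities, the exploration rate and the random seed are all hypothetical choices. The "programmer" fixes the reward, and the agent multiplies attempts to find out which action pays best.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a bandit whose arm a pays 1 with probability reward_probs[a].

    Returns the estimated value of each action and how often each was tried.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    n = len(reward_probs)
    estimates = [0.0] * n      # running average reward per action
    counts = [0] * n           # number of attempts per action

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                          # explore at random
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit best estimate

        # Carrot (reward 1.0) or no carrot (reward 0.0), as set by the environment.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0

        counts[action] += 1
        # Incremental update of the average reward observed for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


# Hypothetical environment: the third action is rewarded most often.
estimates, counts = run_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(e, 2) for e in estimates])
print("attempts per action:", counts)
```

With these assumed settings, one would expect the agent to end up choosing the most rewarded action far more often than the others, while still spending a small share of its attempts on exploration.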

No artifice without (a little) humanity

At this stage of AI applications, it is important to highlight four points that artificial and human intelligence have in common:

- It is advisable to rely on knowledge communicated by those around us or by the immediate environment

- It is important to take past experiences into account

- It is advisable to produce outputs that depend on the input data

- Cognitive biases should be questioned and identified

Finally, to come back to R2-D2 and his humanity, it seems important to point out that ethical constraints and challenges also apply to so-called intelligent machines. A machine cannot – should not? – be alone in having the capacity to decide on the death of a human. We believe that it is undesirable for a machine to be able to become a completely autonomous weapon.

Humans must therefore be careful not to exclude themselves from the decision-making processes of machines. They must be able to retain final control, especially when faced with the use of specific registers of language (emotions, hatred, humor, irony, provocation, Brassens’ poetry, etc.), which does not necessarily imply that the intelligent machine understands the meaning of the words, the sentence, the image or the context.

No autonomy without (a little) human control

The individual learns in order to adapt and survive. He learns his first words from his parents and those around him, in well-defined contexts, then tries to understand them and to recontextualize them. The intelligent machine learns in order to produce a good or a service. Since the first experiments in 1955, it has relied on a page that is never blank, but that resembles a vast database whose meaning it does not understand. Its purpose is to try to identify correlations, to characterize similarities and to try to provide answers in a language understandable by a human and/or by another machine. The understanding, decisions and feedback of artificial intelligence are purely statistical, logical and mathematical. This is also why the European Commission recently published the “harmonized rules” of its proposed Artificial Intelligence Act (AIA) to regulate the uses of AI and amend certain legislative acts of the Union.

However, in extreme situations, it is often difficult to explain the decisions made by an AI, because the black box that delivers the output data is not able to explain the logic it followed from the input data – except for logics such as Hoare’s. The paradox of an R2-D2 a little more fallible than the machine it is supposed to be thus carries good news: it is proof that a human is indeed behind this script!

Marc Bidan, University Professor, Management of Information Systems, Polytech Nantes, Historical Authors of The Conversation France, and Meriem Hizam, PhD student in information systems management, University of Nantes

This article is republished from The Conversation under a Creative Commons license. Read the original article.