Database giant Oracle wants to send a message: you will have a better experience with generative artificial intelligence if it does not involve moving your data out of Oracle’s data centers and databases…

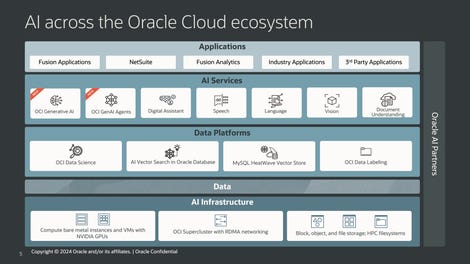

This week Oracle announced the general availability of OCI Generative AI Services, a managed service for AI first offered in beta in September. The company also unveiled two new offerings, still in beta: OCI Gen AI Agents and OCI Data Science AI Quick Actions.

The company explains that building an enterprise generative AI application on top of the data warehouse will be both more efficient in terms of data usage and more cost-effective than purchasing additional infrastructure.

Avoid Pinecone Temptation

The acronym “OCI” refers to the Oracle Cloud Infrastructure, i.e. all network and computing resources, as well as corresponding software such as the Oracle Autonomous Database, which the company uses to provide cloud services. This includes what Oracle calls “super-clusters” of Nvidia GPU chips that Oracle has spent billions of dollars on.

“Our Fusion applications, like ERP and HCM, contain exabytes of data – we’re bringing AI to it,” Erik Bergenholtz, Oracle vice president, told ZDNET. The idea, Bergenholtz explains, is to build generative AI use cases on top of Oracle’s database, middleware and Fusion application suite.

Bergenholtz acknowledges that companies might be tempted to purchase additional data management software, such as a vector database like Pinecone.

“We don’t want customers moving data”

“The downside (with this approach), of course, is that you have yet another piece of infrastructure that increases the cost of cloud computing, and you have to move and potentially synchronize data across your data warehouse, whether it’s applications or your Oracle database.”

Using OCI services, Bergenholtz adds, “just eliminates that barrier, that friction, for our customers.”

“We don’t want customers to move data, because the last thing they want is to move 500 terabytes just to get the benefit of generative AI,” said Steve Zivanic, vice president at Oracle.

Data used in OCI cannot be seen by other Oracle customers

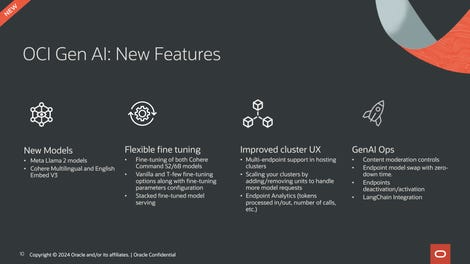

The OCI Generative AI service consists of pre-built large language models (LLMs), including Meta’s 70-billion-parameter open-source Llama 2 model. In addition to Meta, Oracle has partnered with the startup Cohere, in which Oracle is an investor. Cohere has three models that will be integrated into the Oracle service: Command, for common text functions; Summarize, for summarizing documents; and Embed, for multilingual functions.

Customer data used in OCI to train or refine models cannot be seen by other Oracle customers, Bergenholtz emphasized.

Since beta testing began, the service has gained new features, such as content moderation. “The most important thing is that we do this before submitting the prompts” to a language model, “and we also evaluate the response that comes out of the model,” Bergenholtz noted, “so we don’t wait until the very end, because then you have already borne the cost of processing this request.”
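The two-stage check Bergenholtz describes can be sketched in a few lines of Python. This is an illustration only, not Oracle's implementation: the blocklist, the `is_flagged` check and the `moderated_call` wrapper are hypothetical names, and a real service would rely on a trained classifier rather than keyword matching.

```python
from typing import Callable

# Hypothetical blocklist; a real service would use a trained classifier.
BLOCKED_TERMS = {"password", "ssn"}


def is_flagged(text: str) -> bool:
    """Toy moderation check: flag text containing any blocked term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def moderated_call(prompt: str, model: Callable[[str], str]) -> str:
    """Check the prompt before the model runs, then check the response."""
    if is_flagged(prompt):
        # Rejected up front: the model is never invoked, so no inference cost.
        return "[prompt rejected]"
    response = model(prompt)
    if is_flagged(response):
        return "[response withheld]"
    return response


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM; records each invocation."""
    calls.append(prompt)
    return f"echo: {prompt}"
```

Because the prompt is screened first, a rejected request never reaches `fake_model`, which is the cost saving the quote refers to.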

The service now integrates with the LangChain development framework.

OCI Gen AI Agents to link an LLM to other resources

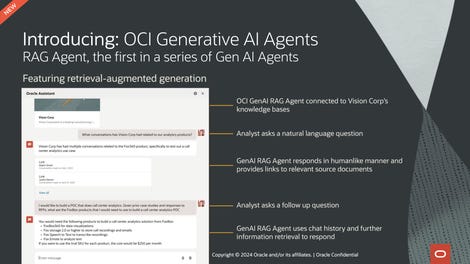

The OCI Gen AI Agents product is intended to link an LLM to other resources, such as a customer’s proprietary database. The first agent on offer is built for retrieval-augmented generation (RAG), an increasingly popular approach in generative AI that connects the language model to an external data source.

An LLM “may not be as useful as it could be if you’re not able to leverage the data you’re managing in your various Oracle applications or Oracle databases,” Mr. Bergenholtz said. Initially, the RAG agent service can leverage OCI’s OpenSearch offering, a managed service based on the OpenSearch open source platform. In the near future, the RAG offering will be extended with the ability to connect to AI Vector Search in Oracle Database 23c, as well as to the MySQL HeatWave Vector Store.
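To make the RAG idea concrete, here is a minimal sketch in Python. It assumes a tiny in-memory list of documents and ranks them by simple word overlap; the documents and the `retrieve` and `build_prompt` helpers are hypothetical and stand in for a real search backend such as OpenSearch or a vector store.

```python
import re

# Hypothetical documents standing in for data held in an enterprise store.
DOCUMENTS = [
    "Expense reports must be filed within 30 days of purchase.",
    "Laptops are refreshed every three years.",
    "Health insurance enrollment opens every November.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question and keep the top k."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Prepend retrieved context so the model answers from the source data."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


prompt = build_prompt("When must expense reports be filed?", DOCUMENTS)
```

The resulting `prompt` is what would be sent to the language model: the question plus the most relevant passage, so the answer is grounded in the customer's own data rather than in the model's training set alone.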

The AI agent service begins beta testing this month.

OCI Data Science Quick Actions: a no-code approach to deploying and refining language models

The OCI Data Science Quick Actions offering emerged from Oracle’s 2018 acquisition of the startup DataScience.com, which brought Oracle expertise in Jupyter Notebooks and statistical techniques for machine learning.

The benefit of Quick Actions is a no-code approach to deploying and refining language models. Several frameworks are available for fine-tuning, including distributed training with PyTorch, Hugging Face Accelerate and Microsoft’s DeepSpeed. Mr. Bergenholtz also highlighted the use of object and file storage to help organize model “weights,” the parameters that take up a lot of memory and give a neural network its shape.

Quick Actions will begin beta testing next month.

Use cases: HR, health insurance and customer support

The most common use case Oracle cites for the OCI Gen AI service is providing automated responses to HR policy questions. “The most common question is how many vacation days I have left,” says Bergenholtz. “You need two pieces of data, the company policy and the number of vacation days you used, and then you need to calculate the answer based on those two things.”

“With RAG you can very easily answer this question because the RAG system knows your identity and company policy.” Questions about health insurance benefits are another growing use case, according to Bergenholtz.
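The vacation-day example boils down to combining two pieces of data. The short Python sketch below illustrates that calculation; the policy value, the employee record and the `remaining_vacation_days` function are hypothetical, and in practice both inputs would be retrieved from HR systems based on the caller's identity.

```python
from dataclasses import dataclass


@dataclass
class LeaveRecord:
    """Hypothetical per-employee record, looked up by the caller's identity."""
    employee_id: str
    days_used: int


# Hypothetical policy value and records; real data would come from HR systems.
POLICY_ANNUAL_DAYS = 25
RECORDS = {"e42": LeaveRecord("e42", days_used=9)}


def remaining_vacation_days(employee_id: str) -> int:
    """Combine the policy allowance with the employee's usage."""
    record = RECORDS[employee_id]
    return max(POLICY_ANNUAL_DAYS - record.days_used, 0)


print(remaining_vacation_days("e42"))  # prints 16
```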

Another common application is customer support. For customer support that uses RAG to tap into customer data, the language model “can easily summarize what case you called about, what the current situation is, and provide a script of next steps to recommend or walk through with the customer to be able to provide a much richer user experience,” Bergenholtz said.

Vector search capabilities for those who don’t want to put their data in the hands of AI

What about customers who don’t want this new GenAI technology touching their valuable data?

“There are several ways to do this,” Mr. Zivanic said. By integrating features like vector search into the Oracle database and vector storage into HeatWave, “we’re bringing the technology directly to them,” he said.

There will be, he concedes, “organizations that will carry out various pilot projects with Gen AI to familiarize themselves with the technology.”

“But I think over time, as generative AI becomes more prevalent, the power of a converged database where everything is merged into a single database will prove advantageous over subscribing to several databases and looking for an answer.”

Source: “ZDNet.com”