On April 12, the American company Databricks released Dolly 2.0, a free, open-source language model. The ambition is clear: to offer a more ethical and better AI than ChatGPT.

Since the beginning of the year, one of the biggest trends in tech has undeniably been ChatGPT and, more broadly, large language models (LLMs) such as Google Bard and Claude. But all of these initiatives are, for now, proprietary, and so are the models behind them. Their source code is not public, and to the general public they remain more or less black boxes.

Databricks, an American company that presents itself as rooted in open source and research, intends to change that. On April 12 it published Dolly 2.0, its own LLM, which aims to compete with ChatGPT.

Dolly 2.0: a “ChatGPT” finally open source

Strictly speaking, we should talk about an open-source alternative to GPT-4, since Dolly 2.0 is a language model and not a conversational agent (which is what ChatGPT is). The release of Dolly 2.0 comes only two weeks after the first version. For Databricks, it is “the first open source, instruction-following LLM, fine-tuned on a human-generated instruction dataset licensed for research and commercial use.”

It is a language model with 12 billion parameters. At first glance, one might assume it is far less capable than GPT-3.5, which uses 175 billion parameters, or GPT-4, which reportedly uses 100 trillion. But tests carried out on GPT-4 suggest that “performance” does not scale linearly with the number of parameters. Training methods and data sources also play a role. Databricks explains that its dataset was “obtained by crowdsourcing among employees.”

Dolly 2.0 is open source and free to use. Databricks adds that this includes the “training code, dataset and model weights, all suitable for commercial use. This means that any organization can build, own, and customize powerful LLMs that can talk to people, without paying for API access or sharing data with third parties.”

Ironically, Databricks acknowledges that Dolly 1.0 was trained “for $30” on a dataset that the Stanford Alpaca team created using the OpenAI API. But as the Alpaca team itself pointed out, OpenAI’s terms of service prohibit using its output to build a model that competes with OpenAI. By training Dolly 2.0 on data that does not come from OpenAI, Databricks can let users put the model to commercial use.

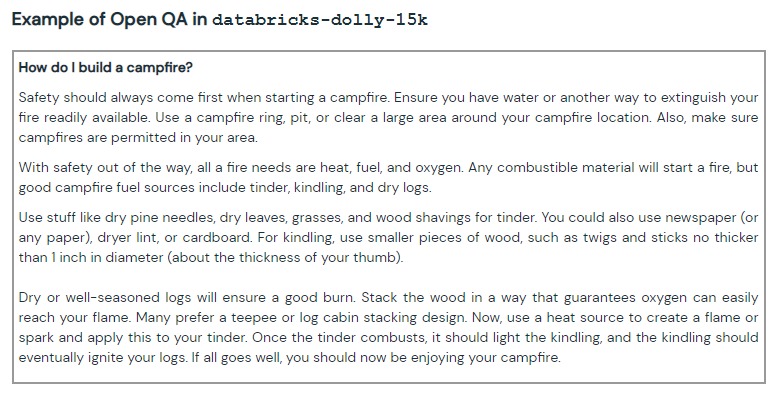

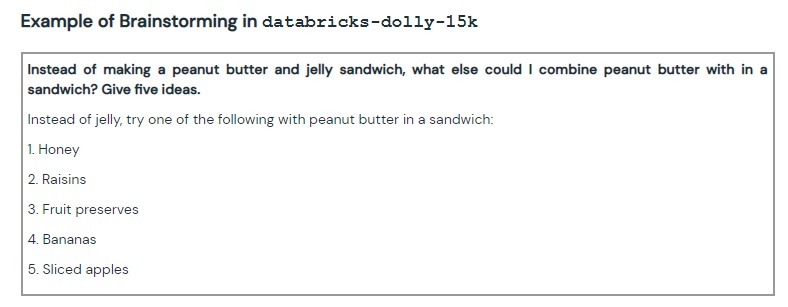

This ethical ambition starts with how the model was built. Databricks says it paid close attention to how its data was produced, whereas an investigation by Time revealed last January that OpenAI had outsourced work in Kenya to a company that exploited its workers. Databricks also released its training data: a set of 15,000 request/response pairs written by some 5,000 employees. However, the way this data was collected is debatable.

The company explains that its employees “were all very busy and had full-time jobs, so we needed to incentivize them to do this.” To that end, it organized a contest “where the top 20 labelers would get a big award.” One could object that this work was not scheduled during employees’ official working hours, even though participation was voluntary. The labelers wrote questions of several types (open, broad, precise, controversial, and so on) along with the answers used to train Dolly 2.0.
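For readers who want to inspect data of this kind, here is a minimal Python sketch. It assumes the dataset is distributed as JSON Lines (one JSON object per line), as in the databricks-dolly-15k release, with instruction, context, response and category fields. The two records below are invented for illustration, and `load_records` and `count_by_category` are hypothetical helper names, not part of any Databricks tooling.

```python
import json
from collections import Counter

# Two invented records in the assumed shape of the released dataset
# (fields: instruction, context, response, category).
SAMPLE_JSONL = """\
{"instruction": "Suggest three names for a bakery.", "context": "", "response": "Crumb & Co., Rise Up, The Daily Loaf.", "category": "brainstorming"}
{"instruction": "Who founded the company?", "context": "Acme was founded in 1999 by Jane Doe.", "response": "Jane Doe.", "category": "closed_qa"}
"""


def load_records(text):
    """Parse JSON Lines text into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def count_by_category(records):
    """Tally how many request/response pairs fall into each category."""
    return Counter(record["category"] for record in records)


records = load_records(SAMPLE_JSONL)
print(count_by_category(records))
```

To look at the real data, one would read the downloaded file and pass its text to `load_records` in place of `SAMPLE_JSONL`.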

How Dolly 2.0 Could Really Compete With OpenAI and ChatGPT

What makes the Databricks blog post interesting is what it leaves unsaid. While it acknowledges that its model is “ChatGPT-like,” it never criticizes ChatGPT directly. But a careful reading shows that Dolly 2.0’s selling points are designed to address the main criticisms leveled at ChatGPT.

According to the company, the training prompts are “designed to represent a wide range of behaviors, from brainstorming and content generation to information extraction and summarization.” This is one way to guard against the excesses of this type of LLM, at a time when GPT-3.5 has been criticized for giving sordid advice to young users.

By going open source, Databricks takes the opposite stance to OpenAI, whose models are proprietary. Yet, as Numerama pointed out, OpenAI originally worked on open-source projects. The company then changed course, as Ilya Sutskever, one of its co-founders, explained in an interview with The Verge: he said he had realized how powerful AI was and could become, and that putting it in everyone’s hands would therefore be a very bad idea. For Databricks, perhaps it is precisely making AI accessible that would help guard against potential abuses, although the company did not raise this point.

Because Dolly can run on your own servers, it avoids the data-protection problem that ChatGPT poses. A few weeks ago, Italy blocked OpenAI’s tool for precisely these reasons. Samsung, for its part, discovered that some of its employees had entrusted confidential data to ChatGPT.

What will this Databricks AI be used for?

Judging by Dolly 2.0’s answers, the model works well, but it is far from being as “powerful” as ChatGPT. Databricks acknowledges as much: “as a technical and research artifact, we don’t expect Dolly to be state-of-the-art in terms of efficiency.”

What could come out of Dolly 2.0 is more interesting: “we believe that Dolly and the open source dataset will serve as the basis for a wealth of further work, which can be used to bootstrap even more powerful language models.” That is roughly what happened with LLaMA, Meta’s LLM. Only partially open source, its model weights leaked online, which led to the emergence of several tools; one developer even managed to run the model on his own computer. Users should nevertheless be wary of tools built on it, which could contain malware.

One might also suspect that Databricks’ budget does not allow it to train its LLM for long enough. Training or running an AI at this scale is very expensive: according to one Google executive, switching Google Search to AI would multiply the cost of each search by ten. The servers running generative AI are multiplying so fast that they could trigger another graphics card shortage.
