Image: Meta.

Thanks to generative artificial intelligence, it has become very simple to create realistic, even convincing, images that look as if they had been taken by a human being. Telling real images apart from AI-generated ones is therefore becoming more and more difficult, which encourages the spread of fake news.

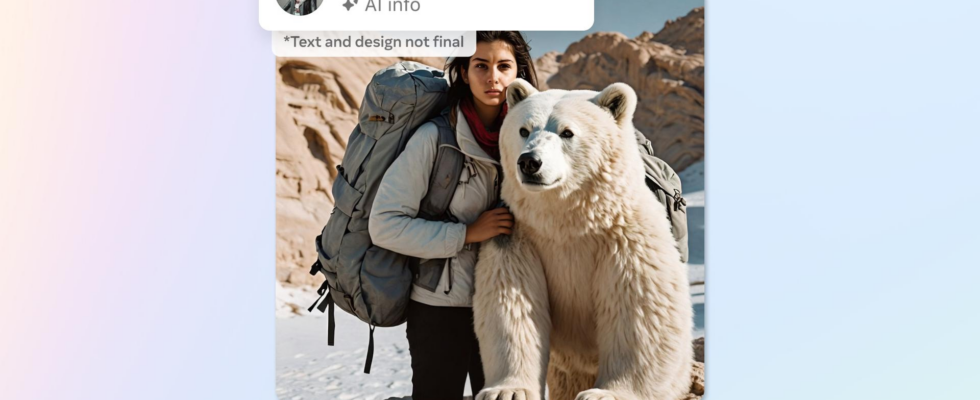

It is in this context that Meta announced several initiatives on Tuesday to combat disinformation. On its blog, the company said that in the coming months, labels on its networks – Instagram, Facebook and Threads – would indicate when an image was generated by AI.

Fighting misinformation

Meta is currently working with AI industry partners to define common technical standards that signal when content was created using generative AI. Thanks to these signals, the social media giant will then be able to automatically generate labels – understandable in all languages – on content posted on its platforms. As the image above shows, the labels will indicate that an image was created by AI.

“As the line between human-created and synthetic content becomes blurred, there is a growing desire to know where the line lies,” said Nick Clegg, president of global affairs at Meta. “So it’s important for us to help people know if the photorealistic content they’re seeing was created using AI.”

Meta’s new tagging feature would work like the one already implemented by TikTok in September: on the Chinese social network, tags appear on videos containing realistic images, sounds or videos generated by AI.

Metadata and labeling

Every image generated by Meta AI, the brand’s AI image generator, already includes visible markers, invisible watermarks and IPTC metadata. The company then puts an “Imagined with AI” label on these images to indicate that they were artificially created.

Meta also said it was working on cutting-edge tools capable of detecting invisible markers, such as IPTC metadata, as other companies – OpenAI, Google, Microsoft, Adobe, Midjourney and Shutterstock – add them to the images produced by their AI image generators. The goal is to apply a similar label to images in which this type of marker is detected.

This approach of course leaves room for abuse: if a company offers an AI image generator that produces images without this metadata, Meta will have no way of detecting that the image was created by AI. Even so, despite these flaws, the company appears to be moving in the right direction.
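To make the idea concrete, here is a minimal Python sketch of a metadata-based check. It is not Meta's tooling, whose details are not public; it simply assumes the generator embedded an uncompressed XMP packet carrying the IPTC "Digital Source Type" value `trainedAlgorithmicMedia`, the IPTC vocabulary term for media created by a generative model. The function names and the naive byte scan are illustrative assumptions; a real pipeline would use a proper metadata parser and would also look for invisible watermarks.

```python
# Assumption: the AI generator wrote an XMP packet containing the IPTC
# Digital Source Type term for media created by a trained generative model.
AI_SOURCE_TYPE = b"digitalsourcetype/trainedAlgorithmicMedia"


def has_iptc_ai_marker(image_bytes: bytes) -> bool:
    """Return True if an embedded XMP packet declares the AI source type.

    Naive byte scan (hypothetical helper): it only finds plain,
    uncompressed XMP packets and ignores every other kind of signal.
    """
    start = image_bytes.find(b"<x:xmpmeta")
    if start == -1:
        return False  # no XMP packet at all
    end = image_bytes.find(b"</x:xmpmeta>", start)
    if end == -1:
        return False  # truncated or malformed packet
    return AI_SOURCE_TYPE in image_bytes[start:end]


def label_for(image_bytes: bytes):
    """Return the label text to display, or None if no marker was found."""
    return "Imagined with AI" if has_iptc_ai_marker(image_bytes) else None


# Stand-in byte strings, not real image files.
tagged = (
    b"\xff\xd8"
    b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
    b'<rdf:Description Iptc4xmpExt:DigitalSourceType='
    b'"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"/>'
    b"</x:xmpmeta>"
    b"\xff\xd9"
)
untagged = b"\xff\xd8\xff\xd9"

print(label_for(tagged))
print(label_for(untagged))
```

The second call illustrates the limitation: an image that carries no marker gets no label, whether or not an AI produced it.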

Furthermore, Meta warns that while many companies are working to include signals in the images generated by their AIs, the same is not true for AI tools that generate sound and video – at least not on the same scale. For the moment, the company therefore cannot guarantee that it will detect, and therefore label, this type of content.

Mandatory voluntary labeling

Until it can detect such content automatically, Meta has added a feature allowing users to indicate that they used AI to generate an image. The social network then adds a label such as “Imagined with AI” to the image.

“We will require users to use this disclosure and label tool when posting organic content with photorealistic video or realistic audio that has been digitally created or edited. We can apply sanctions if they do not do so,” stresses Nick Clegg.

The executive adds that a more visible label may be added by the social network if it determines that a modified or artificially generated image, video or sound “presents a particularly high risk of misleading the public on an important subject”.

A tool of political influence

This last point is crucial in what is an exceptional election year: as a reminder, more than one in two people on the planet will be called to vote this year. France, in particular, will be watching an election that takes place across the Atlantic in a few months: the presidential election in the United States.

As advances in artificial intelligence, particularly generative AI, make false information easier to create and spread, the technology's influence on public opinion – and therefore potentially on elections – is significant. It could well undermine the democratic process.

This is why some companies, including OpenAI, have already taken steps to put safeguards in place, particularly ahead of the US presidential election.

Source: ZDNet.com