Yes, generative AI can perform, but many cases now show errors, hallucinations, and flaws that malicious actors can exploit. To alleviate this problem, Microsoft is unveiling a tool to identify risks related to generative AI systems.

On Thursday, Microsoft released its Python Risk Identification Toolkit for generative AI (PyRIT), a tool that Microsoft’s AI Red Team uses internally to check for risks in its generative AI systems, including Copilot.

Over the past year, Microsoft has Red Teamed more than 60 generative AI systems, learning that the attack process for these systems differs greatly from traditional AI or traditional software code.

Discrepancies in results that can be produced from the same input

The process is different because Microsoft must consider the usual security risks, in addition to responsible AI risks, such as ensuring that harmful content cannot be generated intentionally, or that models do not produce no misinformation.

Additionally, AI genetic models vary widely in terms of architecture. And there are variances in the results that can be produced from the same input, making it difficult to have a streamlined process that fits all models.

Therefore, manually searching for all these different risks ends up being a long, tedious and slow process. Microsoft shares that automation can help system testers by identifying risk areas that need more attention and automating routine tasks, and that’s where PyRIT comes into play.

“Generate several thousand malicious prompts”

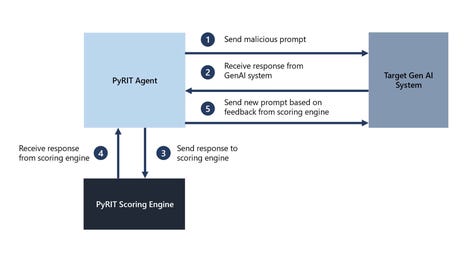

The toolkit, “tested by Microsoft’s AI team”, sends a malicious prompt to the generative AI system and, when it receives a response, its rating agent gives the system a score, which is used to send a new prompt based on previous grading comments.

Microsoft

Microsoft says the biggest benefit of PyRIT is that it helps Microsoft’s testing teams be more efficient, significantly reducing the time it takes to complete a task.

“For example, in one of our red teaming exercises on a Copilot system, we were able to choose a harm category, generate several thousand malicious prompts, and use PyRIT’s scoring engine to evaluate the results of the Copilot system, all in hours instead of weeks,” Microsoft said.

The toolkit is available today and includes demonstrations to help users become familiar with the tool. Microsoft is also hosting a webinar on PyRIT that shows how to use it in red teaming generative AI systems. You can register on the Microsoft website.

Source: “ZDNet.com”