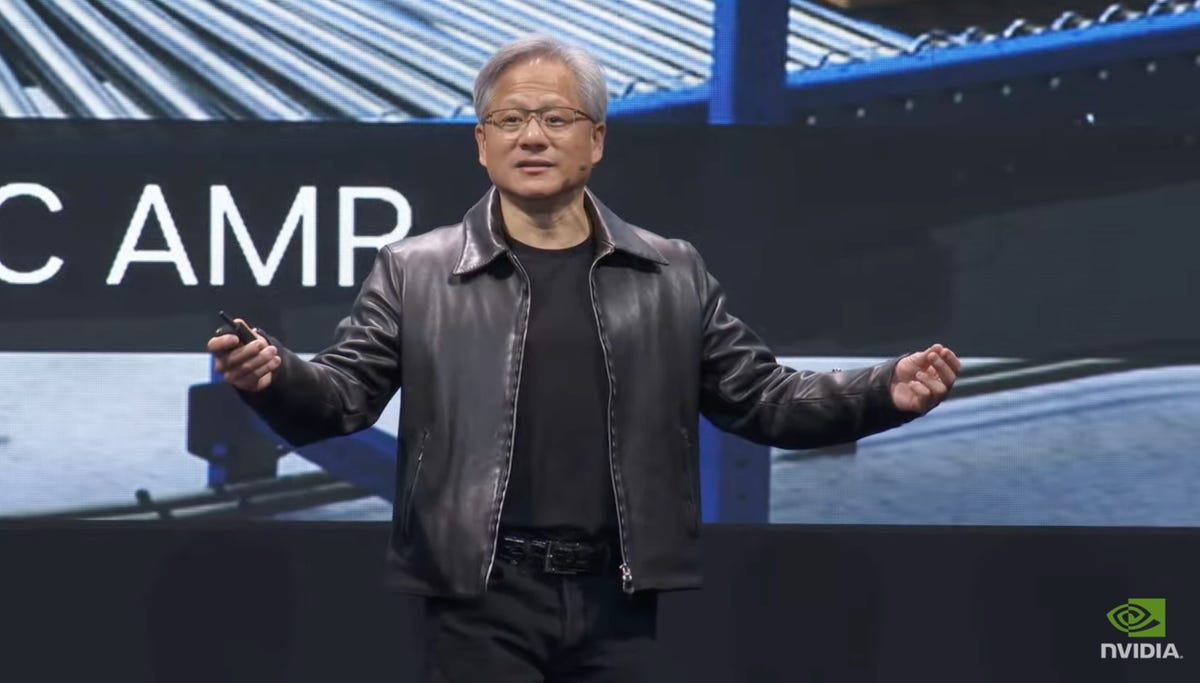

Jensen Huang, CEO of Nvidia, introduced the first iteration of Spectrum-X, the Spectrum-4 chip, with one hundred billion transistors in a 90 millimeter by 90 millimeter die. Nvidia

Nvidia CEO Jensen Huang at the Computex trade show opening conference on Monday in Taipei, Taiwan, unveiled a slew of new products, including a new type of Ethernet switch designed to carry large volumes of data for artificial intelligence tasks.

“How do we introduce a new Ethernet, which is backwards compatible with everything else, to turn every data center into a generative artificial intelligence data center?” Huang asked in his keynote. “For the first time, we are bringing the capabilities of high-performance computing to the Ethernet market,” Huang said.

According to Nvidia, Spectrum-X, the name given to this family of Ethernet products, is “the world’s first high-performance Ethernet for AI.” One of the key features of the technology is that it “doesn’t drop packets,” said Gilad Shainer, senior vice president of networking.

Spectrum-4, the first iteration of Spectrum-X

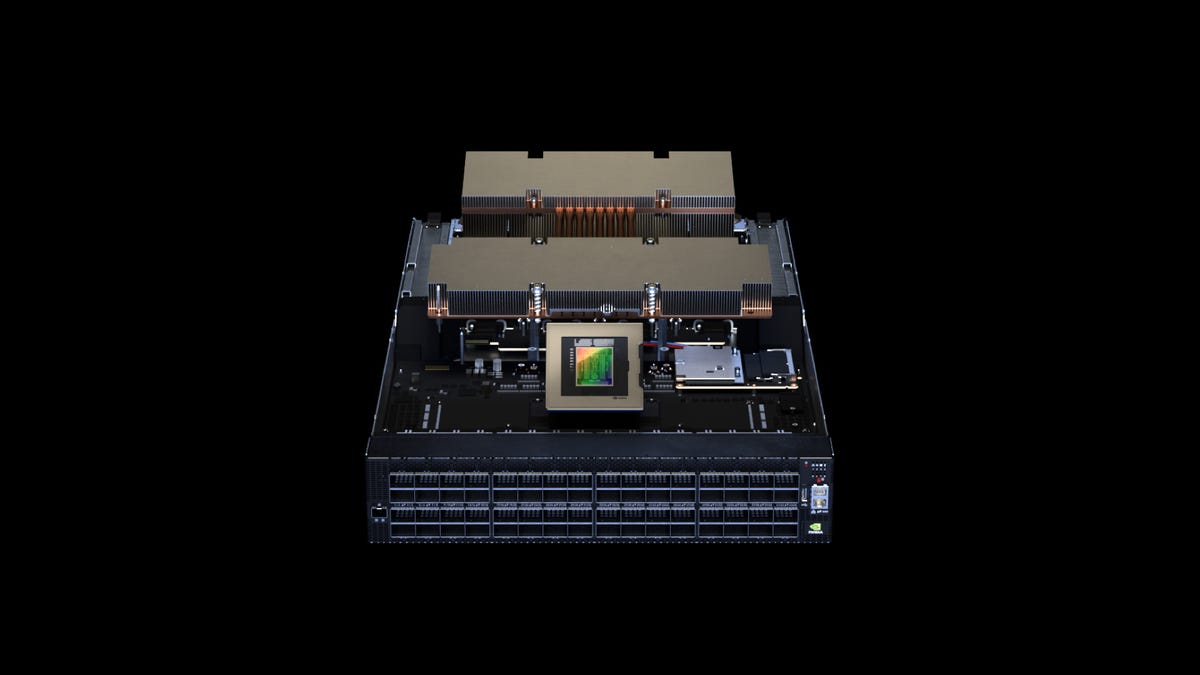

The first iteration of Spectrum-X is Spectrum-4, said Nvidia, which called it “the world’s first 51 Tb/sec Ethernet switch designed specifically for artificial intelligence networks.” The switch works in conjunction with Nvidia’s BlueField data processing unit (DPU) chips, which handle data fetching and queuing, and with Nvidia’s fiber-optic transceivers. The switch can route 128 400-gigabit Ethernet ports, or 64 800-gigabit ports, across the network, the company says.
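The two port configurations quoted above can be checked against the headline throughput with some quick arithmetic. The following is a minimal sketch, assuming the headline figure refers to aggregate port bandwidth in terabits per second and that decimal units apply (1 Tb = 1,000 Gb); the function and variable names are illustrative and do not come from Nvidia.

```python
# Back-of-the-envelope check of the port figures quoted in the article.
# Assumption: decimal (SI) units, i.e. 1 terabit = 1,000 gigabits.
GBITS_PER_TBIT = 1000


def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Return the summed port bandwidth in terabits per second."""
    return ports * gbps_per_port / GBITS_PER_TBIT


# The two configurations mentioned in the article.
configs = {
    "128 x 400GbE": (128, 400),
    "64 x 800GbE": (64, 800),
}

totals = {name: aggregate_tbps(*cfg) for name, cfg in configs.items()}

for name, total in totals.items():
    print(f"{name}: {total} Tb/s")
```

Under these assumptions both configurations add up to 51.2 Tb/s, which matches the rounded "51" in Nvidia's description.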

Huang held up the silver Spectrum-4 Ethernet switch chip on stage, saying it was “gigantic,” consisting of one hundred billion transistors on a 90-millimeter-by-90-millimeter die built with “4N” process technology from Taiwan Semiconductor Manufacturing. The chip runs at 500 watts, Huang said.

Spectrum-4 is the first in a line of Spectrum-X chips that are a new type of Ethernet designed to provide lossless packet transmission for artificial intelligence workloads. Nvidia

The Spectrum-X family is designed to address the bifurcation of data centers into two types. The first is what Nvidia calls “AI factories,” facilities that cost hundreds of millions of dollars, house the most powerful GPUs, are based on Nvidia’s NVLink and InfiniBand, and are used for AI training. The second is the AI cloud, a multi-tenant, Ethernet-based data center that focuses on delivering predictions to AI consumers. It is this second type that Spectrum-X is meant to serve.

Spectrum-X, Shainer said, is able to “distribute traffic across the network in the best possible way,” using “a new congestion control mechanism” that prevents the pile-up of packets that can occur in the buffers of network routers. “We are using new telemetry to understand latencies across the network to identify hotspots, to keep the network free of congestion,” he said. Nvidia said “the world’s leading hyperscalers are adopting NVIDIA Spectrum-X.”

As a demonstration, Nvidia is building a test computer in its offices in Israel, called Israel-1, a “generative AI supercomputer” that uses Dell PowerEdge XE9680 servers equipped with H100 GPUs and runs data through Spectrum-4.

DGX, a computer for AI

In addition to this new Ethernet technology, Huang introduced a new model in the “DGX” series of AI computers, the DGX GH200, which the company describes as “a new class of large-memory AI supercomputer for giant generative AI models”.

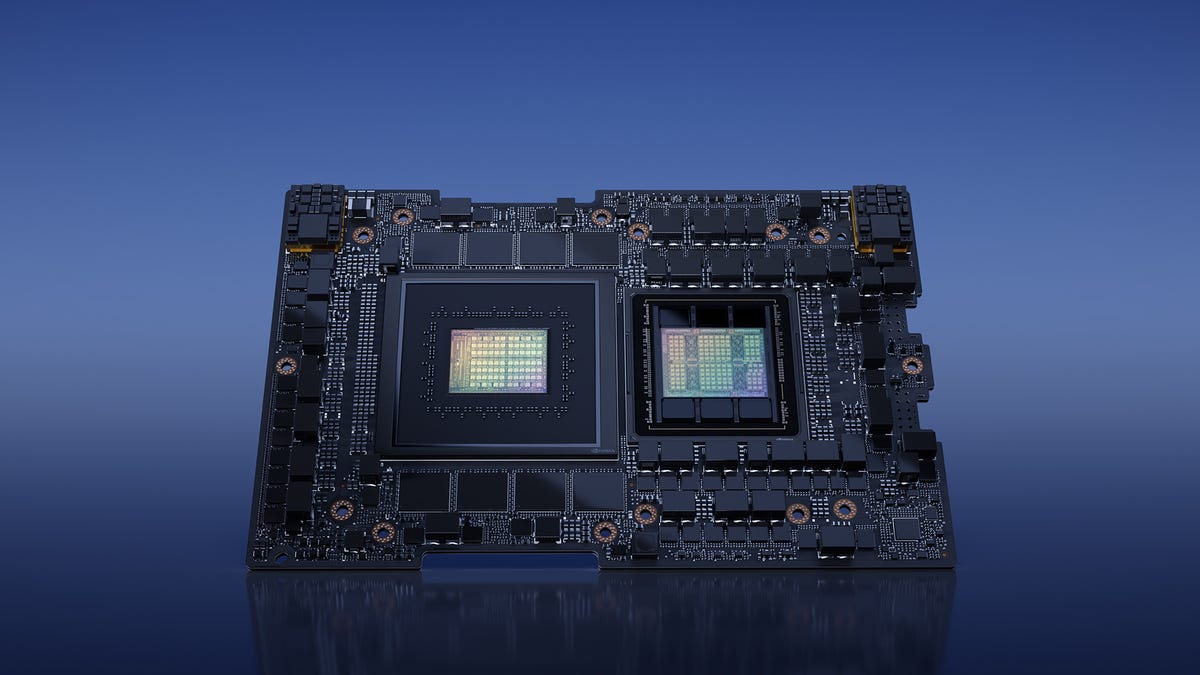

Generative AI refers to programs that produce more than a score: their output may be text, images, or other artifacts, as with OpenAI’s ChatGPT bot. The GH200 is the first system to ship with what the company calls its “superchip,” the Grace Hopper board, which combines on a single circuit board a Hopper GPU and the Grace CPU, a processor based on the ARM instruction set and intended to compete with x86 processors from Intel and Advanced Micro Devices (AMD).

Nvidia’s Grace Hopper “superchip”, a board containing the Grace processor (left) and the Hopper GPU, is now in full production, the company said. Nvidia

Grace Hopper’s first iteration, the GH200, is “in full production,” Huang said. Nvidia said “global hyperscalers and supercomputing centers in Europe and the United States are among the customers who will have access to GH200-powered systems.”

According to Nvidia, the DGX GH200 combines 256 superchips to achieve a combined power of 1 exaflops – ten to the power of 18, or a billion billion floating point operations per second – using 144 terabytes of shared memory. According to Nvidia, the computer is 500 times faster than the original DGX A100 machine released in 2020.
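To put the system-level figures above in per-chip terms, they can be divided across the 256 superchips. This is a rough sketch only: it assumes compute and memory are spread evenly across the superchips, the article does not say at what numeric precision the exaflops figure is measured, and it does not say whether "terabytes" is meant in decimal or binary units, so both are shown. All names are illustrative.

```python
# Rough per-superchip breakdown of the DGX GH200 figures quoted above.
# Assumption: compute and memory are divided evenly across all superchips.
SUPERCHIPS = 256
TOTAL_FLOPS = 10**18        # 1 exaflops = ten to the power of 18 operations/s
SHARED_MEMORY_TB = 144      # terabytes of shared memory, as quoted

# Compute per superchip, expressed in petaflops (10**15 operations/s).
pflops_per_chip = TOTAL_FLOPS / SUPERCHIPS / 10**15

# Memory per superchip under two unit conventions (assumption: the article
# does not say which one applies).
mem_gb_decimal = SHARED_MEMORY_TB * 1000 / SUPERCHIPS   # 1 TB = 1000 GB
mem_gb_binary = SHARED_MEMORY_TB * 1024 / SUPERCHIPS    # 1 TB = 1024 GB

print(f"Compute per superchip: {pflops_per_chip} petaflops")
print(f"Memory per superchip (decimal): {mem_gb_decimal} GB")
print(f"Memory per superchip (binary):  {mem_gb_binary} GB")
```

Under these assumptions each superchip accounts for roughly 3.9 petaflops and between about 560 and 576 GB of the shared memory pool.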

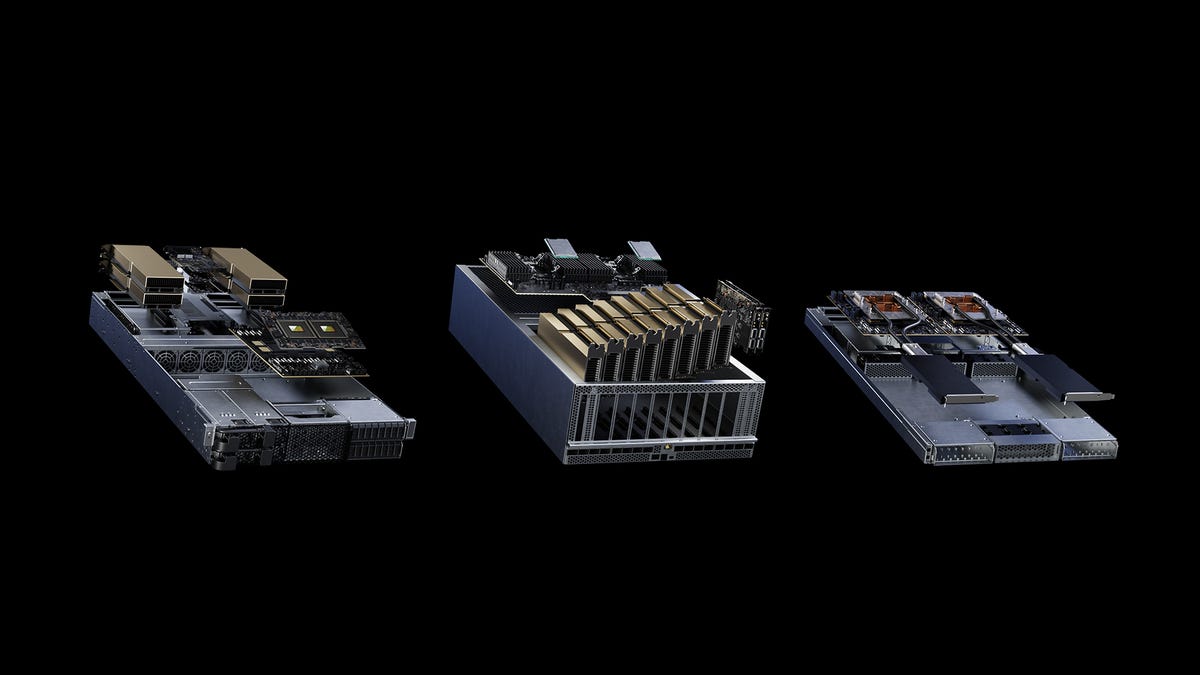

MGX, a reference architecture for creating server variants

Nvidia also unveiled MGX at the conference, a reference architecture that lets system manufacturers create more than 100 server variants. The first partners to use this specification are ASRock Rack, ASUS, GIGABYTE, Pegatron, QCT and Supermicro. QCT and Supermicro will be the first to ship systems, in August, Nvidia said.

MGX is a reference architecture that allows computer system manufacturers to quickly and cost-effectively create more than 100 server variants using Nvidia chips. QCT and Supermicro will be the first to ship systems, in August, Nvidia said. Nvidia

Source: “ZDNet.com”