Forget the H100 GPU with its 80 GB of memory and bandwidth of just over 3 TB/s; here is the H200, a version supercharged with HBM3e, which offers even more throughput.

Everyone knows NVIDIA for its GeForce graphics cards (including the RTX 40 series, soon to be available in Super versions), but the company also has deep expertise in server GPUs.

Its Hopper H100 is a good illustration: a GPU with 80 billion transistors, 132 streaming multiprocessors and 16,896 CUDA cores, backed by 80 GB of HBM2e or HBM3, and drawing up to 700 watts (W).

It now has a sibling that is younger in chronology but bigger in specifications: the H200, which NVIDIA presented at Supercomputing 2023 (SC23). The company also introduced a GH200 server card.

More memory, but also more bandwidth

NVIDIA has not detailed all the characteristics of the H200, but it is clearly an H100 powered by HBM3e memory. Moreover, the company rightly presents it as the first GPU to benefit from this type of memory.

The H200 gets 141 GB of memory, up from 80 GB. Running at 6.25 Gbit/s per pin, this HBM3e is said to offer a memory bandwidth of 4.8 TB/s.

For comparison, the H100 PCIe integrates HBM2e memory at 3.2 Gbit/s on a 5120-bit bus for a bandwidth of 2 TB/s; the H100 SXM5 benefits from HBM3 modules at 5.23 Gbit/s, for a memory bandwidth of 3.35 TB/s.
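The bandwidth figures above follow from the per-pin data rate multiplied by the bus width. The short Python sketch below reproduces them. It rests on two assumptions that the article does not state: that the H100 SXM5 uses the same 5120-bit bus as the PCIe version, and that the H200 uses a 6144-bit bus. The function name is purely illustrative.

```python
def memory_bandwidth_tb_s(pin_rate_gbit_s: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in TB/s.

    pin_rate_gbit_s: data rate per pin, in Gbit/s
    bus_width_bits:  total width of the memory bus, in bits
    """
    gbit_per_s = pin_rate_gbit_s * bus_width_bits  # aggregate rate in Gbit/s
    gbyte_per_s = gbit_per_s / 8                   # 8 bits per byte
    return gbyte_per_s / 1000                      # GB/s -> TB/s

# Figures quoted in the article; bus widths for SXM5 and H200 are assumptions.
h100_pcie = memory_bandwidth_tb_s(3.2, 5120)   # HBM2e, 5120-bit (from the article)
h100_sxm5 = memory_bandwidth_tb_s(5.23, 5120)  # HBM3, assumed 5120-bit
h200 = memory_bandwidth_tb_s(6.25, 6144)       # HBM3e, assumed 6144-bit

print(f"H100 PCIe: {h100_pcie:.2f} TB/s")
print(f"H100 SXM5: {h100_sxm5:.2f} TB/s")
print(f"H200:      {h200:.2f} TB/s")
```

Under these assumptions, the results land on roughly 2 TB/s, 3.35 TB/s and 4.8 TB/s, in line with the quoted figures.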

Putting aside hybrid solutions like the H100 NVL (a combination of two H100 GPUs with 94 GB of memory each, for a bandwidth of 2 × 3.9 TB/s), the H200 thus offers 76% more memory and 43% more bandwidth than the SXM version of the H100.
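As a quick check of those two percentages, here is a minimal Python sketch using only the capacities and bandwidths quoted in this article (80 GB and 3.35 TB/s for the H100 SXM, 141 GB and 4.8 TB/s for the H200). The helper name is hypothetical.

```python
def percent_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, expressed in percent."""
    return (new / old - 1) * 100

# H200 versus H100 SXM, using the figures quoted in the article
memory_gain = percent_increase(141, 80)       # capacity in GB
bandwidth_gain = percent_increase(4.8, 3.35)  # bandwidth in TB/s

print(f"Memory:    +{memory_gain:.0f}%")
print(f"Bandwidth: +{bandwidth_gain:.0f}%")
```

Rounded to the nearest percent, this gives the 76% and 43% mentioned above.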

It remains to be confirmed, but the other characteristics, such as the core count, appear unchanged. Even so, for large language models, which are very hungry for memory and bandwidth, this HBM3e-laden H200 should logically provide a significant performance boost.

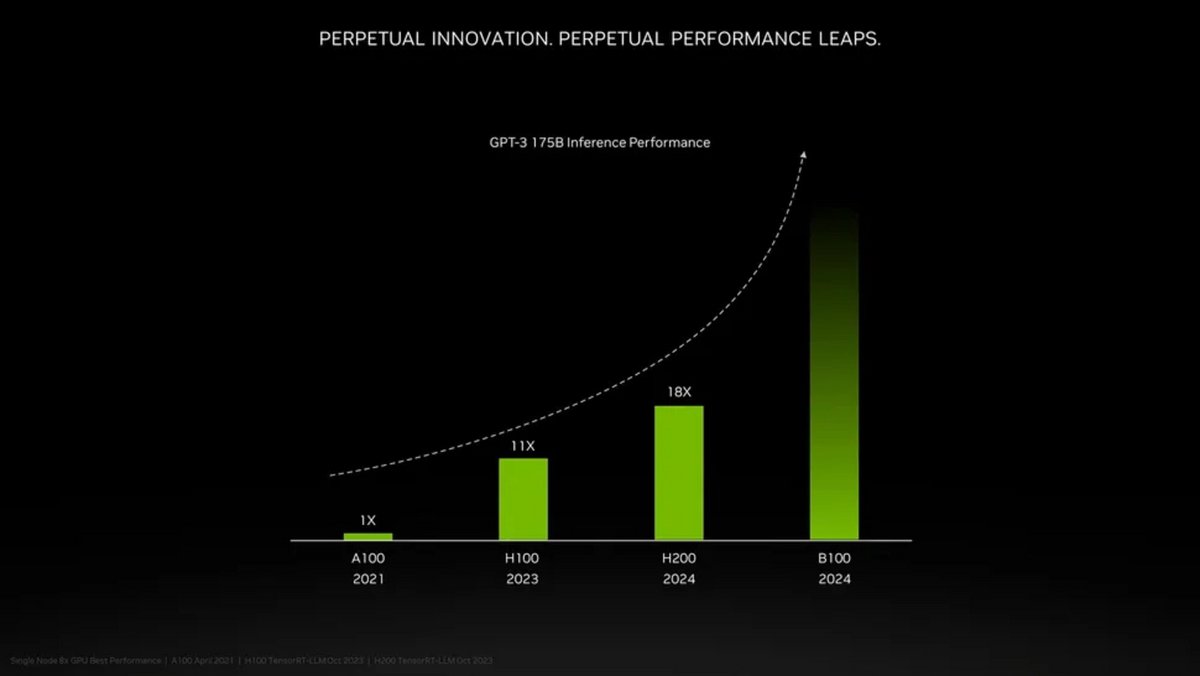

NVIDIA illustrates the extent of that boost with the GPT-3 model (175 billion parameter version). The company claims that a node of 8 H200 GPUs delivers 18 times the performance of an A100 node, while the H100 delivers "only" 11 times. You will also note the allusion to the next generation of NVIDIA GPUs, codenamed Blackwell.

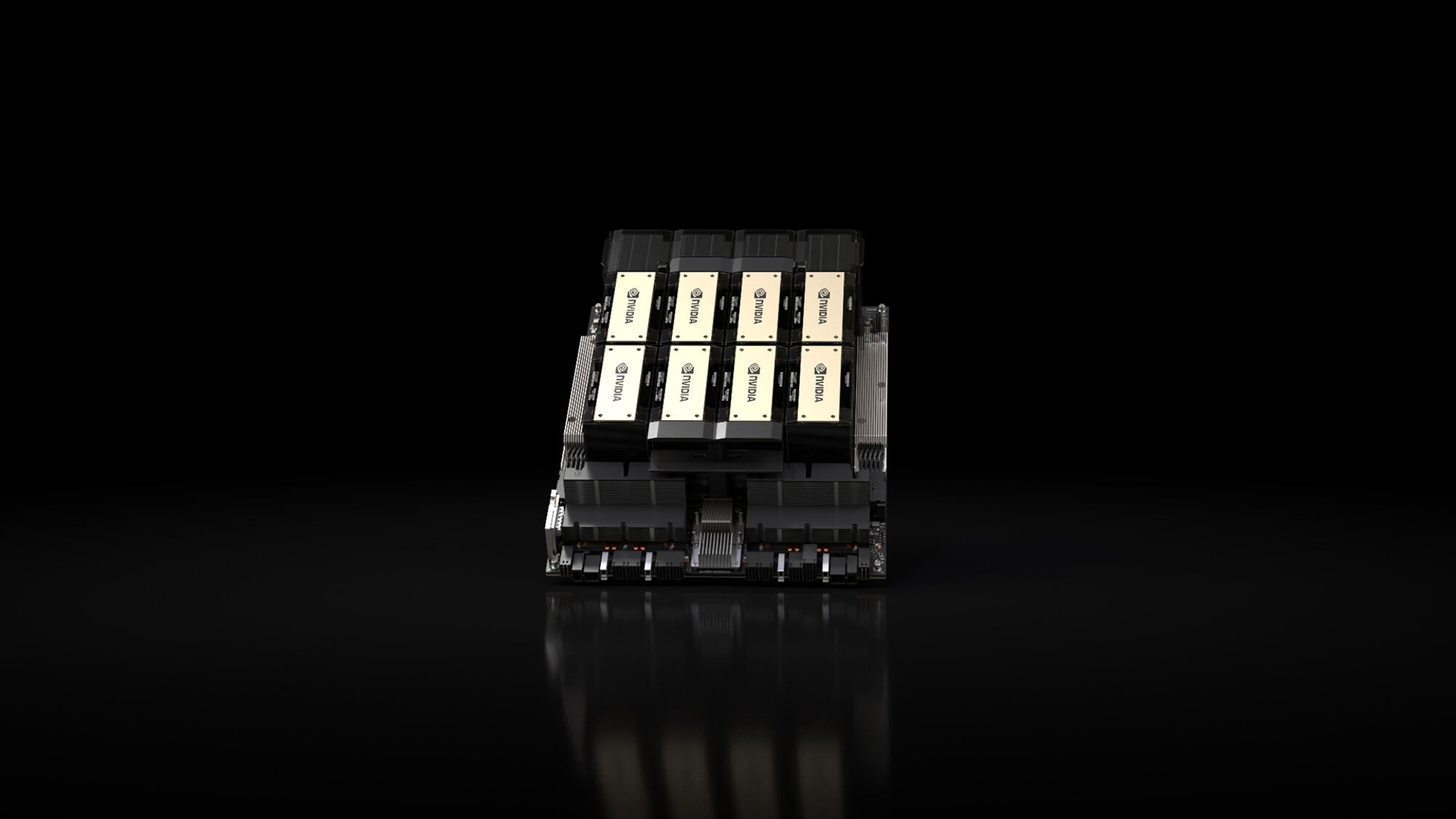

HGX systems that benefit from this new GPU

The H200 will notably be offered within NVIDIA HGX H200 servers, which will remain compatible with both the hardware and software of the HGX H100 systems.

It will also be available via the NVIDIA GH200 Grace Hopper Superchip with HBM3e. Presented last August, this version combines a GPU (H200) and a Grace CPU on the same module.

The NVIDIA H200 will debut during the second quarter of 2024. It is expected to be used in several supercomputers, including Alps at the Swiss National Supercomputing Centre (CSCS), Venado at Los Alamos National Laboratory in the United States and Jupiter at the Jülich Supercomputing Centre in Germany.


Source: NVIDIA
