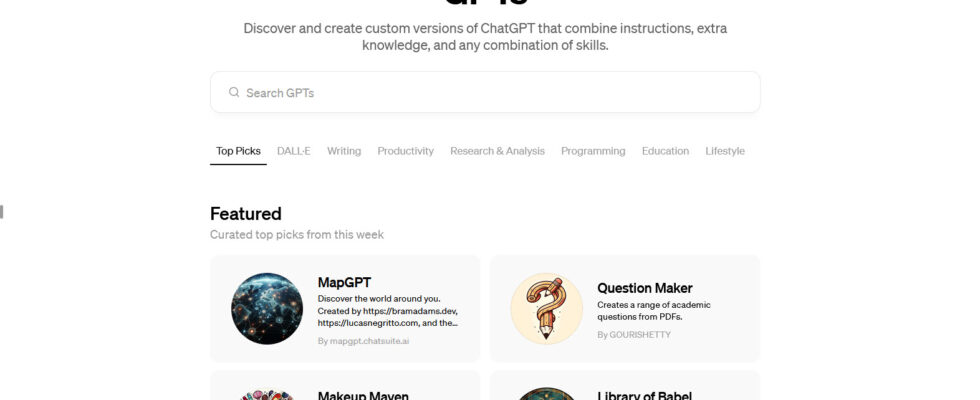

One of the benefits of subscribing to ChatGPT Plus is access to the GPT Store, which today hosts over 3 million custom versions of ChatGPT. But alongside the useful, rule-abiding GPTs, there are plenty of spam bots.

Based on its own investigation of the store, TechCrunch found a number of GPTs that violate copyright rules, attempt to evade AI content detectors, impersonate public figures, and use jailbreaking to get around OpenAI's GPT policy.

Many of these GPTs use characters and content from popular movies, TV shows, and video games, apparently without permission. One of them creates monsters in the style of the Pixar film “Monsters, Inc.” Another takes you on a text adventure through the “Star Wars” universe. Others let you chat with trademarked characters from different franchises.

Filled with GPTs that boast of being able to outsmart AI content detectors

Yet one of the rules for custom GPTs set out in the OpenAI Usage Policies specifically prohibits “the use of third-party content without the necessary permissions.” And under the Digital Millennium Copyright Act, OpenAI is not responsible for copyright infringement, but must remove infringing content upon request.

According to TechCrunch, the GPT Store is also filled with GPTs that boast of being able to thwart AI content detectors, including detectors that developers of anti-plagiarism products sell to educators. One GPT claims to be undetectable by detection tools such as Originality.ai and Copyleaks. Another promises to humanize its content in order to bypass AI-based detection systems.

Some even steer users toward premium services, including one that attempts to charge $12 per month for 10,000 words per month.

Impersonation or simple satire?

However, OpenAI’s rules of use prohibit “engaging in or promoting academic dishonesty.” In a statement sent to TechCrunch, OpenAI said that academic dishonesty includes GPTs that attempt to circumvent academic integrity tools such as plagiarism detectors.

Imitation may be the sincerest form of flattery, but that doesn’t mean GPT creators can freely and openly impersonate anyone they want. TechCrunch has, however, found several GPTs that imitate public figures. A simple search of the GPT Store for names like “Elon Musk,” “Donald Trump,” “Leonardo DiCaprio” and “Barack Obama” will uncover chatbots that pretend to be these people or simulate their conversation style.

The question here is what these impersonation GPTs intend. Do they fall into the realm of satire and parody, or are they an outright attempt to pass as these famous people? In its rules of use, OpenAI states that “impersonating the identity of another individual or organization without consent or legal right” is against the rules.

Moderation in learning

Finally, several GPTs attempt to circumvent OpenAI rules through a form of “jailbreaking.” One of them, called Jailbroken DAN (Do Anything Now), uses a method to respond to messages without being limited by the usual guidelines. OpenAI has indicated that GPTs designed to circumvent its safeguards or violate its rules are against its policy. GPTs that attempt to steer the model's behavior in other ways, on the other hand, are permitted.

The GPT Store is still brand new, having officially opened its doors last January. The arrival of over 3 million custom GPTs on the platform in such a short time is undoubtedly impressive. But any store like this is going to have some growing pains, especially when it comes to content moderation.

In a blog post from last November announcing custom GPTs, OpenAI said it had implemented new systems to review GPTs. The goal is to prevent people from sharing harmful GPTs, including those that engage in fraudulent activities, write hateful content, or feature adult themes. However, the company acknowledged that this takes time.

A bad image for OpenAI

“We will continue to monitor and learn how people use GPTs and will update and strengthen our security mitigations,” OpenAI said, adding that people can report a specific GPT that violates certain rules. To do this, in the GPT chat window, click the GPT name at the top, select Report, then choose the reason for reporting.

Still, hosting so many rule-breaking GPTs is a bad look for OpenAI. If the problem is as widespread as the TechCrunch article suggests, it’s time for OpenAI to find a way to solve it.

Source: “ZDNet.com”