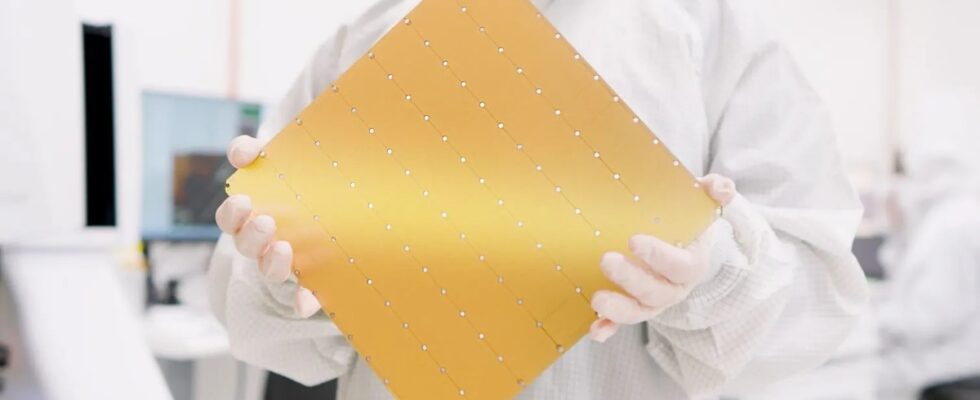

It’s the titan of the processor world, the largest single piece of silicon in the world. This is the new WSE-3, the third generation of the Wafer Scale Engine, a chip of surprising gigantism. While manufacturers can cram hundreds of chips of a few square millimeters each onto a wafer for our smartphones, or assemble small chip tiles as in Intel’s Meteor Lake, Cerebras does just the opposite.

This American company is releasing its third generation of wafer-sized chip (Wafer Scale Engine), a monster that occupies an entire wafer, i.e. 21.5 x 21.5 cm. This format offers advantages, which we will examine, but also poses enormous challenges, particularly in terms of efficiency and cooling.

Cores and (lots of) (super) memory

Due to its design and dimensions, the WSE-3 will never make it into any mainstream machine. It is a unique chip, dedicated to AI calculations, whose exports are closely regulated by the American government: China cannot buy any.

While the previous version, the WSE-2, already integrated 2,600 billion transistors forming 850,000 AI cores plus on-board memory, the WSE-3 pushes the limits a little further with its 4,000 billion transistors for 900,000 cores (now considerably more powerful and complex). All with no less than 44 GB of SRAM, the ultra-fast memory integrated into processors, which is generally measured in kilobytes or megabytes!
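To put these figures in perspective, here is a small back-of-the-envelope sketch in Python. It only uses the numbers quoted above; the even split of SRAM across cores and the decimal reading of "GB" are simplifying assumptions, not a description of how Cerebras actually lays out its memory.

```python
# Figures quoted in the article for the two generations.
wse2 = {"transistors": 2_600 * 10**9, "cores": 850_000}
wse3 = {"transistors": 4_000 * 10**9, "cores": 900_000, "sram_bytes": 44 * 10**9}

# Generation-over-generation growth.
transistor_ratio = wse3["transistors"] / wse2["transistors"]
core_ratio = wse3["cores"] / wse2["cores"]

# Assumption: SRAM split evenly across cores, with 1 GB = 10**9 bytes.
sram_per_core_kb = wse3["sram_bytes"] / wse3["cores"] / 1_000

print(f"Transistors: x{transistor_ratio:.2f}")
print(f"Cores:       x{core_ratio:.3f}")
print(f"SRAM/core:   about {sram_per_core_kb:.1f} kB")
```

Under these assumptions the transistor count grows by roughly half while the core count rises by about 6%, which is consistent with each core becoming more complex, and each core would sit next to a few tens of kilobytes of SRAM.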

Compared with Nvidia’s H100, which is 57 times smaller, carries 52 times fewer cores and relies on external memory, the key argument of a WSE is as much its crazy number of cores as its ultra-fast on-board SRAM. With such power concentrated in a single chip, there is no longer any need to suffer the latencies of external memory or, in this specific case, of the network that usually interconnects GPUs.

With an internal bandwidth of 21 petabytes per second, Cerebras’ WSE-3 is a computing war machine… which can also be networked: G42, a technology holding company based in Abu Dhabi, has decided to connect 64 Cerebras systems, each powered by a WSE-3, for its Condor Galaxy 3 supercomputer.

The future monster will be installed in Dallas, Texas, in the United States, and will benefit from the 125 petaflops that each chip delivers to meet the needs of AI training.
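The scale of Condor Galaxy 3 follows directly from these two numbers. As a rough sketch, assuming the peak figure of each chip simply adds up across the 64 systems (real training throughput will depend on the workload and the interconnect):

```python
# Figures quoted in the article.
SYSTEMS = 64             # Cerebras systems in Condor Galaxy 3
PFLOPS_PER_CHIP = 125    # peak petaflops per WSE-3

# Assumption: peak compute aggregates linearly across systems.
total_pflops = SYSTEMS * PFLOPS_PER_CHIP
total_exaflops = total_pflops / 1_000

print(f"{total_pflops} petaflops, i.e. {total_exaflops:.0f} exaflops of peak AI compute")
```

That works out to 8,000 petaflops, or 8 exaflops, of theoretical peak.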

An extraordinary chip with consumption already under control

Although the density of transistors has almost tripled, the WSE-3 does not consume more than the WSE-2 (2021)… which itself was no more demanding than the WSE-1 launched in 2019. The reason is simple: after a first generation manufactured on a 16 nm process, Cerebras moved to 7 nm, then to 5 nm with this third version. This gradual reduction in transistor size allows engineers to put more power into each generation without needing to change the initial system.

To cool such a gigantic chip, each of Cerebras’ CS-3 systems uses a custom water cooling system designed in-house. As for power, there is not one, but twelve (!) power supplies of 4 kW each: six for operation, six for the backup circuit. And each CS-3 system has no fewer than twelve 100-gigabit network connections.
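These figures give an upper bound on what a CS-3 can draw and move over the network. A quick sketch, keeping in mind that the rated capacity of the power supplies is not the same thing as the measured consumption, which the article does not give:

```python
# Figures quoted in the article.
PSU_COUNT = 12      # power supplies per CS-3
ACTIVE_PSUS = 6     # the other six are for the backup circuit
PSU_KW = 4          # rated power of each supply, in kW
LINKS = 12          # network connections per CS-3
LINK_GBPS = 100     # speed of each connection, in Gbit/s

active_kw = ACTIVE_PSUS * PSU_KW           # capacity available in normal operation
installed_kw = PSU_COUNT * PSU_KW          # capacity including the backup supplies
network_tbps = LINKS * LINK_GBPS / 1_000   # aggregate network bandwidth

print(f"Active PSU capacity:    {active_kw} kW (of {installed_kw} kW installed)")
print(f"Aggregate network link: {network_tbps} Tbit/s")
```

In other words, the six active supplies can deliver up to 24 kW, and the twelve links add up to 1.2 Tbit/s per system.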

The format may be imposing, but that is precisely its advantage: thanks to a transistor density unique in the world, each system, which Cerebras compares to a small refrigerator in a student room, replaces several dozen classic server blades. However, it remains to be seen whether Cerebras will be able to establish itself in software, the key to hardware adoption, a field in which Nvidia remains the undisputed champion.