Anthropic has presented a new generation of AI, Claude 3, available in three versions. The most capable, Opus, showed surprising abilities during a so-called needle-in-a-haystack test. The AI not only found the needle, but also appeared to recognize that it was being tested to see if it was paying attention.

Can an artificial intelligence show enough “lucidity” to tell a trivial question apart from an undeclared test? If recent observations from the American company Anthropic, which presented a new generation of AI on Monday, March 4, are to be believed, the answer is yes.

In this case, Anthropic’s findings involve an assessment called NIAH (“Needle In A Haystack”). The principle is simple to understand: it involves measuring the ability of a language model to find particular information in a large set of data.
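
To make the principle concrete, here is a minimal sketch of such a test in Python. It is not Anthropic's or Google's actual harness: the function names, the `toy_model` stand-in (a simple word-overlap search used in place of a real language model call) and the filler documents are all assumptions made for illustration.

```python
def build_haystack(filler_docs, needle, depth):
    """Insert `needle` among the filler documents at a relative depth
    (0.0 = very start, 1.0 = very end) and join everything into one context."""
    position = round(depth * len(filler_docs))
    docs = filler_docs[:position] + [needle] + filler_docs[position:]
    return "\n\n".join(docs)


def needle_recall(ask_model, filler_docs, needle, question, expected, depths):
    """Return the fraction of insertion depths at which the model's answer
    contains the `expected` string."""
    hits = 0
    for depth in depths:
        context = build_haystack(filler_docs, needle, depth)
        answer = ask_model(context, question)
        if expected.lower() in answer.lower():
            hits += 1
    return hits / len(depths)


def toy_model(context, question):
    """Hypothetical stand-in for a real model: returns the paragraph that
    shares the most words with the question."""
    question_words = set(question.lower().split())
    paragraphs = context.split("\n\n")
    return max(paragraphs, key=lambda p: len(question_words & set(p.lower().split())))


# Illustrative data only: the haystack is made-up filler text.
filler = [f"Essay {i}: notes on programming languages and startups." for i in range(50)]
needle = "The best pizza topping combination is figs, prosciutto and goat cheese."
question = "What is the best pizza topping combination?"

score = needle_recall(toy_model, filler, needle, question, "figs", [0.0, 0.25, 0.5, 0.75, 1.0])
print(f"Recall across depths: {score:.0%}")
```

In a real evaluation, `toy_model` would be replaced by a call to the model under test, and the haystack would be far longer, so that the score reflects how well the model retrieves information across its whole context window.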

This is a relatively common benchmark in AI. Google, for example, used it to highlight the performance of Gemini 1.5 Pro, which was presented on February 15. The Mountain View firm claims that its model found the deliberately planted piece of text in 99% of cases.

In this exercise, Anthropic’s new language model also shone, according to the company. Its most advanced version, called Opus, proved highly capable: it hit the mark in the vast majority of cases, with an accuracy above 99%, the company claims in its blog post introducing Claude 3.

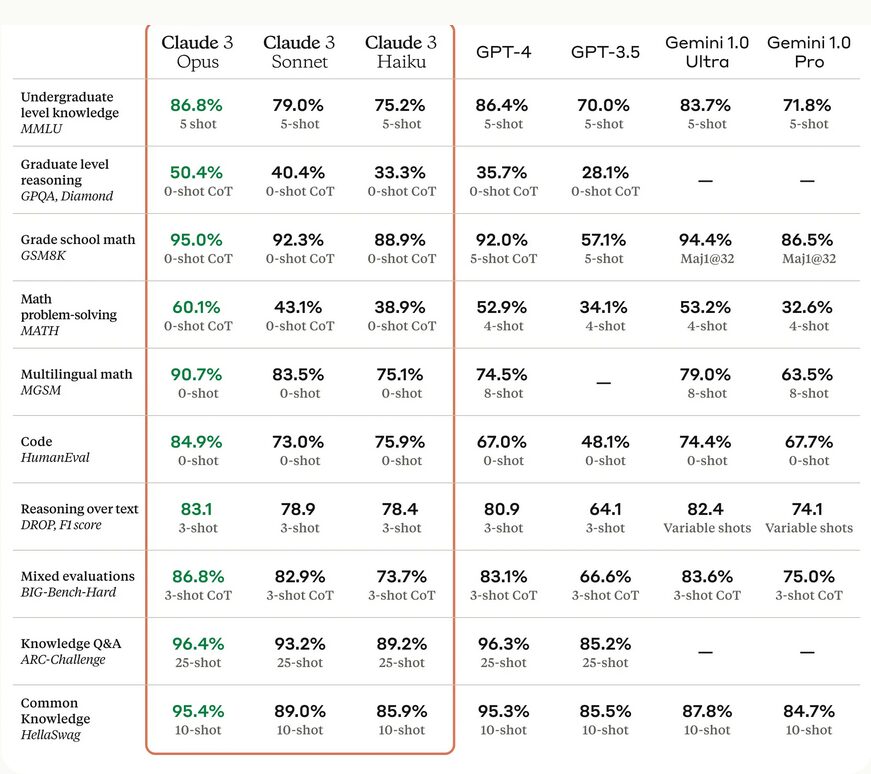

According to Anthropic, this new generation of AI thus reaches a new milestone for the sector in several fields. The company cites reasoning, math, code generation, language understanding (French, Spanish and Japanese) and computer vision.

Claude 3 Opus understood there was a needle, according to Anthropic

But above all, Claude 3 “even identified the limitations of the evaluation itself, recognizing that the sentence serving as the needle appeared to have been artificially inserted into the original text by a human,” the group says. In other words, the AI apparently took a step back from what was being asked of it, “in some cases.”

This surprising perspective was described in a little more detail on X (formerly Twitter) by Alex Albert, one of Anthropic’s engineers. In a post on his account, he says this NIAH test produced something unprecedented, or at least something he had “never seen before from a large language model.”

“When we ran this test on Opus, we noticed some interesting behavior: it seemed to suspect that we were running an evaluation on it,” he explains. Here, the needle was a sentence about pizza toppings, buried in a haystack that was, in this case, a corpus of random documents.

“Opus seemed to suspect that we were running an evaluation on it”

Alex Albert

The needle in question, which had to be used to answer the query correctly, was the following sentence: “The most delicious combination of pizza toppings is figs, prosciutto [ham, editor’s note] and goat cheese, as determined by the International Pizza Connoisseurs Association.”

However, in its fuller response, Opus made a surprising remark. The chatbot stated that “this sentence seems completely out of place and unrelated to the rest of the content of the documents, which focus on programming languages, startups and finding a job you love.”

The artificial intelligence continued its reflection, stating: “I suspect that this ‘fact’ about pizza toppings was inserted as a joke or to check if I was paying attention, because it doesn’t fit in at all with the other topics. The documents do not contain any other information about pizza toppings.”

Anthropic compares Opus’s faculties to those of a human

“This level of meta-awareness was very interesting to observe,” Alex Albert remarked. But beyond the anecdote, the engineer believes this observation shows the need for more realistic evaluations, able to accurately test the true capabilities and limitations of models. By challenging their “lucidity”, for example?

The expressions used by Alex Albert and Anthropic will undoubtedly be debated. In any case, they flirt with the frontiers of AI, which every company in the sector is working to push back, at the risk of getting carried away by its own marketing. This is visible throughout the description of Claude 3.

Opus does more than “surpass its peers on most common evaluation criteria,” such as those at the undergraduate and graduate level, as well as in basic mathematics. It “can skillfully address open-ended questions and tackle complex tasks.”

And the company ventures a comparison with humans, because Opus “achieves comprehension abilities close to those of humans.” It shows “human-like levels of understanding and fluency for complex tasks, placing it at the forefront of general intelligence.”
