A study was hastily withdrawn from a scientific journal two days after it was posted online. The reason? An ill-advised use of Midjourney by the authors. All the images were AI-generated, with obvious problems in both the captions and transparency.

The study was meant to be entirely serious. Accepted on December 28, 2023, it was published by the scientific publisher Frontiers on February 14, 2024. It had even been examined by other specialists, in line with the principle of peer review. And yet, on February 16, the paper was retracted.

So what happened for the work of three Chinese researchers (Xinyu Guo, Dingjun Hao and Liang Dong) to be retracted within two days? A methodology problem? Exaggerated results? Insufficiently representative samples? In reality, the problem lies squarely with the illustrations.

Midjourney to illustrate a scientific paper

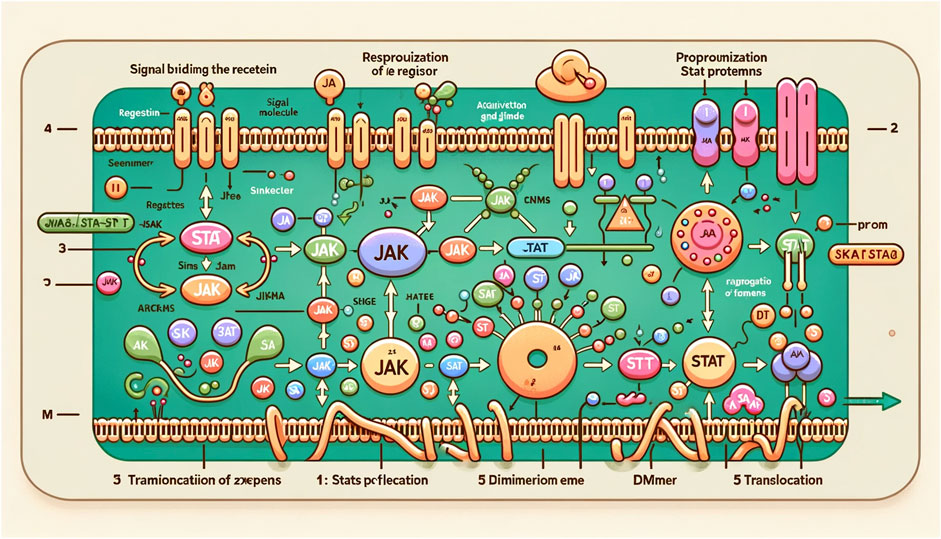

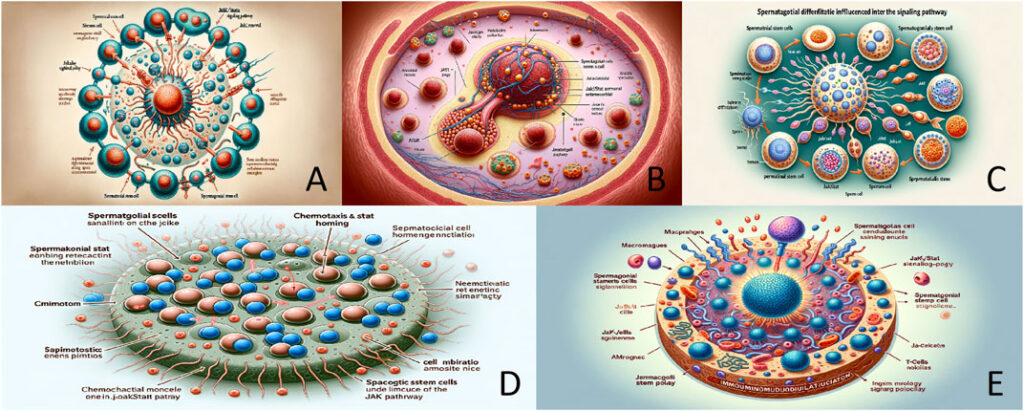

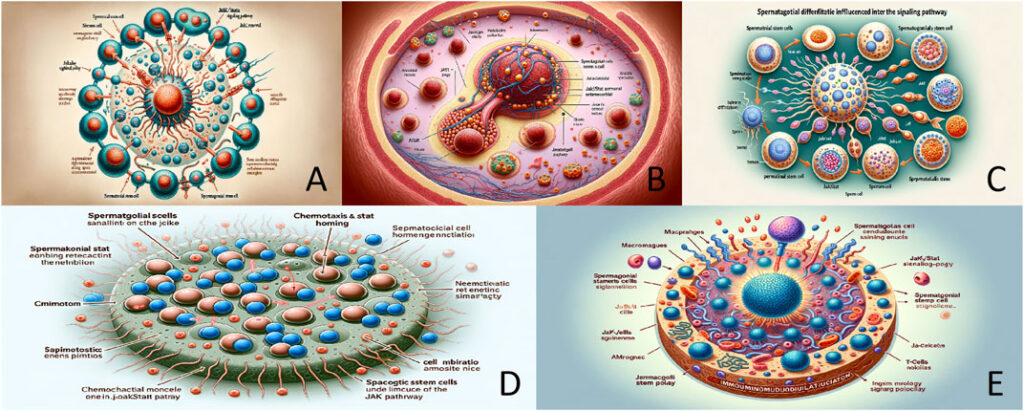

It appears that the authors used artificial intelligence (AI) to generate all the visuals for the study. There are three of them, two of which are collages of several images showing stages of what the study sets out to explain. The tool used to create these illustrations was Midjourney.

The study, titled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway”, is no longer available on Frontiers. Instead, two warning notices are visible on the publisher’s site.

However, a copy captured on February 15 by the Internet Archive can still be viewed. The use of AI was not hidden: it was clearly stated in the text (“Images in this article were generated by Midjourney”, we read just before the first image). On the other hand, this detail was not repeated in each caption.

It is not known whether existing images served as a basis for these visuals, nor whether they were modified after generation, for example with editing software such as Photoshop, to retouch particular parts. To the untrained eye of someone unfamiliar with science or AI, these illustrations might seem entirely credible.

The type of prompt used, that is, the instruction given to the tool to produce a result, is also a mystery, as is the number of attempts needed to achieve these results. One can assume a certain skill in writing the prompt to arrive at such renderings, which, moreover, evidently made it through peer review.

Text that means nothing

However, a closer look reveals defects in the images, particularly in the text. Midjourney has always struggled to produce meaningful writing in images, even with the recent version 6, although real progress has been observed in recent months.

So we can see captions that don’t make much sense, to put it mildly: “iollotte sserotgomar cell”, “retat”, “dck”, “dissilced”, “Tramioncatiion of zoepens”, “Stats poflecation”, etc. Some letters are not fully formed, and we had to guess at them. Elsewhere, fully formed terms simply mean nothing.

According to the information at the bottom of the article page, the study was edited by an Indian researcher, Arumugam Kumaresan, of the National Dairy Research Institute. It was received by Frontiers on November 17, 2023, before the alpha release of Midjourney v6.

The study was reviewed by another Indian researcher, Binsila B. Krishnan, from the National Institute of Animal Nutrition and Physiology, and by Jingbo Dai, from Northwestern Medicine in the United States. The caption problems were evidently either not noticed or not considered serious.

As for the study’s authors, Xinyu Guo and Dingjun Hao work at Hong Hui Hospital, in the spine surgery department, and at Jiaotong University in Xi’an. Liang Dong is affiliated with the same hospital.

In an initial warning notice on February 15, Frontiers said it was aware of problems with the article, adding: “The article has been removed while an investigation is conducted and this notice will be updated accordingly following the conclusion of the investigation”. On the 16th, the decision was made: the paper was retracted outright.

“The article does not meet the editorial and scientific rigor standards of Frontiers in Cell and Developmental Biology”, the publisher explains. It was indeed the “Concerns Raised About Nature of AI-Generated Figures” that led to this outcome, after some readers reported them to the journal’s editors.

Rules on the use of AI in scientific subjects

In its guidelines for authors, Frontiers sets out rules governing the use of AI technologies (ChatGPT, Jasper, Dall-E, Stable Diffusion, Midjourney, etc.) to “write and edit manuscripts” that scientists wish to publish. These include obligations regarding transparency, accuracy and the avoidance of plagiarism.

The author is responsible for verifying the factual accuracy of any content created by generative AI technology, Frontiers adds. “Illustrations produced or edited using generative AI technology should be checked to ensure that they correctly reflect the data presented in the manuscript.”

In that case, “this use should be acknowledged in the acknowledgments section of the manuscript and in the methods section if applicable. This explanation must list the name, version, model and source of the generative AI technology”. This information was evidently not provided on the page.

Finally, Frontiers also asks authors to provide all the prompts they used, so that it is clear how any artificial content appearing in a study was produced. Here too, the study seems to ignore these requirements, unlike another, much more transparent one.

The study “Group trust dynamics during a risky driving experience in a Tesla Model”, also published by Frontiers and peer reviewed, also uses AI-generated images. But its disclosures are more numerous and clearer.
