If you’ve ever searched for a restaurant on Google Maps, you may already have an idea of its menu and decor. But it can be hard to anticipate what the overall experience of your outing will be like. Will it be too crowded when you arrive? Does the lighting create the right atmosphere?

These are the kinds of questions that Google is trying to answer with the new “immersive view” offered in Google Maps. This new feature, announced last year, launched Tuesday in London, Los Angeles, New York, San Francisco and Tokyo. It will be available in the coming months in other cities.

Using artificial intelligence, the feature fuses billions of Street View and aerial images into an “immersive view” of the world. It relies on Neural Radiance Fields (NeRF), an AI technique that builds 3D representations from ordinary 2D photos. Users can thus get a sense of a place’s lighting, the texture of its materials and its surroundings, such as what is in the background.

Picture: Google.

Something new for Lens

Google will also soon expand Search with Live View in Maps to more European cities, including Barcelona, Madrid and Dublin. Additionally, the Indoor Live View feature is expanding to more than 1,000 new airports, train stations and shopping malls in cities including London, Paris, Berlin, Madrid, Barcelona, Prague, Frankfurt, Tokyo, Sydney, Melbourne, São Paulo and Taipei.

Maps is also gaining new features for drivers of electric vehicles. The platform will now show places that have on-site charging stations and help drivers find chargers rated at 150 kilowatts or more.

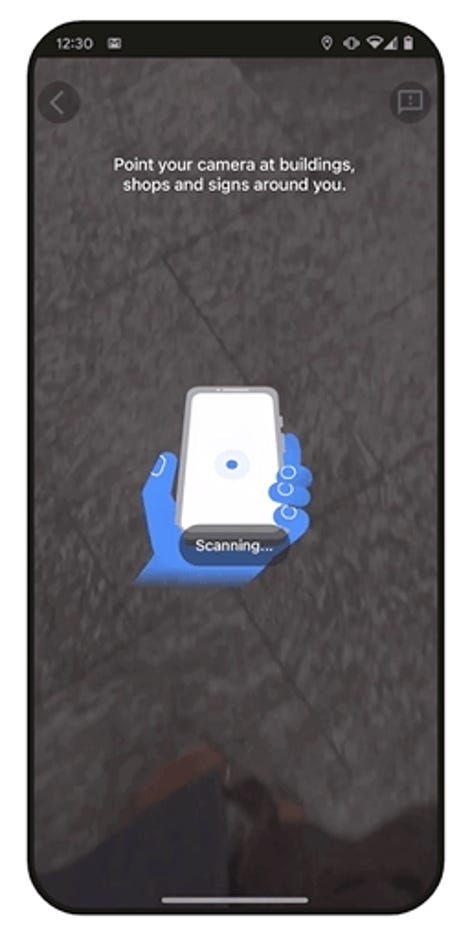

Google is also expanding the ways to explore the world with Lens, the AI-powered tool that lets you search what you see using your camera or a photo. First launched in 2017, Lens is now used more than 10 billion times per month, according to Google.

More context for Google Translate

With multisearch in Lens, you can search using text and images at the same time. A few months ago, Google launched “multisearch near me” to let you take a picture of something (such as a specific dish) and find it nearby. Google said it will roll out “multisearch near me” in the coming months in all languages and countries where Lens is available.

Multisearch is also expanding to images on the mobile web. Additionally, Google is bringing a “search on your screen” feature to Lens on Android. Users will be able to search for what they see in photos or videos on their screen, whatever app or website they are using, without leaving it.

Google Translate is also getting an update. Among other things, the tech giant announced on Wednesday that the tool will provide more context for translations. For example, it will tell you if words or phrases have multiple meanings and help you find the best translation. This update will be available in English, French, German, Japanese, and Spanish in the coming weeks.

Source: ZDNet.com