GPT-4, just unveiled, offers real possibilities in terms of accessibility, which could make life easier for the visually impaired.

OpenAI has just unveiled GPT-4, its brand-new multimodal artificial intelligence model. The model's flagship new capability, vision, lets it understand images as well as text. The start-up Be My Eyes, which uses technology to make the world more accessible to blind and visually impaired people, is already putting it to work.

GPT-4 in the service of accessibility for the visually impaired

Access to GPT-4's capabilities is currently limited, even on ChatGPT Plus: the paid version of the chatbot does not yet offer image processing and is capped at 100 requests per 4-hour period. Be My Eyes, however, is the first OpenAI partner able to take advantage of the model's new capabilities.

The Danish mobile app now uses GPT-4 to help blind and visually impaired people understand the content of images. Launched in 2015, the app is known for having built a community of 6.3 million volunteers who help its users with everyday tasks, such as finding their way around an airport or identifying a product.

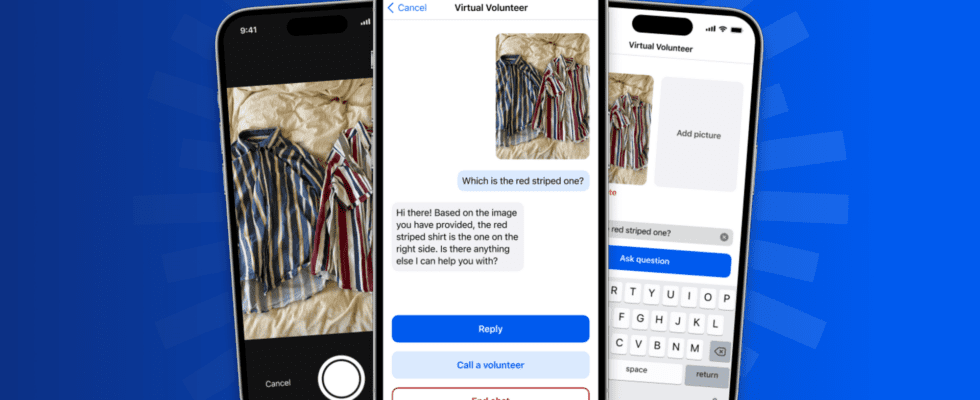

Be My Eyes has just designed a new tool, Virtual Volunteer, which is in beta and aims to improve usability, accessibility and access to information for its users around the world. It can provide the same level of context and understanding as a human volunteer, and it is powered entirely by OpenAI's GPT-4 model.

Which of these two shirts is red?

Here is how the tool works. A user sends an image through the app to a volunteer, in this case a virtual one powered by artificial intelligence. The AI answers any question the user asks about the image, giving visually impaired people instant visual assistance.

Be My Eyes gives two simple everyday examples. Suppose a user sends a photo of the inside of their refrigerator and wants an inventory of its contents in order to shop for or order groceries: the AI will tell them what it contains. The user can go further and ask it for one or more recipes that use the ingredients still in the fridge. The AI then provides a list of recipes, with all the steps to follow.

It will also be possible to ask the app which of two shirts is red, for example. Imagine the possibilities. Be My Eyes also gives the user a fallback: if the AI does not respond, or its answer is not satisfactory, the user can always ask to be connected to a human volunteer.

The start-up promises to put the new feature in users' hands, free of charge, within "a few months". For now it remains in beta with the company's corporate customers. Nevertheless, Be My Eyes promises that the group of beta testers will be expanded rapidly in the coming weeks.

Sources: Be My Eyes, OpenAI
