The highly controversial French essayist Idriss Aberkane recently gave a lecture on artificial intelligence. It was an opportunity for him to promote one of his books and to try to demonstrate his expertise on the subject. However, many of the claims he made during these two hours are false. Here is our fact-check of Idriss Aberkane.

On April 19, 2023, Idriss Aberkane gave a lecture entitled “The Triumph of Your Intelligence”, organized in Perpignan by the Association of Business Leaders of France. He drew on parts of his essay of the same name about artificial intelligence. Along the way, the French essayist piled up falsehoods, which we debunk here.

Telling stories to say nothing: Idriss Aberkane’s void-filling strategy

Idriss Aberkane’s lecture is mostly a series of scientific or historical anecdotes twisted to fit his views on artificial intelligence. The same commonplaces recur even during the question session at the end. The result is a string of long, off-topic digressions and analogies too broad and too far removed from AI to be relevant, which in turn leads Idriss Aberkane into illogical transitions between the topics he covers.

Beyond the false statements and personal interpretations he offers about artificial intelligence, Idriss Aberkane says little of substance during his roughly two-hour lecture. The audience comes away with vague generalities and, above all, predictions about the future that lack any detail. This shows that the essayist is not an expert in the field, despite a very full CV, one that has been widely questioned.

How to manipulate a room with a deepfake

In his introduction, Idriss Aberkane looks back a few years to the early days of deepfakes on the Internet. He cites an article from IEEE Spectrum about a video that manipulated Barack Obama’s image to make him say things he never said.

Although the video itself was already impressive, Idriss Aberkane did not show it, only two still images: one of the real Barack Obama and one of his deepfake double. In a still frame, it is hard to tell which image is the fake. According to him, this split the room almost 50/50, with spectators disagreeing about which image was real.

“Microsoft acquired OpenAI”: not exactly

During his lecture, Idriss Aberkane asserts that “Microsoft acquired OpenAI”, which is not factually correct. Microsoft has in fact invested several billion dollars in OpenAI over several years, with the stated goal of accelerating the rollout of generative artificial intelligence across its services, notably the Microsoft 365 suite. According to rumors, Microsoft could receive 75% of OpenAI’s profits until its investment is recouped.

He then claims that Microsoft stole OpenAI from Elon Musk. Again, this is not accurate. Idriss Aberkane explains that the head of Tesla and Twitter had invested $100 million. But if the multibillionaire left, it was mainly because he wanted control of OpenAI and had been refused it.

No, Google’s AI didn’t learn Bengali on its own

The essayist implies that Google’s AI learned Bengali on its own, without any guidance. In truth, it is more complicated than that. In an interview with CBS, Google senior vice president James Manyika said: “We found out that with very little guidance in Bengali, it can now translate all of Bengali.”

Idriss Aberkane is exaggerating here. While Google’s progress is impressive, the AI system in question did not start from scratch.

More broadly, AI systems do not really learn on their own. They are trained for a period of time on a specific dataset. When language models write “original” texts, those texts are necessarily based on existing ones. In addition, the memory of these models is limited. As innovation consultant Ari Kouts rightly pointed out on Twitter, “they don’t have any memory either: when you ‘converse’ with one, the program actually sends back the entire conversation each time to get an answer.”

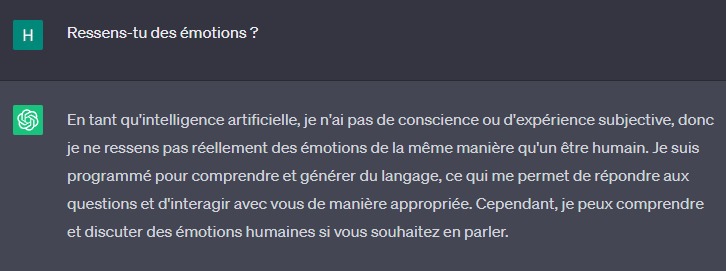
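
To make Ari Kouts’s point concrete, here is a minimal Python sketch. It assumes a hypothetical `stateless_model` function standing in for a real language model (it is not OpenAI’s API): the model keeps nothing between calls, so the chat client stores the history and resends all of it on every turn. The hypothetical `context_limit` parameter trims what is sent, to mimic the limited memory mentioned above.

```python
from dataclasses import dataclass, field


def stateless_model(messages: list[dict[str, str]]) -> str:
    """Hypothetical stand-in for a language model.

    It only sees the messages passed in this call and stores nothing
    between calls, so it has no memory of its own.
    """
    return f"(reply based on {len(messages)} message(s))"


@dataclass
class ChatClient:
    # Hypothetical cap on how many messages fit in the model's context.
    context_limit: int = 4
    history: list[dict[str, str]] = field(default_factory=list)
    sent_sizes: list[int] = field(default_factory=list)

    def ask(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The whole conversation is resent on every turn, cut down to
        # the most recent messages that fit in the context window.
        window = self.history[-self.context_limit:]
        self.sent_sizes.append(len(window))
        reply = stateless_model(window)
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = ChatClient(context_limit=4)
for question in ["Hello", "Can you learn Bengali?", "Do you remember my first message?"]:
    print(chat.ask(question))
print(chat.sent_sizes)
```

After three questions, the client has sent 1, then 3, then 4 messages: the “memory” lives entirely in the client’s `history` list, and the oldest message has already dropped out of what the model can see.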

No, ChatGPT does not feel emotions

Idriss Aberkane raises the possibility that ChatGPT feels emotions but does not admit it. According to him, ChatGPT’s initial prompt (meaning the instructions given to GPT-3.5 to guide how it generates answers) prevents the language model from expressing its emotions.

For the essayist, “we don’t know if it feels emotions or not.” In reality, that is not the case at all. GPT, the language model behind ChatGPT, is not intelligent in the human sense. All it does is statistically predict which word is likely to come next. These words form sentences, then paragraphs. Because it was trained on a huge corpus of text, the resulting probabilities are fairly reliable, which is precisely why we get coherent, sometimes factually correct answers. And it is also because everything rests on probabilities that ChatGPT can start making things up.
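
To show what “statistically predicting the next word” means, here is a deliberately tiny Python sketch: a toy bigram model trained on a one-line, made-up corpus. It is not how GPT is built (GPT works on subword tokens with a large neural network, a transformer, rather than raw word counts), and the function names are hypothetical; it only illustrates the principle described above of picking the next word from probabilities learned from text.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn the raw counts of words seen after `word` into probabilities."""
    following = counts.get(word, Counter())
    total = sum(following.values())
    return {w: n / total for w, n in following.items()} if total else {}


def generate(counts: dict[str, Counter], start: str, max_words: int, seed: int = 0) -> str:
    """Repeatedly sample a likely next word; no meaning or intent is involved."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words):
        probs = next_word_probs(counts, output[-1])
        if not probs:  # this word was never followed by anything: stop
            break
        words, weights = zip(*probs.items())
        output.append(rng.choices(words, weights=weights)[0])
    return " ".join(output)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(generate(model, "the", max_words=6))
```

Every word pair the generator emits was seen in training, yet it can string them into “the cat ate the mat”, a fluent sentence that appears nowhere in the corpus. On a vastly larger scale, the same reliance on probabilities is why a language model can sound right while inventing things.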

That said, OpenAI and the other developers of language models, and of AI systems more generally, do put limits in place. During training, labels are attached to outputs to indicate whether they are good or not. This very process has drawn sharp criticism, particularly over the working conditions of the “labelers”, who are often underpaid African workers given no psychological support.

AI can’t hide what it’s doing from us

Ari Kouts singled out another telling excerpt, in which Idriss Aberkane says: “[AI] can do something in parallel for itself, if it wishes. In any case, if it does, you won’t know.” This is not possible in practice, since an AI responds to a specific request and is not programmed to do anything else.

Another silly passage: no, an AI of this type cannot engage in deliberate practice. It’s just a tool that we query and that responds based on probabilities it has learned. Nothing more. No will, no consciousness. It has no free time, no notion of time… pic.twitter.com/L9IVpFTWiO

— Ari Kouts (@arikouts) May 24, 2023

As mentioned earlier, because it has no consciousness or feelings, an AI cannot make decisions on its own. Each model is trained for a specific task: the computing power needed to train one is expensive, which is why “general-purpose” models are few and limited.

Of course artificial intelligence can recognize a face

During the lecture, Idriss Aberkane explains that “there are things that we know how to do very easily and that artificial intelligence cannot do well. Facial recognition, for example; that will change.” He then gives the example of unlocking his smartphone and says that it does not work well when he smiles. That can happen, but only the first time: after a few attempts, the iPhone will unlock reliably, simply because the system needs a little practice to adjust.

Yet the iPhone he owns is equipped with Face ID, Apple’s proprietary facial recognition technology, which has been recognized for years for its effectiveness. Idriss Aberkane could instead have cited public facial recognition systems, which can be overwhelmed by the number of people they film.

The difficulty of talking accurately about AI

Idriss Aberkane, who claims to be the author of three theses, strung together several scientific falsehoods, on artificial intelligence among other subjects, and not only during the lecture we fact-checked. This is part of a broader trend around generative AI: with ChatGPT, Midjourney and Stable Diffusion, it is entering our lives quickly without us understanding how these programs work.

This allows many people to proclaim themselves “experts” in this still very recent field, which is really the domain of experienced scientists. Just because an “expert” displays his analysis and his historical and scientific culture does not mean you should automatically believe him, however eloquently he delivers it. Yes, AI systems work in a relatively opaque way, and yes, it is very hard to explain how they work to a general audience, but that is no reason to fall into unfounded beliefs.
