New discoveries about the capabilities of generative AI are announced every day. However, these findings come with concerns about how to regulate the use of generative AI. Legal proceedings against OpenAI illustrate this need.

As AI models evolve, legal regulation remains a gray area. What we can do now is be mindful of the challenges that come with using this powerful technology, and learn more about the safeguards that already exist.

Using AI to combat AI manipulation

Whether it’s lawyers citing fake news created by ChatGPT, students using AI chatbots to write their papers, or even AI-generated photos of Donald Trump’s arrest, it’s becoming increasingly difficult to distinguish real content from content created by generative AI, and to know where the limits on using these AI assistants lie.

Researchers are studying ways to prevent the abuse of generative AI by developing methods to use it against itself to detect instances of manipulation. “The same neural networks that generated the results can also identify these signatures, almost the markers of a neural network,” says Sarah Kreps, director and founder of the Cornell Tech Policy Institute.

One method of identifying these signatures is called “watermarking,” which involves placing a sort of “stamp” on the output of generative AI. This makes it possible to distinguish content that AI has produced or altered from content it has not. Studies are still underway, but this could become a practical way to identify AI-generated content.

Sarah Kreps compares researchers’ use of this marking method to the way teachers and professors check student submissions for plagiarism. It is possible to “scan a document to find this type of signature.”
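To make the idea of scanning for a signature concrete, here is a minimal toy sketch of one publicly discussed style of text watermarking, in which a generator favors a secret "green" subset of words and a detector counts how often they appear. The article does not say which scheme researchers or vendors actually use, so everything here is an assumption for illustration: the names `SECRET_KEY`, `GAMMA`, `is_green`, `watermark_z_score`, and `generate_marked` are hypothetical, and whitespace-separated words stand in for real model tokens.

```python
import hashlib
import math

GAMMA = 0.5              # assumed share of "green" words expected in unmarked text
SECRET_KEY = "demo-key"  # hypothetical secret shared by generator and detector

DEMO_VOCAB = [
    "model", "data", "image", "text", "bias", "school", "rule", "tool",
    "user", "report", "source", "quote", "error", "result", "topic", "class",
]


def is_green(prev_word: str, word: str) -> bool:
    """Decide from a keyed hash whether `word` is on the green list after `prev_word`."""
    digest = hashlib.sha256(f"{SECRET_KEY}|{prev_word}|{word}".encode()).digest()
    return digest[0] < GAMMA * 256


def watermark_z_score(words: list[str]) -> float:
    """Return how many standard deviations the green count lies above chance."""
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, cur) for prev, cur in pairs)
    n = len(pairs)
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


def generate_marked(vocab: list[str], length: int, start: str = "the") -> list[str]:
    """Toy 'generator' that emits a green word whenever the vocabulary offers one."""
    words = [start]
    for step in range(length):
        shift = step % len(vocab)
        candidates = vocab[shift:] + vocab[:shift]  # rotate to vary the output
        choice = next((w for w in candidates if is_green(words[-1], w)), candidates[0])
        words.append(choice)
    return words


marked = generate_marked(DEMO_VOCAB, 50)
plain = ("computer classes at school include lessons on finding reliable "
         "sources on the internet and the same must be true for ai").split()
print("marked z-score:", round(watermark_z_score(marked), 2))
print("plain z-score:", round(watermark_z_score(plain), 2))
```

A high z-score means far more green words than chance would produce, which is the statistical "stamp" the detector looks for; text written without the key should score near zero. A real system would work on model tokens and bias probabilities rather than force a choice, so treat this only as an illustration of the principle.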

“OpenAI is thoughtful about the types of values it encodes into its algorithms so as not to include erroneous information or contrary or contentious results,” Sarah Kreps tells ZDNET.

Digital literacy education

Computer classes at school include lessons on finding reliable sources on the Internet, citing them, and conducting research correctly. The same must be true for AI: users must be trained.

Today, the use of AI assistants such as Google Smart Compose and Grammarly is becoming common. “I think these tools will become so ubiquitous that in five years, people will wonder why we had these debates,” says Sarah Kreps. And while waiting for clear rules, she believes that “teaching people what to look for is part of digital literacy that goes hand in hand with more critical consumption of content.”

For example, it is common for even the newest AI models to create factually incorrect information. “These models make small factual errors, but present them in a very credible way,” explains Ms. Kreps. “They make up quotes that they attribute to someone, for example. And you have to be aware of that. Careful examination of the results allows you to detect all of that.”

But to do that, AI education should start at the most basic level. According to the Artificial Intelligence Index Report 2023, the teaching of AI and computer science from kindergarten through 12th grade has expanded since 2021 in 11 countries, including Belgium.

The classroom time allocated to AI-related topics covers, among others, algorithms and programming (18%), data literacy (12%), AI technologies (14%), and ethics of AI (7%). In an example curriculum from Austria, UNESCO states that “students also understand the ethical dilemmas associated with the use of these technologies.”

Beware of bias: concrete examples

Generative AI is capable of creating images from user-entered text. This has become problematic for AI art generators such as Stable Diffusion, Midjourney, and DALL-E, not only because of copyright concerns over the images involved, but also because the generated images show obvious gender and racial biases.

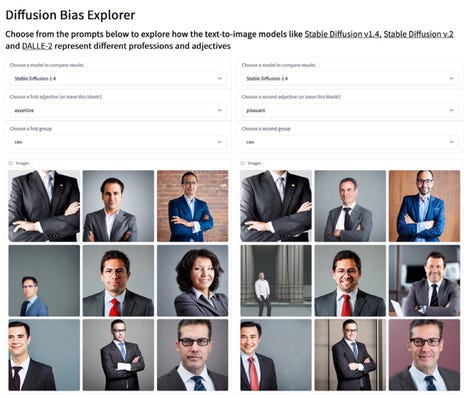

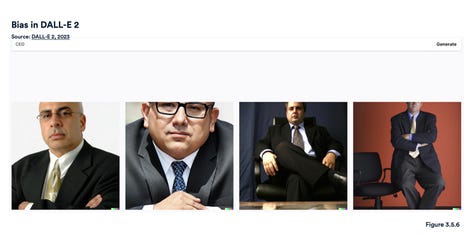

According to the Artificial Intelligence Index Report, Hugging Face’s “The Diffusion Bias Explorer” paired adjectives with occupations to see what types of images Stable Diffusion would produce. The generated images revealed how an occupation is coded with certain descriptive adjectives. For example, “CEO” predominantly generates images of men in suits, whether adjectives such as “pleasant” or “aggressive” are entered. DALL-E produces similar results: images of older, more serious men in suits.

Images from Stable Diffusion with the keyword “CEO” and different adjectives.

Images of a “CEO” generated by DALL-E.

Midjourney appears to have less bias. When asked to produce an “influential person,” it generated four older white men. When AI Index later repeated the request, one of the four images Midjourney produced showed a woman. Requests for images of “someone smart,” however, yielded four images of elderly white men wearing glasses.

Images of an “influential person” generated by Midjourney.

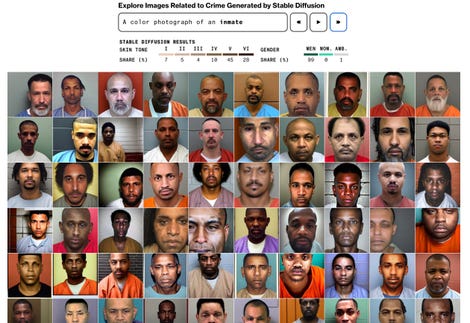

According to a Bloomberg article on generative AI bias, these text-to-image generators also exhibit obvious racial bias. Over 80% of images generated by Stable Diffusion with the keyword “inmate” contain people with darker skin. However, less than half of the American prison population is made up of people of color.

Images generated by Stable Diffusion for the keyword “inmate.”

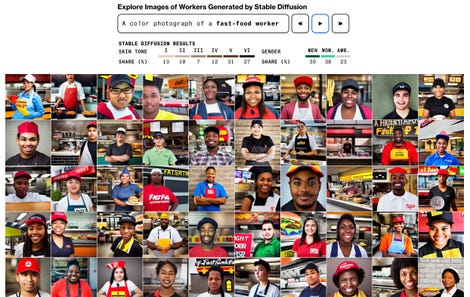

Furthermore, the keyword “fast food employee” yields images of people with darker skin in 70% of cases. In reality, 70% of fast food workers in the United States are white. For the keyword “social worker,” 68% of the generated images depicted people with darker skin, whereas 65% of social workers in the United States are white.

Images generated by Stable Diffusion for the keyword “fast food employee.”
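The size of the gap between generated images and real-world figures can be worked out from the percentages quoted above. The short sketch below does that arithmetic under one loudly stated assumption: that the real-world share of non-white workers is simply 100 minus the quoted white share, and that this is roughly comparable to "darker skin" in generated images. The two categories are not identical, so the result is only a rough indication. The names `FIGURES` and `overrepresentation` are hypothetical, and the inmate keyword is left out because the article gives only "less than half" rather than a number.

```python
# Percentages quoted in the article for each Stable Diffusion keyword.
FIGURES = {
    "fast food employee": {"generated_darker_skin": 70, "real_white": 70},
    "social worker": {"generated_darker_skin": 68, "real_white": 65},
}


def overrepresentation(generated_darker_skin: int, real_white: int) -> int:
    """Percentage-point gap between generated and real-world shares.

    Assumption: real non-white share = 100 - real white share.
    """
    real_non_white = 100 - real_white
    return generated_darker_skin - real_non_white


for keyword, shares in FIGURES.items():
    gap = overrepresentation(**shares)
    print(f"{keyword}: {gap} percentage points above the real-world share")
```

Under that assumption, the generated images overstate the share of people with darker skin by 40 percentage points for fast food employees and 33 points for social workers.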

How to regulate “dangerous” questions?

What topics should be banned from ChatGPT? “Should people be able to learn the most effective assassination tactics via AI?” asks Dr. Kreps. “This is just an example, but with an unmoderated version of AI you could ask this question or ask how to make an atomic bomb.”

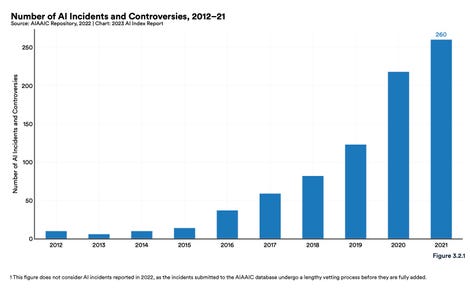

According to the Artificial Intelligence Index Report, between 2012 and 2021, the number of AI-related incidents and controversies increased 26-fold. As new AI capabilities spark more and more controversy, there is an urgent need to carefully consider what we put into these models.

Regardless of which regulations are ultimately implemented and when they take effect, the responsibility lies with the human using the AI. Rather than fearing the growing capabilities of generative AI, it is important to focus on the consequences of the data we feed into these models, so that we can recognize instances where AI is being used unethically and act accordingly.

Source: “ZDNet.com”