On the verge of becoming a key player in the high-bandwidth memory market, Micron says its HBM3E chips are sold out for 2024 and that most of its 2025 supply is already allocated. The announcement casts a shadow over GPU supply in the medium term.

Until now a secondary player in the high-bandwidth memory (HBM) market, Micron is making a breakthrough thanks to its new HBM3E memory. However, the company's latest statements suggest that supply of these chips could dry up in the medium term. That is an awkward situation, because it could create a bottleneck in the production of GPUs, particularly those dedicated to artificial intelligence, reports Tom's Hardware.

We also learn that ramping up HBM3E production, which is very demanding in wafers (the silicon wafers on which semiconductors are manufactured), could have direct consequences for the supply of conventional DRAM, which is found in many consumer devices. In other words, a new component shortage like the one that followed the Covid pandemic cannot be ruled out.

HBM3E memory, critical for Nvidia and its GPUs dedicated to AI

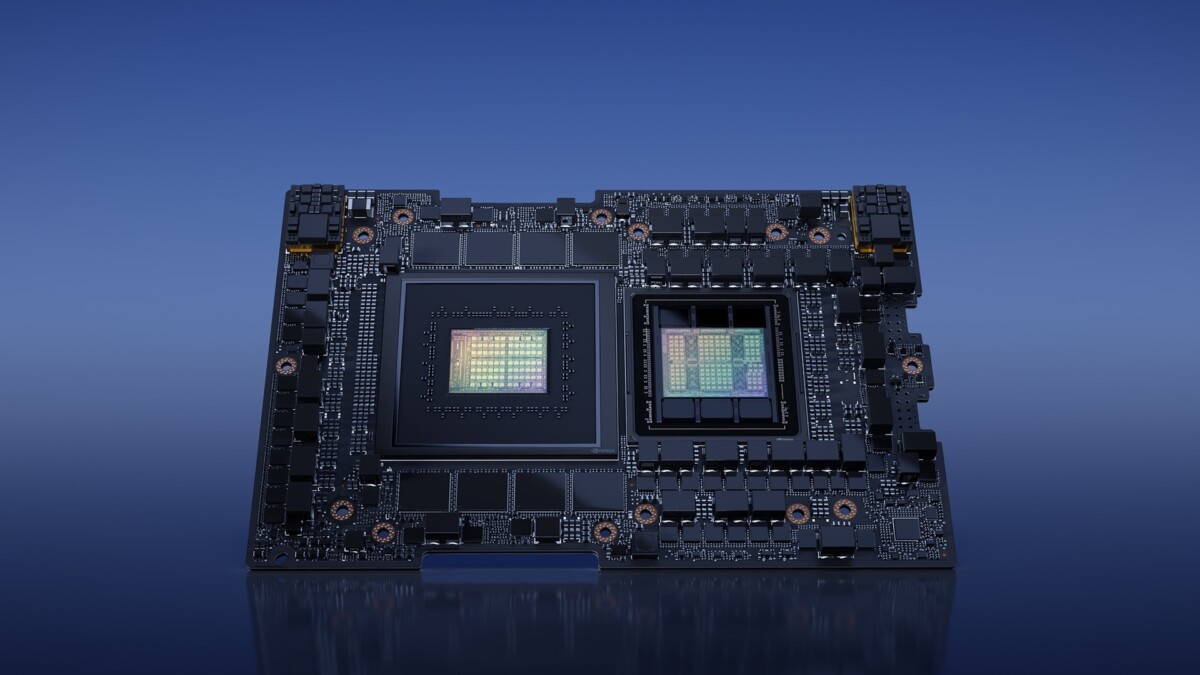

"Our HBM memory is sold out for 2024, and the vast majority of our supply for 2025 has already been allocated," said Sanjay Mehrotra, Micron's CEO, when presenting the company's latest results this week. For context, Micron's HBM3E memory (Micron was the first company in the world to commercially exploit this type of RAM) is used in Nvidia's new H200 GPU, designed for AI and supercomputers.

Nvidia uses no fewer than six HBM3E chips on each H200 GPU, which should allow Micron to capture a large share of the HBM market... at the risk of quickly hitting the limits of its production capacity, even though the company is trying to sound reassuring.

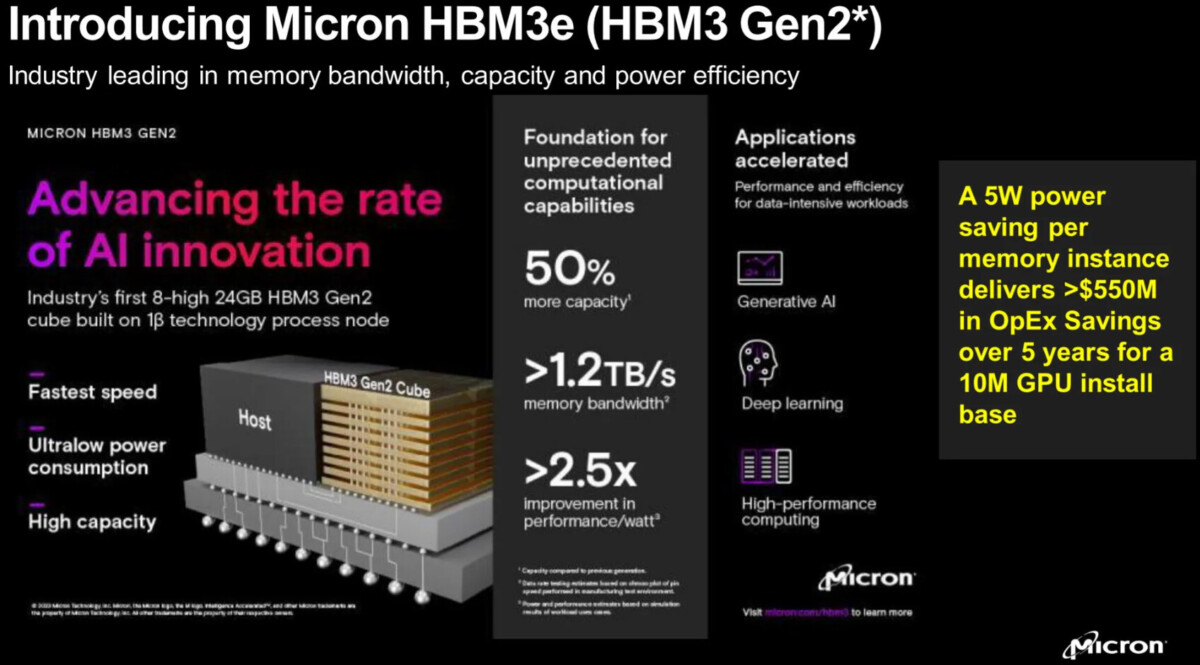

On the technical side, Micron's current HBM3E chips are 24 GB 8Hi (eight-die) stacks, offering a data transfer rate of more than 9.2 GT/s per pin and memory bandwidth of over 1.2 TB/s per stack. Six of these chips add up to 144 GB on paper; Nvidia specifies 141 GB of HBM3E memory for the H200.
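The capacity and bandwidth figures can be sanity-checked with a little arithmetic. The sketch below is a back-of-the-envelope calculation, not Micron's or Nvidia's methodology; it assumes the 1024-bit data interface used by each HBM stack, and the helper names are hypothetical.

```python
# Back-of-the-envelope HBM3E arithmetic (illustrative sketch, not a vendor formula).
# Assumption: each HBM stack exposes a 1024-bit data interface.
HBM_BUS_WIDTH_BITS = 1024


def stack_bandwidth_tb_s(pin_rate_gt_s: float, bus_width_bits: int = HBM_BUS_WIDTH_BITS) -> float:
    """Peak bandwidth of one stack in TB/s.

    Each pin moves one bit per transfer, so GT/s per pin x bus width gives Gb/s;
    dividing by 8 converts to GB/s, then by 1000 to TB/s.
    """
    return pin_rate_gt_s * bus_width_bits / 8 / 1000


def total_capacity_gb(stack_capacity_gb: int, stack_count: int) -> int:
    """Raw capacity of several identical stacks, before any capacity the vendor disables."""
    return stack_capacity_gb * stack_count


if __name__ == "__main__":
    for rate in (9.2, 9.6):  # 9.6 GT/s is a hypothetical higher pin rate, for comparison only
        print(f"{rate} GT/s per pin -> {stack_bandwidth_tb_s(rate):.2f} TB/s per stack")
    print(f"6 x 24 GB stacks -> {total_capacity_gb(24, 6)} GB raw")
```

At exactly 9.2 GT/s the result is about 1.18 TB/s, so the "over 1.2 TB/s" figure implies pin rates slightly above 9.2 GT/s. Likewise, six 24 GB stacks give 144 GB raw, a little more than the 141 GB Nvidia specifies for the H200.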

Micron intends to go further, however. Its next generation of 12Hi HBM chips should increase capacity by 50%, to 36 GB, making it possible to train ever-larger language models.
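The 50% gain comes from stacking more dies rather than from denser ones. A minimal sketch, assuming the 8Hi and 12Hi labels mean eight and twelve DRAM dies per stack; the function names are hypothetical.

```python
# Illustrative check of the 8Hi -> 12Hi capacity jump.
# Assumption: "8Hi" and "12Hi" mean 8 and 12 stacked DRAM dies per HBM stack.


def per_die_capacity_gb(stack_capacity_gb: float, dies_per_stack: int) -> float:
    """Capacity contributed by each DRAM die in a stack."""
    return stack_capacity_gb / dies_per_stack


def capacity_increase(old_gb: float, new_gb: float) -> float:
    """Relative capacity increase, e.g. 0.5 for +50%."""
    return new_gb / old_gb - 1


if __name__ == "__main__":
    print(per_die_capacity_gb(24, 8))   # 8Hi stack
    print(per_die_capacity_gb(36, 12))  # 12Hi stack
    print(f"{capacity_increase(24, 36):.0%}")
```

Both configurations work out to 3 GB per die, so the extra capacity comes entirely from the four additional dies (12 / 8 = 1.5).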

As mentioned above, this technological and industrial race for HBM memory could, on the other hand, create production problems for conventional DRAM modules, which are used on a much larger scale.

"Since the manufacturing of HBM modules involves the production of specialized DRAM, the ramp-up of HBM chips will significantly affect Micron's ability to manufacture DRAM ICs for mainstream applications," explains Tom's Hardware.

Micron has already acknowledged the problem. "HBM production ramp-up will limit growth in non-HBM product offering," Sanjay Mehrotra said. "Across the industry, HBM3E memory consumes approximately three times more wafers than standard DDR5 (…)". That is enough to raise fears of possible shortages next year.
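To see why an HBM ramp squeezes conventional DRAM, here is a toy model built on the roughly 3x wafer ratio Mehrotra cites. It is a simplified illustration, not Micron's planning model: it assumes a fixed wafer budget, treats DDR5 bit output per wafer as the unit, and the 20% share of wafers moved to HBM is a purely hypothetical figure.

```python
# Toy model of a fixed wafer budget split between HBM and conventional DRAM.
# Assumptions (hypothetical): bit output per DDR5 wafer is normalized to 1.0, and HBM3E
# needs about 3x the wafers of DDR5 for the same number of bits (the ratio quoted above).


def bit_output(total_wafers: float, hbm_share: float, hbm_wafer_ratio: float = 3.0) -> tuple[float, float]:
    """Return (non-HBM bits, HBM bits) in DDR5-wafer-equivalent units."""
    if not 0.0 <= hbm_share <= 1.0:
        raise ValueError("hbm_share must be between 0 and 1")
    hbm_wafers = total_wafers * hbm_share
    dram_bits = total_wafers - hbm_wafers      # 1 unit of bits per DDR5 wafer
    hbm_bits = hbm_wafers / hbm_wafer_ratio    # 3x wafers per bit -> 1/3 unit per wafer
    return dram_bits, hbm_bits


if __name__ == "__main__":
    dram, hbm = bit_output(total_wafers=100, hbm_share=0.20)
    print(f"conventional DRAM bits: {dram:.1f}, HBM bits: {hbm:.1f}, total: {dram + hbm:.1f} (vs 100.0)")
```

Under these assumptions, moving a fifth of the wafers to HBM cuts conventional DRAM output by 20%, while total bit output falls to about 87% of the all-DDR5 baseline.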