The new Vision Pro headset is an entirely new kind of device in the Apple ecosystem. The company gave details about the device itself during its two-hour-plus WWDC 2023 keynote, but it saved the developer details for a later event, the Platforms State of the Union.

I attended that presentation, and in this article I list the main development information for VisionOS.

Let’s start with a little background.

VisionOS is the operating system designed for what Apple calls “spatial computing”. The company distinguishes this computing paradigm from the two paradigms we are most familiar with, namely desktop computing and mobile computing. The idea of spatial computing is that your work environment floats in front of you.

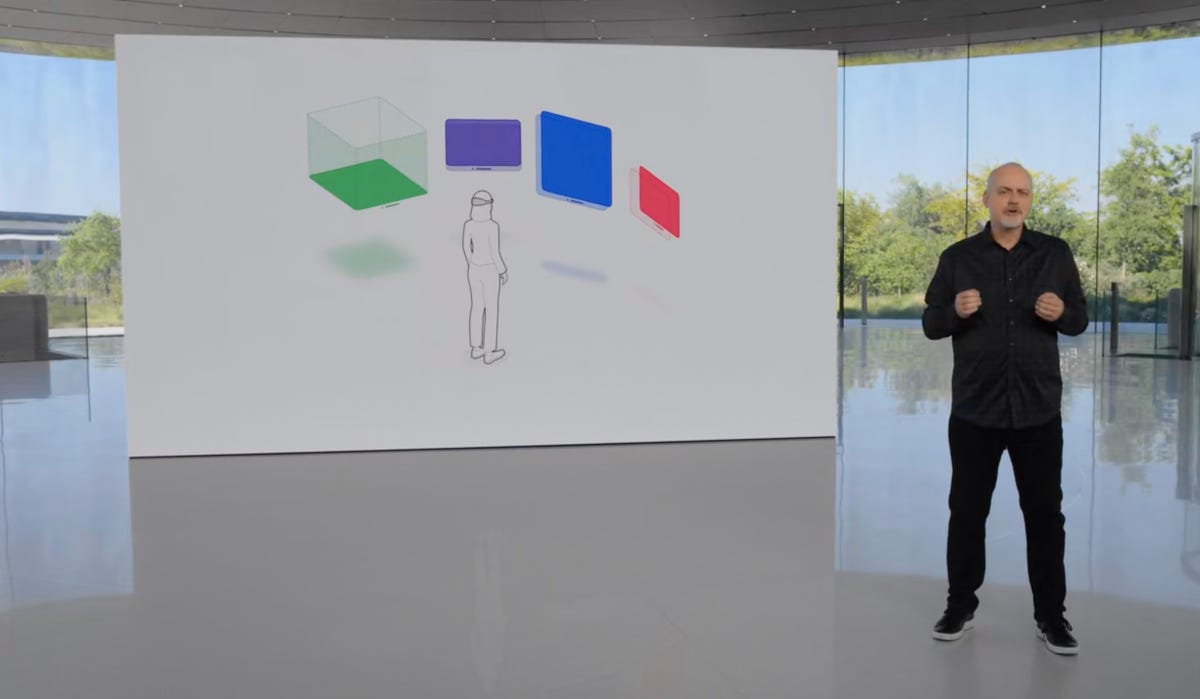

The “shared space” is where apps float side by side. Imagine several windows arranged next to each other, not on a desktop but in the air.

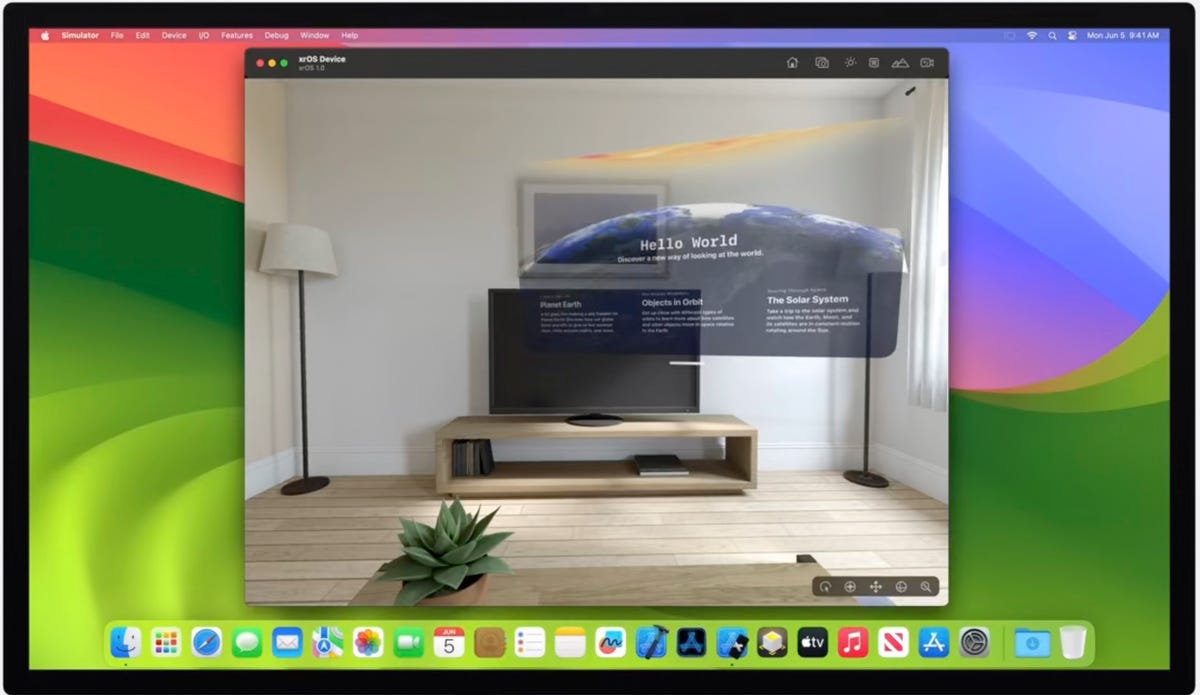

The Shared Space / Screenshot by David Gewirtz/ZDNET

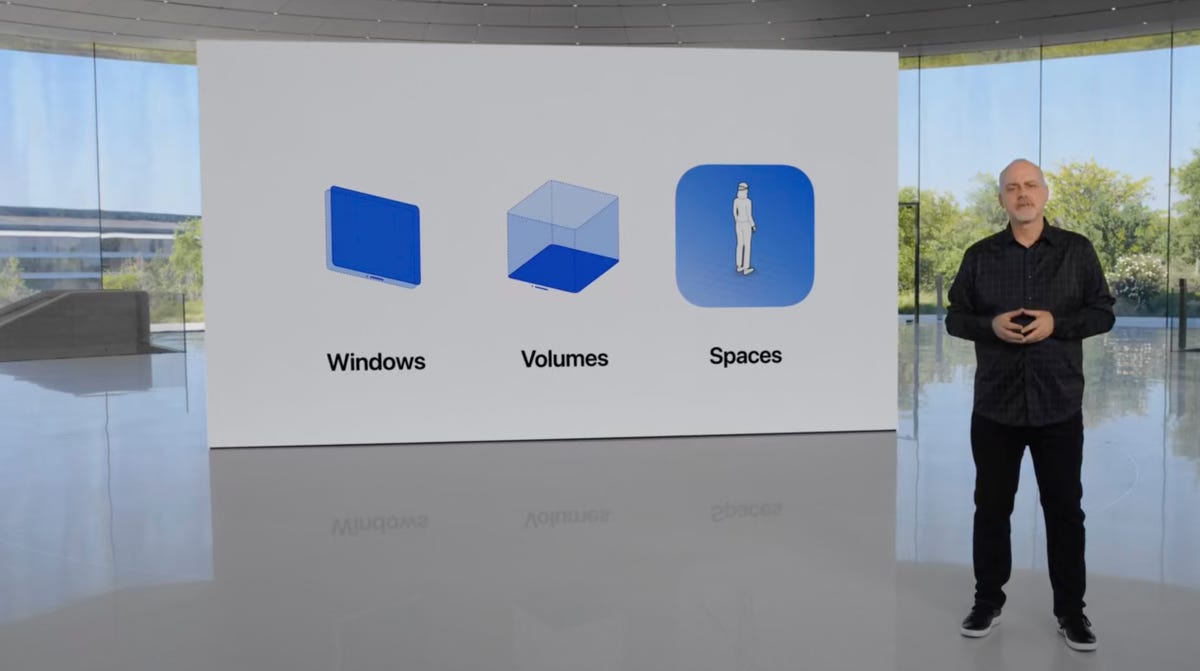

Users can open one or more windows that exist as planes in space. These windows support traditional views and controls, but also 3D content, which can share a window with 2D content. In a CAD program, for example, the object might be 3D while the toolbar is 2D.

Screenshot by David Gewirtz/ZDNET

Beyond windows, applications can create three-dimensional volumes. These can contain objects and scenes. The key difference is that volumes can be moved around in 3D space and viewed from all angles. It’s the difference between looking into a store window and walking around a car, looking in through the front, rear, and side windows.

For developers who want total immersion, it is possible to create a complete dedicated space. It’s like when a game takes up the whole screen, but in the VisionOS experience, that screen is totally immersive. Here, applications, windows, and volumes work inside the fully immersive environment.
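To make the three paradigms concrete, here is a minimal SwiftUI sketch that declares one scene of each kind: a window, a volume, and an immersive space. The scene identifiers (`"main"`, `"globe"`, `"immersive"`), the type names, and the sphere content are my own illustrative assumptions, not code Apple showed in the presentation.

```swift
import SwiftUI
import RealityKit

// Hypothetical helper: a plain blue sphere used as placeholder 3D content.
@MainActor
func makeSphere(radius: Float) -> ModelEntity {
    ModelEntity(
        mesh: .generateSphere(radius: radius),
        materials: [SimpleMaterial(color: .blue, isMetallic: false)]
    )
}

@main
struct SpatialSketchApp: App {
    // .mixed keeps the real room visible; .full replaces it entirely.
    @State private var immersionStyle: ImmersionStyle = .mixed

    var body: some Scene {
        // A window: a 2D plane that floats in the shared space.
        WindowGroup(id: "main") {
            MainView()
        }

        // A volume: bounded 3D content that can be viewed from any angle.
        WindowGroup(id: "globe") {
            RealityView { content in
                content.add(makeSphere(radius: 0.15))
            }
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.5, height: 0.5, depth: 0.5, in: .meters)

        // A dedicated space: the app takes over the user's surroundings.
        ImmersiveSpace(id: "immersive") {
            RealityView { content in
                content.add(makeSphere(radius: 0.3))
            }
        }
        .immersionStyle(selection: $immersionStyle, in: .mixed, .full)
    }
}

struct MainView: View {
    @Environment(\.openWindow) private var openWindow
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 16) {
            Button("Show volume") { openWindow(id: "globe") }
            Button("Enter immersive space") {
                Task { _ = await openImmersiveSpace(id: "immersive") }
            }
        }
        .padding()
    }
}
```

The window and the volume can sit alongside other apps, while opening the immersive space is an explicit action the user triggers.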

Now that you understand the virtual paradigms used by VisionOS, let’s take a look at seventeen things developers need to know about developing for VisionOS.

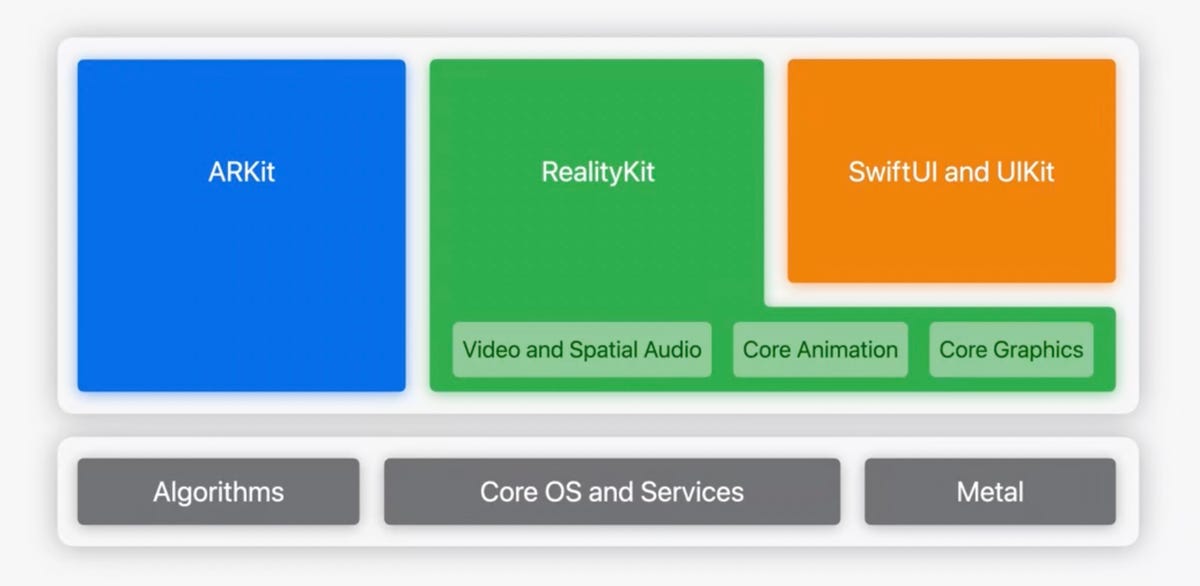

1. The development tools and libraries used for VisionOS will be familiar to many Apple developers

Development is based on SwiftUI, RealityKit, and ARKit, which have been part of the Apple ecosystem for quite some time. Apple has extended these frameworks for VisionOS, adding support for the new hardware.

2. VisionOS is an extension of iOS and iPadOS

Developers will use SwiftUI and UIKit to build the app UI for Apple’s VR headset. RealityKit is used to display 3D content, animations and visual effects.

ARKit allows apps to understand the real space around the user and makes that understanding accessible to code in an app.

3. All apps will need to exist in 3D space

Even 2D apps brought over from iOS or iPadOS will float in space. Whether the backdrop is the real room the user is sitting in or a simulated environment that blocks out the real world, even traditional apps will “float” in 3D space.

4. VisionOS offers a new destination for building apps

Previously, Xcode developers could choose iPhone, iPad, and Mac as destinations (i.e., where the app would run). Now developers can add VisionOS to that list.

As soon as the application is rebuilt for the new destination, it picks up VisionOS features, including resizable windows and adaptive translucency.
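When one codebase targets several destinations, platform-specific code is usually fenced off with Swift's conditional compilation. The sketch below is a minimal illustration of that idea; the function name `currentDestination` is hypothetical, and the `os(visionOS)` condition assumes a Swift toolchain recent enough to know the platform.

```swift
import Foundation

/// Returns a label for the destination this code was compiled for.
/// Hypothetical helper, used here only to show the `#if os(...)` pattern.
func currentDestination() -> String {
    #if os(visionOS)
    return "visionOS"
    #elseif os(iOS)
    return "iOS"
    #elseif os(macOS)
    return "macOS"
    #else
    return "other"
    #endif
}

print("Built for \(currentDestination())")
```

The same pattern can wrap imports or view modifiers that only exist on one platform, so the rest of the app compiles unchanged everywhere else.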

5. Old UIKit apps (not built with Swift and SwiftUI) can be recompiled for VisionOS

They will then benefit from the 3D highlighting and presence features of VisionOS.

So even if UIKit and Objective-C-based apps can’t deliver a fully immersive 3D experience, they will get the native look and feel of VisionOS and can coexist relatively seamlessly with more modern SwiftUI-based apps.

6. Traditional UI elements (like controls) get a new Z-offset option

This option allows developers to position panels and controls in 3D space, allowing certain interface elements to float in front of or behind other elements. Developers can thus draw attention to certain elements.
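In SwiftUI on VisionOS, this shows up as a depth variant of the familiar offset modifier. Here is a minimal sketch, assuming a simple card that moves toward the viewer when a toggle is switched on; the view name and the 40-point value are illustrative choices of mine.

```swift
import SwiftUI

struct FloatingCardView: View {
    @State private var isRaised = false

    var body: some View {
        VStack(spacing: 24) {
            Text("Details")
                .font(.title)
                .padding()
                .glassBackgroundEffect()
                // Positive z values move the card toward the viewer.
                .offset(z: isRaised ? 40 : 0)
                .animation(.easeInOut, value: isRaised)

            Toggle("Raise card", isOn: $isRaised)
                .frame(width: 240)
        }
        .padding()
    }
}
```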

7. VisionOS uses eye tracking to enable dynamic foveation

Foveation is an image processing technique in which some areas of an image are more detailed than others. With VisionOS, the Vision Pro headset uses eye tracking to render the area of the scene being viewed in very high resolution, but reduces the resolution in peripheral vision.

This helps reduce processing time in areas where the user is not paying full attention. Developers don’t need to code for it. It’s built into the operating system.
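To get a feel for the idea, here is a purely conceptual sketch of a resolution falloff around the gaze point. It is not Apple's implementation and not something an app would ever write; the function name, the linear falloff, and every threshold value are assumptions chosen only for illustration.

```swift
import Foundation

/// Conceptual model only: full resolution inside `fovealRadius` degrees of the
/// gaze point, then a linear drop to `minimumScale` at `peripheryRadius` degrees.
func resolutionScale(angleFromGaze degrees: Double,
                     fovealRadius: Double = 10,
                     peripheryRadius: Double = 40,
                     minimumScale: Double = 0.25) -> Double {
    if degrees <= fovealRadius { return 1.0 }
    if degrees >= peripheryRadius { return minimumScale }
    // Fraction of the way from the foveal edge to the periphery edge.
    let t = (degrees - fovealRadius) / (peripheryRadius - fovealRadius)
    return 1.0 - t * (1.0 - minimumScale)
}

print(resolutionScale(angleFromGaze: 0))   // region being looked at
print(resolutionScale(angleFromGaze: 25))  // halfway to the periphery
print(resolutionScale(angleFromGaze: 60))  // far periphery
```

With these assumed defaults, content 25 degrees away from the gaze point would be rendered at 62.5% of full resolution, and anything beyond 40 degrees at 25%.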

8. Object lighting is derived from current spatial conditions

By default, objects floating in 3D space benefit from the lighting and shadow characteristics of the space where the user is wearing the headset.

Developers can provide an image-based lighting asset if they want to customize how objects are lit in virtual space.
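A minimal sketch of what that customization can look like in RealityKit follows. The function name is my own, and `"StudioLight"` is a hypothetical environment resource that would have to exist in the app bundle; if it cannot be loaded, the entity simply keeps the default lighting.

```swift
import RealityKit

/// Lights `entity` with a custom image-based lighting asset.
/// "StudioLight" is a hypothetical resource name, assumed for this sketch.
@MainActor
func applyCustomLighting(to entity: Entity) async {
    guard let environment = try? await EnvironmentResource(named: "StudioLight") else {
        return // Keep the default lighting if the asset is missing.
    }
    // The light source component carries the lighting image...
    entity.components.set(
        ImageBasedLightComponent(source: .single(environment), intensityExponent: 0)
    )
    // ...and the receiver component tells the entity to be lit by it.
    entity.components.set(ImageBasedLightReceiverComponent(imageBasedLight: entity))
}
```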

9. ARKit provides apps with a usable model of where the device is being used

It uses plane estimation to identify flat surfaces in the room where the headset is used. Scene reconstruction builds a dynamic 3D model of the room space that applications can interact with.

Image anchoring allows 2D graphics to be fixed to a place in 3D space, making them appear to be part of the real world.
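As a rough sketch of how an app might consume plane data, the function below starts an ARKit session with a plane detection provider and logs each surface as it is found, updated, or removed. The function name and the logging are my own assumptions, and the sketch leaves out the permission prompt and the immersive space that an app needs before it receives this kind of data.

```swift
import ARKit

/// Runs plane detection and logs every surface ARKit reports.
func trackPlanes() async {
    // Providers can be unavailable, for example outside a real device.
    guard PlaneDetectionProvider.isSupported else { return }

    let session = ARKitSession()
    let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    do {
        try await session.run([planes])
        for await update in planes.anchorUpdates {
            switch update.event {
            case .added, .updated:
                print("Plane \(update.anchor.id): \(update.anchor.classification)")
            case .removed:
                print("Plane \(update.anchor.id) removed")
            }
        }
    } catch {
        print("ARKit session failed: \(error)")
    }
}
```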

10. ARKit on VisionOS offers skeletal hand tracking and accessibility features

These features provide apps with positioning data and joint mapping, so gestures can more fully control the virtual experience. Accessibility features allow users to interact with eye movement, voice, and head movement in addition to hand movements.
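The joint data can be read in much the same way as other ARKit data on the headset. The sketch below, with a function name of my own choosing, follows the tip of each index finger and prints its position; a real app would feed these values into its gesture logic instead of logging them, and would need the user's permission for hand tracking first.

```swift
import ARKit

/// Logs the world-space position of each tracked index fingertip.
func trackIndexFingers() async {
    guard HandTrackingProvider.isSupported else { return }

    let session = ARKitSession()
    let hands = HandTrackingProvider()

    do {
        try await session.run([hands])
        for await update in hands.anchorUpdates {
            let hand = update.anchor
            guard hand.isTracked,
                  let tip = hand.handSkeleton?.joint(.indexFingerTip),
                  tip.isTracked else { continue }

            // World pose of the joint = hand pose combined with the joint's pose.
            let transform = hand.originFromAnchorTransform * tip.anchorFromJointTransform
            let position = transform.columns.3
            print("\(hand.chirality) index tip: x=\(position.x) y=\(position.y) z=\(position.z)")
        }
    } catch {
        print("Hand tracking failed: \(error)")
    }
}
```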

11. Unity has been layered on top of RealityKit

Apple has partnered with Unity so that Unity developers can target VisionOS directly from Unity. This allows Unity-based content to migrate to VisionOS-based apps without much conversion effort.

This is quite important, because it lets developers with extensive Unity experience build Unity-based apps that run alongside other VisionOS apps.

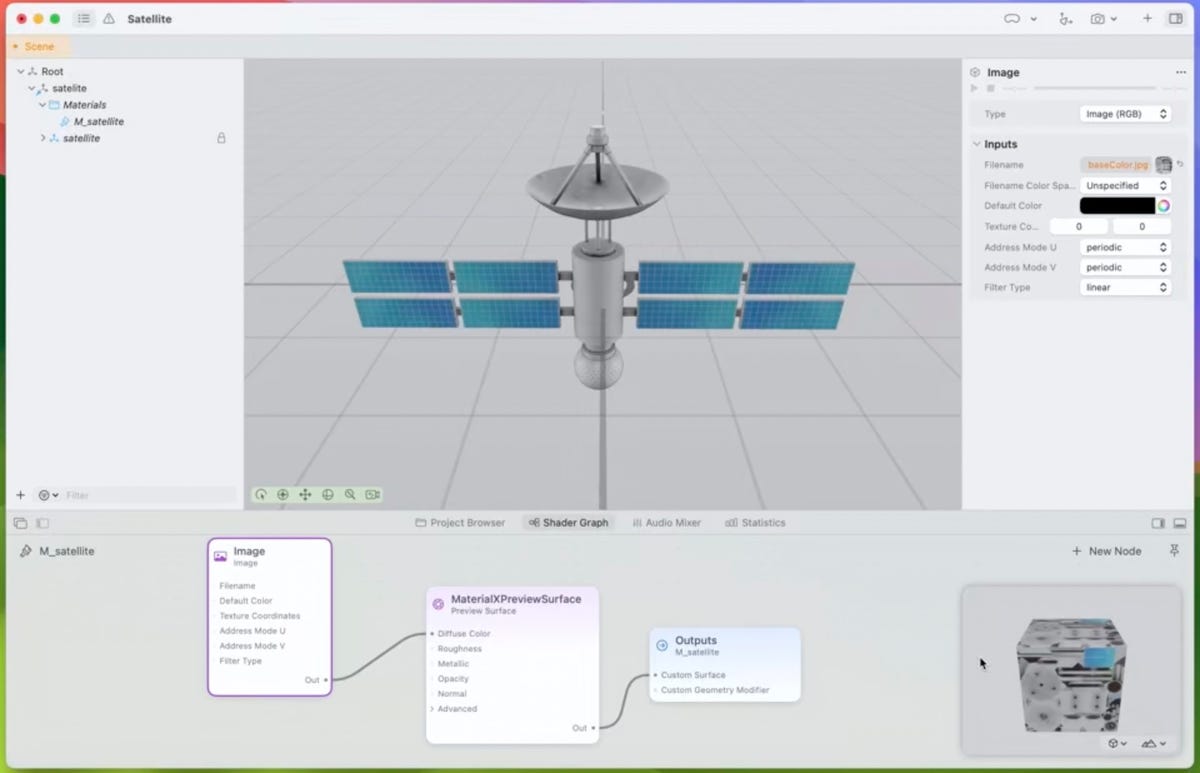

Reality Composer Pro. Apple

12. Reality Composer Pro is a new developer tool for previewing and preparing 3D content

It is an asset manager for 3D and virtual content. It also allows developers to create custom materials, test shaders, integrate these assets into the Xcode development process, and preview them in the Vision Pro headset.
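On the code side, content prepared in Reality Composer Pro is typically loaded by name from a Swift package in the Xcode project. The sketch below assumes that package is called `RealityKitContent` and exposes a `realityKitContentBundle` value, as in Xcode's app template, and that the project contains a scene named `"Scene"`; all three are assumptions about the project setup, so the snippet will not compile without them.

```swift
import SwiftUI
import RealityKit
import RealityKitContent // Assumed: the package holding Reality Composer Pro assets.

struct ComposedSceneView: View {
    var body: some View {
        RealityView { content in
            // "Scene" is an assumed scene name inside the content package.
            if let scene = try? await Entity(named: "Scene", in: realityKitContentBundle) {
                content.add(scene)
            }
        }
    }
}
```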

13. Processing of shared space is done on the device

This means that the room’s visuals and mapping remain private. Cloud processing is not used for 3D mapping.

All personal information and spatial dynamics of the room are entirely managed inside the Vision Pro device.

14. For those without a device, Xcode provides previews and a simulator

This allows you to get an idea of what your application will look like and test it. Preview mode lets you see your layout in Xcode, while Simulator is a dedicated on-screen environment for testing general app behavior. You can simulate gestures using a keyboard, trackpad, or gamepad.
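A preview is declared right next to the view it renders. Here is a minimal sketch using the `#Preview` macro; the view and its text are placeholders of my own.

```swift
import SwiftUI

struct GreetingView: View {
    var body: some View {
        Text("Hello, spatial world")
            .font(.largeTitle)
            .padding()
    }
}

// Renders the view in Xcode's preview canvas, no device required.
#Preview {
    GreetingView()
}
```

The preview covers layout only. Behavior that depends on gestures or on moving around the scene is what the simulator is for.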

Xcode/Apple Simulator

15. For those with a Vision Pro headset, it is possible to code entirely in virtual space

The Vision Pro headset extends Mac desktops into virtual space, meaning you can have your Xcode development environment side-by-side with your Vision Pro application.

16. There will be a dedicated app store for the Vision Pro

Apps, along with their in-app purchases, can be downloaded and bought from the dedicated Vision Pro app store.

Additionally, TestFlight works as expected with Xcode and VisionOS, so developers will be able to distribute app betas in exactly the same way as for the iPhone and iPad.

17. Apple is preparing a number of resources to help with coding

The VisionOS SDK, Xcode update, simulator and Reality Composer Pro will be available soon. Apple is also setting up Apple Vision Pro development labs.

The labs will be located in London, Munich, Shanghai, Singapore, Tokyo, and Cupertino, and developers will be able to visit them to test their apps. Developers who can’t visit one of Apple’s sites can submit requests for Apple to evaluate and test their apps and provide feedback. Apple did not mention a turnaround time for these requests.

Vision Pro for developers: what do you think?

Further development information for Vision Pro and VisionOS is available on the Apple Developer website.

Are you a developer? If so, do you plan to develop for the Vision Pro? Are you a user? Do you see any immediate use for this device, or do the $3,500 price tag and goggle-like user experience put you off? Please let us know in the comments below.

Source: “ZDNet.com”